It is no secret that industries increasingly rely on robotics for efficiency and accuracy. From manufacturing to healthcare, robots are being integrated into many fields to streamline processes and improve outcomes. According to one market study, the global robotics market is expected to reach $165.2 billion by the end of 2029, growing at a compound annual growth rate (CAGR) of 16.1%.

However, despite this growth, one major challenge remains: enabling robots to accurately perceive their surroundings.

Perception is paramount for any robot, because it enables the robot to make informed decisions based on its operating environment. However, single-sensor perception systems, such as those built on a camera or a LiDAR (light detection and ranging) unit alone, are limited in the kinds of data they can collect and interpret. This is where multi-sensor fusion techniques come into play, providing a more comprehensive understanding of the surroundings.

Multi-sensor fusion involves combining data from multiple sensors to create a more comprehensive and accurate understanding of the environment. Using multiple sensors, robots can gather a wider range of information and create a more detailed map of their surroundings.

In this blog post, we explore how multi-sensor fusion is revolutionizing perception and shaping the future of robotics.

Sensors Commonly Used in Robotics

Sensors are the eyes, ears, and tactile inputs of robots, providing the data they need to perceive and interact with their environment. The various types of sensors used today in robots and autonomous vehicles include cameras, LiDAR, IMUs (Inertial Measurement Units), radar, sonar, and tactile sensors.

Cameras

Cameras, specifically embedded vision cameras, are essential for capturing visual data, enabling robots to recognize objects, track motion, and navigate complex environments. However, a single (monocular) camera does not directly measure depth, and cameras may struggle in low-light or obscured conditions.

LiDAR

LiDAR (Light Detection and Ranging) uses laser pulses to measure distances and create precise 3D maps of the surroundings. LiDAR excels at depth perception but can be affected by weather conditions such as heavy rain or fog.

IMUs (Inertial Measurement Units)

An IMU monitors a robot’s orientation, motion, and acceleration, offering real-time feedback for balance and stability. However, relying solely on IMUs leads to errors that accumulate over time due to sensor drift, because IMUs measure only relative motion and provide no information about the robot’s absolute position in space.
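To see why drift matters, the short Python sketch below double-integrates the readings of an accelerometer that has a small constant bias while the robot stands still. The 100 Hz sample rate and the 0.01 m/s² bias are illustrative assumptions, not figures for any particular IMU.

```python
def integrate_position(accels, dt):
    """Dead reckoning: integrate acceleration samples (m/s^2) twice to get
    position (m), starting at rest at the origin (simple Euler integration)."""
    velocity, position = 0.0, 0.0
    for a in accels:
        velocity += a * dt
        position += velocity * dt
    return position

DT = 0.01      # 100 Hz IMU sample period (assumed)
BIAS = 0.01    # constant accelerometer bias in m/s^2 (assumed)

for seconds in (10, 60, 120):
    n = round(seconds / DT)
    # The robot is stationary, so the IMU reports nothing but its bias.
    drift = integrate_position([BIAS] * n, DT)
    print(f"after {seconds:>3} s: position error {drift:.2f} m")
```

For a constant bias b, the error grows roughly as 0.5 × b × t², so a bias too small to notice over a few seconds produces tens of meters of error within a couple of minutes. Fusing the IMU with a sensor that observes absolute position, such as GPS or LiDAR-based localization, keeps this drift bounded.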

Radar and Sonar

Radar uses radio waves and sonar uses sound waves to detect objects, including in conditions where cameras and LiDAR struggle. These sensors provide reliable distance measurements but typically have lower resolution than LiDAR or cameras.
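LiDAR, pulsed radar, and sonar all estimate range from time of flight: the sensor emits a pulse, times the echo, and halves the round-trip distance. The Python sketch below shows that arithmetic and why light-based sensors need far finer timing than sonar. The speed-of-sound value assumes dry air at about 20 °C.

```python
# Time-of-flight ranging: range = propagation speed * round-trip time / 2.
SPEED_OF_LIGHT = 299_792_458.0   # m/s, used by LiDAR and pulsed radar
SPEED_OF_SOUND = 343.0           # m/s, sonar in air at ~20 °C (assumed)

def tof_range(round_trip_s, speed_m_per_s):
    """Distance to a target from the measured round-trip time of an echo."""
    return speed_m_per_s * round_trip_s / 2.0

# An obstacle 10 m away returns an echo after these round-trip times:
light_echo_s = 2 * 10.0 / SPEED_OF_LIGHT   # about 66.7 nanoseconds
sound_echo_s = 2 * 10.0 / SPEED_OF_SOUND   # about 58.3 milliseconds

print(f"LiDAR/radar echo: {light_echo_s * 1e9:.1f} ns")
print(f"sonar echo:       {sound_echo_s * 1e3:.1f} ms")

# Range error caused by a 1 ns timing error for a light-based sensor:
print(f"1 ns timing error -> {tof_range(1e-9, SPEED_OF_LIGHT) * 100:.1f} cm")
```

A 1 ns timing error already costs about 15 cm of range for a light-based sensor, while sonar only has to resolve milliseconds; this is part of why LiDAR hardware is more demanding to build.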

Tactile Sensors

Tactile sensors mimic the human sense of touch, enabling robots to detect pressure, texture, and temperature. However, relying on tactile sensors alone may not provide enough contextual awareness of a robot’s surroundings.

Understanding Multi-Sensor Fusion

Multi-sensor fusion is the process of integrating data from various sensors to create a more accurate and reliable perception of the environment. By combining the strengths of different sensor types, robots can overcome the limitations of individual sensors and achieve a more comprehensive understanding of their surroundings.

The fusion of multiple sensors allows robots to cross-check data, validate information, and fill in gaps, resulting in more robust and reliable decision-making. Fusion techniques such as Kalman filters, particle filters, or deep learning-based approaches (covered in detail below) are used to combine sensor data in real time.

This technique not only enhances the robot’s understanding of its environment but also improves its ability to respond to dynamic changes, making robots smarter and safer for a wide range of applications.

Benefits of Multi-Sensor Fusion

Multi-sensor fusion offers several advantages that significantly enhance robotic perception, accuracy, and overall functionality.

Improved Accuracy and Reliability

One of the most significant advantages of multi-sensor fusion is the increased accuracy and reliability it brings to robotic systems. Individual sensors have limitations, such as susceptibility to environmental factors, signal interference, or inaccuracies in measurement. When data from multiple sensors is fused, the weaknesses of each sensor are mitigated.
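A minimal way to see this is inverse-variance weighting, the simplest form of statistical fusion: two independent, unbiased measurements of the same quantity are averaged with weights proportional to their precision. The Python sketch below assumes Gaussian-like errors with known variances; the sensor readings are hypothetical.

```python
def fuse(z1, var1, z2, var2):
    """Fuse two independent, unbiased measurements of the same quantity by
    inverse-variance weighting. Returns (estimate, variance)."""
    w1, w2 = 1.0 / var1, 1.0 / var2
    estimate = (w1 * z1 + w2 * z2) / (w1 + w2)
    variance = 1.0 / (w1 + w2)
    return estimate, variance

# Hypothetical readings of the distance to the same obstacle:
lidar = (4.02, 0.02 ** 2)   # 4.02 m, standard deviation 2 cm (assumed)
radar = (4.10, 0.10 ** 2)   # 4.10 m, standard deviation 10 cm (assumed)

est, var = fuse(*lidar, *radar)
print(f"fused: {est:.3f} m, std dev {var ** 0.5 * 100:.2f} cm")
```

The fused standard deviation (about 1.96 cm) is smaller than that of either sensor alone, and the estimate leans toward the more precise LiDAR reading. The correction step of the Kalman filter, described later, applies this same weighting recursively.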

Enhanced Environmental Perception

Robots must navigate dynamic environments, which can be challenging when relying on a single sensor. Multi-sensor fusion allows the robot to perceive obstacles, people, and objects with higher accuracy, even in challenging environments. This expanded environmental awareness is crucial for applications like smart cars, drones, and industrial robots.

Greater Flexibility and Adaptability

With the integration of multiple sensors, robots can become more adaptable to various scenarios. By relying on different types of sensors suited for different tasks or environments, robots can adjust their perception and behavior accordingly. This flexibility allows robots to handle a wide range of tasks, from complex industrial automation to autonomous navigation in unpredictable environments.

Robustness in Dynamic Environments

Robots often operate in environments that change rapidly, such as factories, hospitals, or outdoor settings. The integration of real-time data from different sensors allows robots to adapt to dynamic conditions and make quicker, more accurate decisions. This robustness is especially beneficial in applications requiring high levels of precision and safety, such as robotic surgery, search and rescue operations, or drone navigation in forests.

Increased Safety

Safety is paramount when it comes to robotics, especially in environments with humans. Multi-sensor fusion significantly enhances safety by ensuring that robots can detect obstacles and hazards with greater accuracy. This leads to fewer accidents and better overall safety in operations.

Techniques for Multi-Sensor Fusion

Kalman Filtering

Kalman filtering is one of the most popular techniques for sensor fusion, particularly for tasks involving estimation and prediction. The algorithm uses a series of measurements observed over time, which contain statistical noise and other uncertainty, to estimate the state of a dynamic system. In robotics, Kalman filters are typically used to fuse data from IMUs and other motion sensors to estimate the position and velocity of the robot.

Kalman filters work in two main steps: prediction and correction. In the prediction step, the system uses previous knowledge of the robot’s state (such as velocity and position) to predict its next state. The correction step adjusts the prediction based on the new sensor data, helping refine the estimate. This process is repeated continuously to ensure the robot’s position and motion are as accurate as possible.
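The following Python sketch shows the two steps for the simplest case: a robot moving along a line, predicted from a commanded velocity and corrected with a noisy absolute position sensor. The class name, noise values, and scenario are illustrative assumptions; a real robot would track a multi-dimensional state with matrix-valued covariances.

```python
import random

class Kalman1D:
    """Scalar Kalman filter for a robot moving along a line.
    State: position x (m). Prediction uses a commanded velocity u (m/s);
    correction uses a noisy absolute position measurement z (m)."""

    def __init__(self, x0, p0, q, r):
        self.x = x0  # position estimate
        self.p = p0  # variance of the estimate
        self.q = q   # process noise variance added at each prediction
        self.r = r   # measurement noise variance

    def predict(self, u, dt):
        # Motion model: x_k = x_{k-1} + u * dt; uncertainty grows by q.
        self.x += u * dt
        self.p += self.q

    def correct(self, z):
        # The gain weighs the measurement against the prediction by their variances.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= 1.0 - k

random.seed(0)
kf = Kalman1D(x0=0.0, p0=1.0, q=0.01, r=0.25)   # sensor std dev 0.5 m -> r = 0.25
true_x, u, dt = 0.0, 1.0, 0.1
for _ in range(50):
    true_x += u * dt                         # the robot really moves at u
    kf.predict(u, dt)
    z = true_x + random.gauss(0.0, 0.5)      # noisy position reading (assumed sensor)
    kf.correct(z)

print(f"true {true_x:.2f} m, estimate {kf.x:.2f} m, std dev {kf.p ** 0.5:.2f} m")
```

The Kalman gain `k` does the balancing: when the prediction is uncertain (large `p`), the filter trusts the measurement more, and vice versa. After a few steps, the estimate's standard deviation settles well below the sensor's own 0.5 m noise.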

However, the standard Kalman filter assumes linear motion and measurement models with Gaussian noise, which may not always hold. For nonlinear systems, such as robots moving through complex terrain, variants like the Extended Kalman Filter (EKF) or Unscented Kalman Filter (UKF) are used instead.
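The EKF handles a nonlinear model by linearizing it around the current estimate at every step. In the Python sketch below, the robot moves along a straight track and measures its straight-line range to a beacon mounted above the track, which is a nonlinear function of position. The beacon geometry and noise levels are assumptions for illustration. A UKF (not shown) would instead pass a small set of sigma points through the nonlinear function rather than using its derivative.

```python
import math
import random

BEACON_X = 0.0  # beacon position along the track (m), assumed
BEACON_H = 3.0  # beacon height above the track (m), assumed

def range_model(x):
    """Nonlinear measurement model h(x): straight-line range to the beacon."""
    return math.hypot(x - BEACON_X, BEACON_H)

def range_jacobian(x):
    """dh/dx, used to linearize the measurement model around an estimate."""
    return (x - BEACON_X) / range_model(x)

def ekf_step(x, p, u, dt, z, q, r):
    # Predict: the motion model x + u*dt is already linear.
    x_pred = x + u * dt
    p_pred = p + q
    # Correct: linearize h around the predicted state.
    H = range_jacobian(x_pred)
    s = H * p_pred * H + r              # innovation variance
    k = p_pred * H / s                  # Kalman gain
    x_new = x_pred + k * (z - range_model(x_pred))
    p_new = (1.0 - k * H) * p_pred
    return x_new, p_new

random.seed(1)
true_x, x_est, p = 5.0, 4.0, 1.0        # initial estimate is 1 m off
for _ in range(100):
    true_x += 0.5 * 0.1                 # robot moves at 0.5 m/s, dt = 0.1 s
    z = range_model(true_x) + random.gauss(0.0, 0.1)  # range noise 0.1 m (assumed)
    x_est, p = ekf_step(x_est, p, u=0.5, dt=0.1, z=z, q=0.001, r=0.01)

print(f"true {true_x:.2f} m, EKF estimate {x_est:.2f} m")
```

Because the range is symmetric about the beacon, positions on either side of it produce the same measurement; the EKF lands on the right answer only because its initial estimate starts on the correct side, which illustrates the limits of tracking a single Gaussian estimate.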

Particle Filtering

Particle filtering is another widely used technique in robotics, especially for localization and mapping; applied to robot localization, it is known as Monte Carlo Localization (MCL). It uses a large number of “particles” (samples) to represent possible states of the system, with each particle representing one hypothesis of the robot’s location. As the robot moves, the particles are shifted according to its motion, weighted by how well they explain the latest sensor data, and resampled, so that over time they concentrate around the robot’s actual state.
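A minimal Python sketch of this predict, weight, and resample cycle is shown below. It localizes a robot in a one-dimensional corridor using a range sensor aimed at the end wall, starting from particles spread uniformly along the corridor because the initial position is unknown. The corridor map, noise levels, and particle count are assumptions.

```python
import math
import random

CORRIDOR_LEN = 10.0   # end wall at x = 10 m (assumed map)
MOTION_STD = 0.05     # odometry noise per step in meters (assumed)
SENSOR_STD = 0.2      # range sensor noise in meters (assumed)

def expected_range(x):
    """Range the sensor should report from position x to the end wall."""
    return CORRIDOR_LEN - x

def particle_filter_step(particles, u, z):
    # 1. Predict: move each particle by the odometry reading plus sampled noise.
    moved = [p + u + random.gauss(0.0, MOTION_STD) for p in particles]
    # 2. Weight: Gaussian likelihood of the measurement under each hypothesis.
    weights = [math.exp(-0.5 * ((z - expected_range(p)) / SENSOR_STD) ** 2)
               for p in moved]
    if sum(weights) == 0.0:
        weights = [1.0] * len(moved)   # every weight underflowed; keep all equally
    # 3. Resample: draw particles in proportion to their weights.
    return random.choices(moved, weights=weights, k=len(moved))

random.seed(0)
true_x = 1.0
particles = [random.uniform(0.0, CORRIDOR_LEN) for _ in range(500)]  # unknown start
for _ in range(20):
    true_x += 0.2                                            # robot moves 0.2 m
    z = expected_range(true_x) + random.gauss(0.0, SENSOR_STD)
    particles = particle_filter_step(particles, u=0.2, z=z)

estimate = sum(particles) / len(particles)
print(f"true {true_x:.2f} m, estimate {estimate:.2f} m")
```

Starting with no knowledge of the robot's position is straightforward here, because the uniform particle cloud represents that uncertainty directly; a single Kalman filter would need an initial Gaussian guess instead.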

Particle filters are useful when the robot’s motion is highly nonlinear, when noise is non-Gaussian, or when sensor measurements are unreliable. For example, with poor GPS signals or in highly dynamic environments, particle filters can keep several competing hypotheses about the robot’s position alive at once, handling situations that a Kalman filter, which tracks a single Gaussian estimate, cannot.

By using a set of particles to approximate the robot’s position, the system can account for more uncertainty and provide more robust results in challenging conditions. However, particle filtering can be computationally expensive, especially in large-scale environments with numerous sensors.

Data Association and Sensor Alignment

Data association refers to the process of matching sensor measurements with objects in the environment. This is particularly important in applications like simultaneous localization and mapping (SLAM), where the robot needs to identify and track objects from multiple sensors simultaneously.

One common technique for data association is nearest neighbor matching, where the system compares incoming sensor data with previously observed data to find the best match. A more advanced approach is Joint Compatibility Branch and Bound (JCBB), which evaluates sets of candidate matches together and uses a statistical test to check that all pairings in a set are jointly consistent, rather than validating each match in isolation.
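The Python sketch below shows greedy nearest neighbor association with a distance gate: candidate pairs closer than the gate are accepted in order of distance, and each landmark is used at most once. It uses Euclidean distance for simplicity; practical systems usually use the Mahalanobis distance so that the gate reflects each estimate's uncertainty. JCBB is not shown, and the landmark and measurement coordinates are hypothetical.

```python
import math

def nearest_neighbor_associate(measurements, landmarks, gate):
    """Greedy nearest neighbor data association with a distance gate.

    measurements, landmarks: lists of (x, y) points in the same frame.
    gate: largest distance (m) at which a pairing is considered plausible.
    Returns {measurement_index: landmark_index}. Unmatched measurements are
    omitted; they may be new landmarks or clutter.
    """
    candidates = []
    for i, m in enumerate(measurements):
        for j, lm in enumerate(landmarks):
            d = math.dist(m, lm)
            if d <= gate:
                candidates.append((d, i, j))
    candidates.sort()                   # closest pairs first
    matches, used_landmarks = {}, set()
    for _, i, j in candidates:
        if i not in matches and j not in used_landmarks:
            matches[i] = j
            used_landmarks.add(j)
    return matches

landmarks = [(0.0, 0.0), (5.0, 0.0), (5.0, 5.0)]        # hypothetical map
measurements = [(5.2, 0.1), (0.1, -0.2), (9.0, 9.0)]    # hypothetical scan
print(nearest_neighbor_associate(measurements, landmarks, gate=0.5))
```

The third measurement falls outside every gate, so it is left unmatched and could be treated as a new landmark or as clutter. Because the matching is greedy, one early wrong pairing can block a correct one later, which is the kind of error JCBB is designed to avoid.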

Effective data association and sensor alignment are essential for accurate object detection and mapping. Incorrect associations can lead to significant errors in the robot’s understanding of its environment.

Sensor Fusion via Deep Learning

Deep learning methods, particularly convolutional neural networks (CNNs) and recurrent neural networks (RNNs), have gained popularity in recent years for multi-sensor fusion. These techniques can learn complex patterns in sensor data, allowing robots to combine data from different sensors in a way that loosely mimics human perception.

CNNs, for instance, are particularly useful when working with visual and spatial data. When applied to multi-sensor fusion, CNNs can take input from a combination of sensors such as cameras, LiDAR, and radar, and learn to extract features from them to produce a more comprehensive understanding of the environment.


RNNs, on the other hand, are well-suited for processing time-series data, such as sensor readings over time, and can be used to predict the robot’s movement or the dynamic state of the environment. The advantage of using deep learning for sensor fusion is that it can automatically learn the best ways to combine data from multiple sensors without the need for manually designed models.
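To make the data flow concrete, the dependency-free Python sketch below runs the forward pass of a tiny feature-level fusion network: each sensor's features pass through their own encoder, the resulting embeddings are concatenated, and a shared head produces class probabilities. It is a structural illustration only. The single dense layers stand in for the CNN or RNN encoders described above, the weights are random rather than trained, and the feature sizes and three output classes are assumptions. In practice, such a model would be built and trained with a deep learning framework.

```python
import math
import random

def dense(x, layer):
    """Fully connected layer: returns W x + b."""
    W, b = layer
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def relu(v):
    return [max(0.0, a) for a in v]

def softmax(v):
    m = max(v)                        # subtract the max for numerical stability
    exps = [math.exp(a - m) for a in v]
    total = sum(exps)
    return [e / total for e in exps]

def init_layer(n_in, n_out, rng):
    """Random, untrained weights (a real model would learn these)."""
    scale = 1.0 / math.sqrt(n_in)
    W = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
    return W, [0.0] * n_out

rng = random.Random(0)
camera_encoder = init_layer(8, 4, rng)  # stand-in for a learned image encoder
lidar_encoder = init_layer(6, 4, rng)   # stand-in for a learned LiDAR encoder
fusion_head = init_layer(8, 3, rng)     # 4 + 4 fused features -> 3 classes

def fused_forward(camera_features, lidar_features):
    cam_emb = relu(dense(camera_features, camera_encoder))
    lidar_emb = relu(dense(lidar_features, lidar_encoder))
    fused = cam_emb + lidar_emb         # list concatenation = feature-level fusion
    return softmax(dense(fused, fusion_head))

probs = fused_forward([0.5] * 8, [1.0] * 6)
print([round(p, 3) for p in probs])
```

During training, the encoders and the head would be optimized together, which is how the network learns for itself how much to rely on each sensor.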

Multi-View Geometry

Multi-view geometry combines data from different viewpoints (e.g., multiple cameras or LiDAR sensors) to create a more complete 3D map of the environment. This technique is essential in applications where depth perception is important, such as autonomous vehicles and industrial robots working in cluttered environments.

By combining data from multiple views, multi-view geometry algorithms can detect and track objects more reliably and can also be used to build accurate 3D models of the environment. These algorithms often rely on techniques like stereo vision (using two cameras) or structure from motion (SfM) to compute depth and create 3D reconstructions from multiple images or sensor readings.
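For a rectified stereo pair, depth follows directly from triangulation: Z = f·B/d, where f is the focal length in pixels, B is the baseline between the two cameras, and d is the disparity (the horizontal pixel shift of the same point between the two images). The Python sketch below uses an assumed 700 px focal length and 12 cm baseline.

```python
def stereo_depth(disparity_px, focal_px, baseline_m):
    """Depth (m) of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero disparity means infinite depth")
    return focal_px * baseline_m / disparity_px

FOCAL_PX = 700.0    # focal length in pixels (assumed)
BASELINE_M = 0.12   # distance between the two camera centers (assumed)

z = stereo_depth(21.0, FOCAL_PX, BASELINE_M)
print(f"disparity 21 px -> depth {z:.2f} m")

# A one-pixel disparity error matters more the farther away the point is.
for d in (20.0, 22.0):
    print(f"disparity {d:.0f} px -> depth {stereo_depth(d, FOCAL_PX, BASELINE_M):.2f} m")
```

At 4 m, a single pixel of disparity error shifts the depth by roughly 0.2 m, and because depth error grows with the square of distance, stereo accuracy drops quickly at longer range.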

The primary challenge of multi-view geometry is that it requires precise calibration of the sensors and the ability to handle large datasets efficiently. However, with the continued advancements in computer vision and machine learning, this technique is becoming more widely adopted in robotics.
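Calibration is what lets data from different sensors be expressed in a common frame. The Python sketch below projects a LiDAR point into a camera image using an extrinsic calibration (rotation R and translation t from the LiDAR frame to the camera frame) and pinhole intrinsics (fx, fy, cx, cy). The axis conventions, mounting offset, and intrinsic values are assumptions for illustration.

```python
def lidar_to_camera(p, R, t):
    """Extrinsic transform: p_cam = R * p_lidar + t (R is 3x3, t is 3x1)."""
    return [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]

def project_to_pixel(p_cam, fx, fy, cx, cy):
    """Pinhole projection to pixel coordinates (u, v); None if behind the camera."""
    x, y, z = p_cam
    if z <= 0.0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)

# Assumed conventions: LiDAR x forward, y left, z up;
# camera x right, y down, z forward (optical axis).
R = [[0.0, -1.0, 0.0],
     [0.0, 0.0, -1.0],
     [1.0, 0.0, 0.0]]
t = [0.0, 0.1, 0.0]   # LiDAR origin 10 cm below the camera (assumed mounting)

point_lidar = [5.0, 1.0, 0.0]   # 5 m ahead, 1 m to the left
p_cam = lidar_to_camera(point_lidar, R, t)
uv = project_to_pixel(p_cam, fx=700.0, fy=700.0, cx=640.0, cy=360.0)
print(uv)
```

With these intrinsics, a calibration error of just 1° in R shifts points near the image center by roughly 700 × tan(1°) ≈ 12 pixels, enough to attach LiDAR depth to the wrong object.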

Future Trends in Sensor Fusion for Robotics

Below are some key trends and future directions in sensor fusion for robotics.

Integration of Artificial Intelligence (AI) and Machine Learning

The integration of AI and machine learning with sensor fusion is one of the most promising trends in the field of robotics. As robots collect data from a variety of sensors, AI algorithms—especially deep learning models—will be used to analyze, interpret, and combine this data in more sophisticated ways.

AI can help improve sensor fusion by:

- Learning complex patterns: AI models can learn to identify and interpret complex sensor data patterns, such as recognizing objects or predicting robot behavior in dynamic environments.

- Real-time adaptation: Machine learning can enable robots to adapt in real-time to changes in the environment, improving decision-making even under uncertain or noisy conditions.


Miniaturization and Low-Cost Sensors

As sensors continue to get cheaper and smaller, we can expect to see robots equipped with an increasing number of small, affordable sensors. The result will be more compact, efficient, and cost-effective robotic systems that still offer highly accurate perception and decision-making through multi-sensor fusion.

5G and Cloud Integration

The rollout of 5G networks is expected to significantly improve sensor fusion capabilities, particularly for cloud-based robotics.

Key advantages of 5G and cloud integration include:

- Remote sensing and control: Robots equipped with a variety of sensors can transmit data faster to centralized cloud systems, where advanced fusion algorithms process it in real time.

- Data sharing between robots: Cloud-based sensor fusion will allow multiple robots to share and integrate data, improving situational awareness across fleets of autonomous robots.

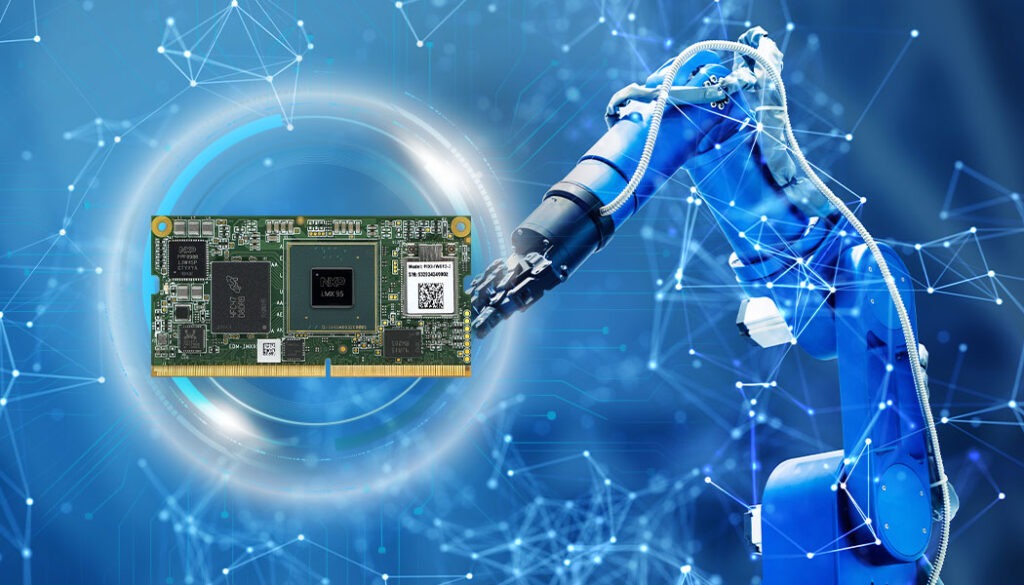

TechNexion’s Embedded Solutions and Sensor Fusion

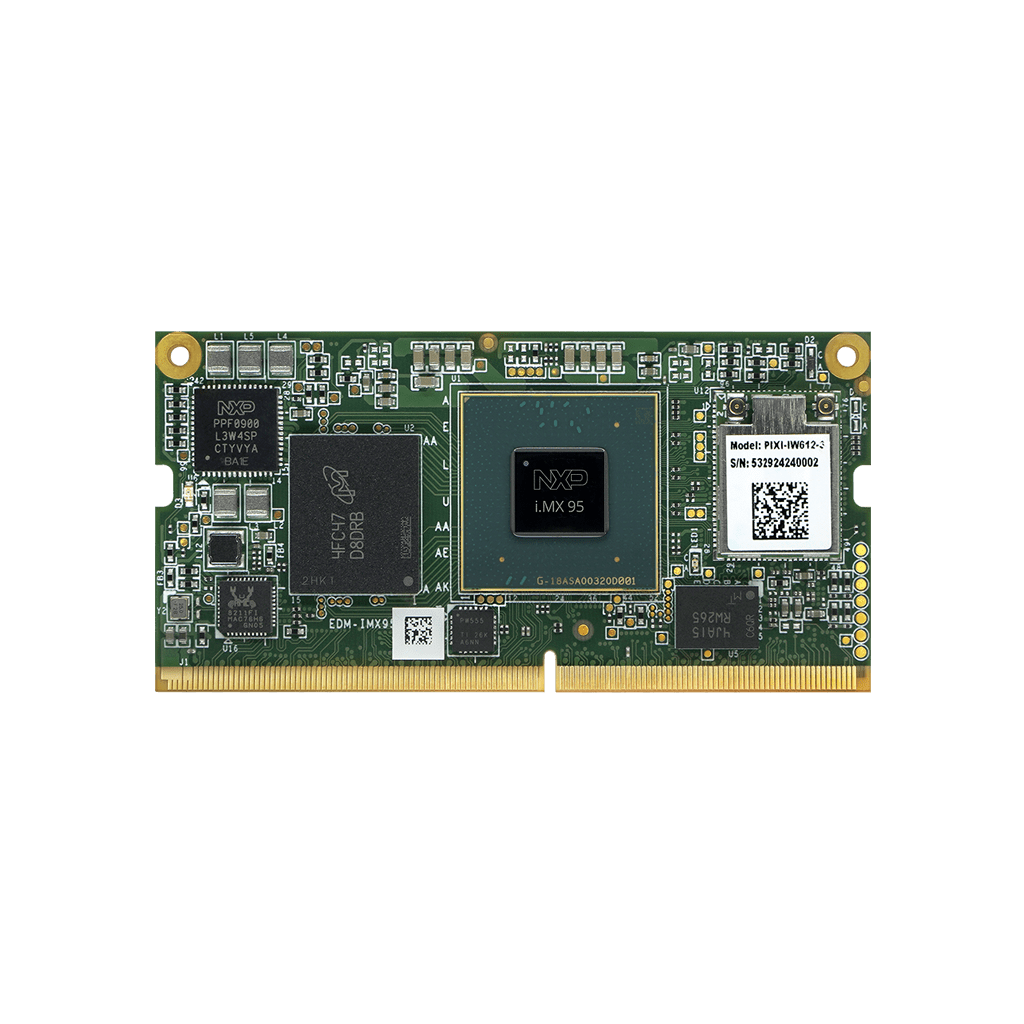

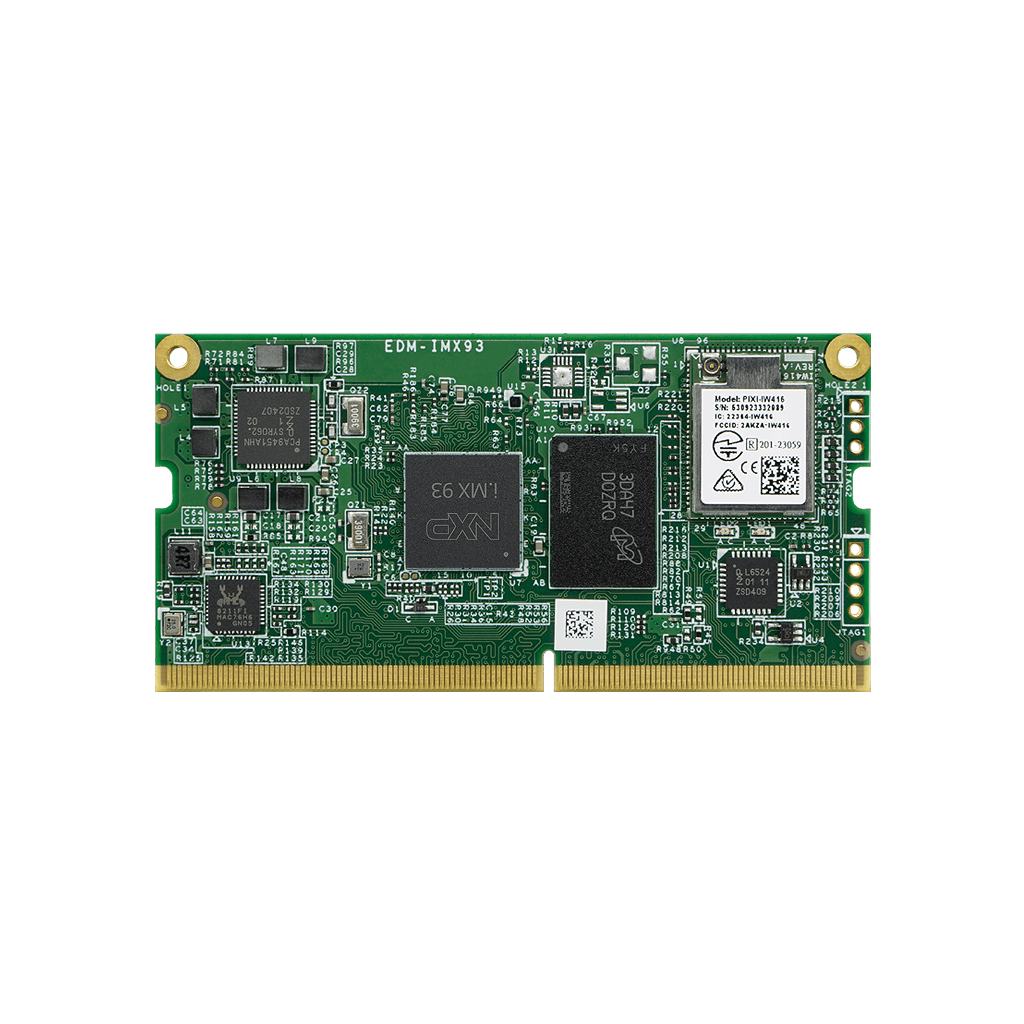

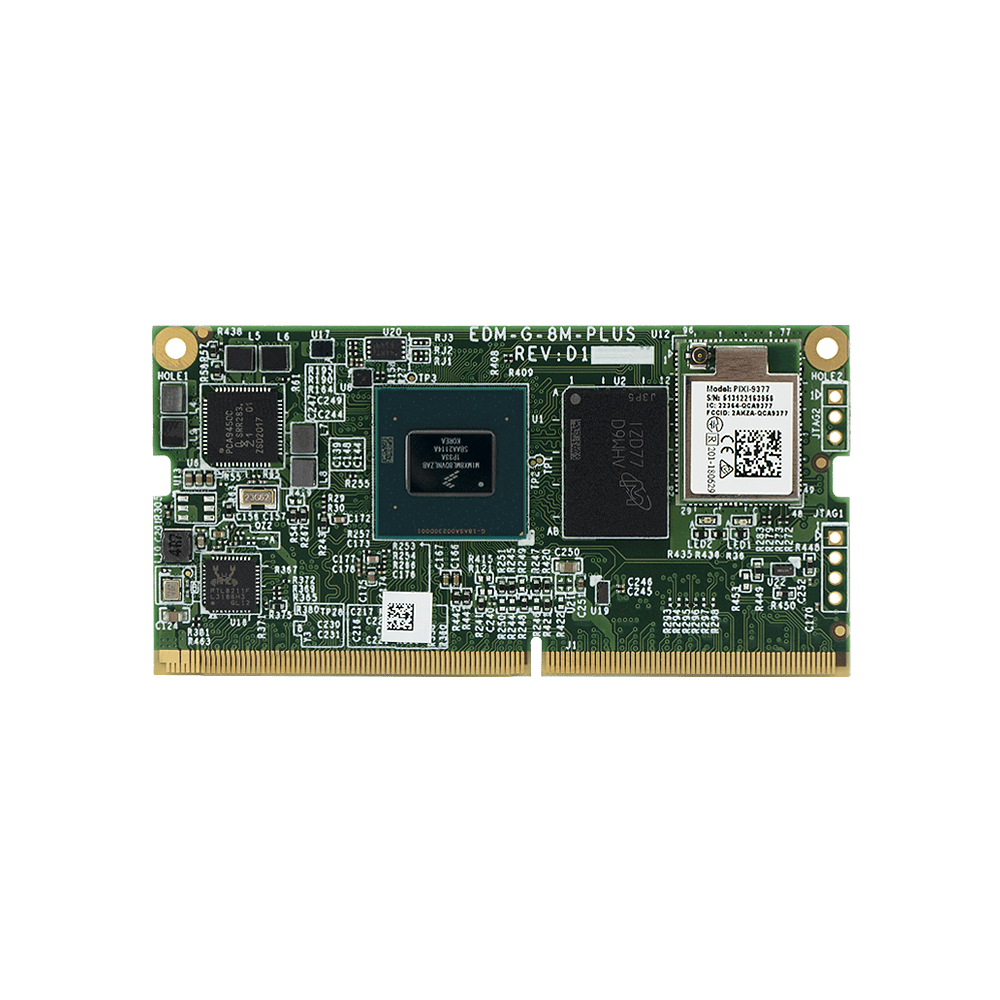

TechNexion’s embedded cameras can be integrated with external sensors such as GPS, LiDAR, or IMUs. In addition to embedded cameras, our system-on-modules, such as the EDM-IMX95, have enough processing power to handle data from multiple sensors, along with the I/O options that make integration easier. To learn more about how our embedded camera and processing solutions can elevate the performance of your robotic systems, talk to our experts today.
