The history of the photographic camera dates back to the early 19th century. The first permanent photograph was produced in 1827 by Joseph Nicéphore Niépce. Since then, camera technology has come a long way. From dry plates to photographic film and digital cameras, image quality has kept improving over the years.

Little has been written, however, about how camera technology has evolved with respect to embedded vision. This is mostly because embedded vision is still a relatively new idea. In the industrial and commercial space, machine vision dominated for almost two decades. But thanks to benefits such as compactness and the ability to process data at the edge, embedded vision is taking over many applications.

In this article, we will look at the evolution of camera technology from the 19th century through today’s embedded vision era.

The Early Days of Camera Technology

As mentioned, camera technology began to develop in the 19th century. Niépce’s partner, Louis-Jacques-Mandé Daguerre, continued his research in photography. Building on the heliographic process invented by Niépce, he developed a new process that he named the daguerreotype. Around the same time, English scientist William Henry Fox Talbot developed a competing process, which he called the calotype, and in 1839 published one of the earliest accounts of photography.

The next 50 years saw many variations of these two processes (the daguerreotype and the calotype), along with improvements in lenses. This period also saw the development of wet and dry plates. By the 1880s, film was being widely adopted as George Eastman began manufacturing it commercially, and in 1888 he put his first camera up for sale.

The early 20th century saw further developments in camera technology, such as Kodak’s introduction of the 135 film cartridge in 1934. Another revolution in this space was the rise of TLRs (Twin-lens Reflex Cameras) and SLRs (Single-lens Reflex Cameras) in the 1920s and 1930s.

The Era of Digital Cameras

One of the biggest revolutions (if not the biggest) in camera technology was the invention of the charge-coupled device (CCD), a semiconductor image sensor, by Willard S. Boyle and George E. Smith at Bell Labs in 1969. This paved the way for digital cameras. Later, in 1975, Steven Sasson, an engineer at Kodak, developed the first self-contained digital camera.

The 1990s brought many new advancements in the field, the most notable being the introduction of DSLR cameras. In the years that followed, digital cameras reached millions of consumers as companies like Canon and Nikon manufactured lightweight, compact models. Digital cameras became so mainstream that demand for film cameras dried up (we all know what happened to Kodak, which continued to stick with film).

CCD and CMOS Image Sensors

The first digital camera sensors were CCDs (Charge-Coupled Devices). Although the roots of CMOS (Complementary Metal-Oxide Semiconductor) sensors can be traced back to the 1960s, the CMOS active-pixel sensor was developed by Eric Fossum’s team at NASA’s Jet Propulsion Laboratory in 1993.

CCD and CMOS image sensors have coexisted since their invention. While CCD sensors have traditionally offered better sensitivity and image quality, many modern CMOS sensors are almost as good. Because CMOS sensors are also more compact and less expensive, they have been more widely adopted in mobile phones, machine vision, and embedded vision applications.

Mobile Phone Cameras

The history of camera technology would not be complete without discussing mobile phone cameras.

The first camera phone, launched in 1999, had a sensor of only 110,000 pixels. Today, smartphone cameras use CMOS sensors of 48 megapixels and more, roughly 440 times the resolution of that first camera phone.
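As a quick check on this comparison, the ratio between a 48-megapixel sensor and the first camera phone’s 110,000-pixel sensor works out to:

```latex
\frac{48 \times 10^{6}}{1.1 \times 10^{5}} = \frac{480}{1.1} \approx 436
```

In other words, a 48 MP sensor has a little over 400 times as many pixels.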

Use of Cameras in Machine Vision

As a company that has been offering processing solutions for embedded vision applications for two decades, TechNexion has been fortunate to observe how the space has evolved.

In the early days of machine vision, in the 1990s and early 2000s, CCD sensors were used for low-end applications such as surveillance and security.

After 2010, as high-quality, low-cost CMOS sensors became available, more sophisticated applications such as factory automation made extensive use of cameras, capturing images and videos to automate key manufacturing processes. This revolution was fuelled by the proliferation of smartphones, which brought rapid improvements in camera quality as well as integrated processing capabilities for vision applications.

We’ve been in the camera space for at most 2 years, not 20. However, we’ve been building embedded processing products that mate with cameras for over 2 decades.

Role of Computers in Enhancing Machine Vision Cameras

We all need to understand that cameras are not a standalone technology. Changes in this space depend on various other domains, computing being one of the most important.

Machine vision systems typically rely on PCs to process the image and video data captured by cameras. As resolution and frame rate increase, so does the amount of data that must be transmitted and processed every second. Hence, as camera sensors became more advanced, the supporting computing technology had to improve alongside them. This is one of the reasons why developments in camera systems are often backed by advances in computing.
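To make the link between resolution, frame rate, and data volume concrete, here is a back-of-the-envelope sketch of the raw (uncompressed) bandwidth a sensor produces: width × height × bits per pixel × frames per second. The 3840 × 2160 resolution and 12-bit RAW depth are illustrative assumptions, and the function name is hypothetical; real links also carry blanking intervals and protocol overhead that this estimate ignores.

```python
def raw_data_rate_gbps(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Uncompressed sensor output in gigabits per second.

    Assumption: payload only; blanking and interface protocol overhead are ignored.
    """
    bits_per_frame = width * height * bits_per_pixel
    return bits_per_frame * fps / 1e9


if __name__ == "__main__":
    # Hypothetical operating points: a 3840 x 2160 (8 MP) sensor streaming 12-bit RAW
    for fps in (30, 60):
        rate = raw_data_rate_gbps(3840, 2160, 12, fps)
        print(f"3840x2160 @ {fps} fps, 12-bit RAW: {rate:.2f} Gbit/s")
```

At these assumed settings the sensor emits roughly 3 Gbit/s at 30 fps and 6 Gbit/s at 60 fps; doubling either the frame rate or the pixel count doubles the data the host must receive and process.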

Modern machine vision systems that monitor fast-moving conveyor belts and manufacturing setups need high frame rates, and the short exposure times that come with them, to capture motion without blurring. This in turn demands a high-performance computer for image processing.
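Motion blur is governed by exposure time rather than frame rate directly, but frame rate caps the exposure: at N fps, each exposure can last at most 1/N seconds. The hypothetical sketch below estimates how many pixels a moving part smears across during one exposure, and the longest exposure that keeps blur within a chosen pixel budget. The belt speed, field of view, and pixel count in the example are made-up values for illustration, and the function names are not from any particular library.

```python
def motion_blur_px(speed_m_s: float, exposure_s: float, fov_m: float, pixels_across: int) -> float:
    """Distance an object travels during one exposure, expressed in image pixels."""
    metres_per_pixel = fov_m / pixels_across  # size of one pixel projected onto the scene
    return speed_m_s * exposure_s / metres_per_pixel


def max_exposure_s(speed_m_s: float, fov_m: float, pixels_across: int,
                   max_blur_px: float = 1.0) -> float:
    """Longest exposure that keeps motion blur at or below max_blur_px."""
    metres_per_pixel = fov_m / pixels_across
    return max_blur_px * metres_per_pixel / speed_m_s


if __name__ == "__main__":
    # Hypothetical setup: belt moving at 2 m/s, 0.5 m field of view imaged across 1920 pixels
    speed, fov, width = 2.0, 0.5, 1920
    print(f"Blur at 1 ms exposure: {motion_blur_px(speed, 0.001, fov, width):.2f} px")
    print(f"Max exposure for <= 1 px blur: {max_exposure_s(speed, fov, width) * 1e6:.0f} us")
```

With these assumed numbers, a 1 ms exposure smears a part across about 7.7 pixels, and the exposure must drop to roughly 130 µs to keep blur within one pixel. Short exposures like this usually go hand in hand with high frame rates and, in turn, with more data to process.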

Embedded Vision and Camera Technology

Even though embedded vision is a late entrant into the camera industry, its roots can be traced back to the early days of camera technology, since cameras form the foundational block of embedded vision systems. In this section, we will look at how embedded vision has evolved over the years and the role that camera technology and related technologies have played in its development.

Embedded Vision and Processors

The evolution of embedded cameras and processors is closely coupled. Since embedded vision systems process images and video on an on-device processor (rather than on an external PC), that processor must be powerful enough to serve the needs of modern embedded vision applications.

High-end platforms like NVIDIA Jetson AGX Orin offer up to 275 TOPS of AI performance. This has made it possible to deploy embedded cameras in even the most data-hungry applications, such as sports analytics, robotics, and smart traffic systems. Although most AI-based embedded vision devices can operate with 8 TOPS or less, high-performance processors let developers capture and process images and video without having to worry about processing capacity.
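A rough way to see why most devices get by with modest TOPS ratings is to multiply the work per inference by the number of inferences per second. The sketch below does exactly that. The per-frame cost (10 GOPs), stream count, frame rate, and 30% utilization factor are illustrative assumptions, and `required_tops` is a hypothetical helper: real models, runtimes, and accelerators vary widely, and vendor TOPS figures are peak ratings that are rarely sustained in practice.

```python
def required_tops(gops_per_inference: float, fps: float, streams: int, utilization: float) -> float:
    """Peak TOPS an accelerator would need to sustain the given vision workload.

    gops_per_inference: model cost per frame in giga-operations (assumed known)
    utilization: fraction of the peak rating actually achieved (assumption, 0 < u <= 1)
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    sustained_tops = gops_per_inference * fps * streams / 1000.0  # GOPS -> TOPS
    return sustained_tops / utilization


if __name__ == "__main__":
    # Illustrative workload: 4 cameras at 30 fps, ~10 GOPs per frame, ~30% of peak achieved
    print(f"Peak TOPS needed: {required_tops(10.0, 30.0, 4, 0.3):.1f}")
```

With these assumed numbers, four 30 fps streams need about 4 TOPS of peak capacity. Heavier models, more cameras, or higher frame rates scale the result linearly, which is where the headroom of high-end processors becomes useful.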

Advancements in Embedded Cameras

Because sensors are the most important component of an embedded camera, high-quality sensors from companies like onsemi and Sony are encouraging product developers to come up with new use cases. Some of the improvements made to modern sensors include:

- BSI (Back Side Illuminated) sensors

- NIR (Near InfraRed) sensors

- RGB IR sensors

- Low light sensors

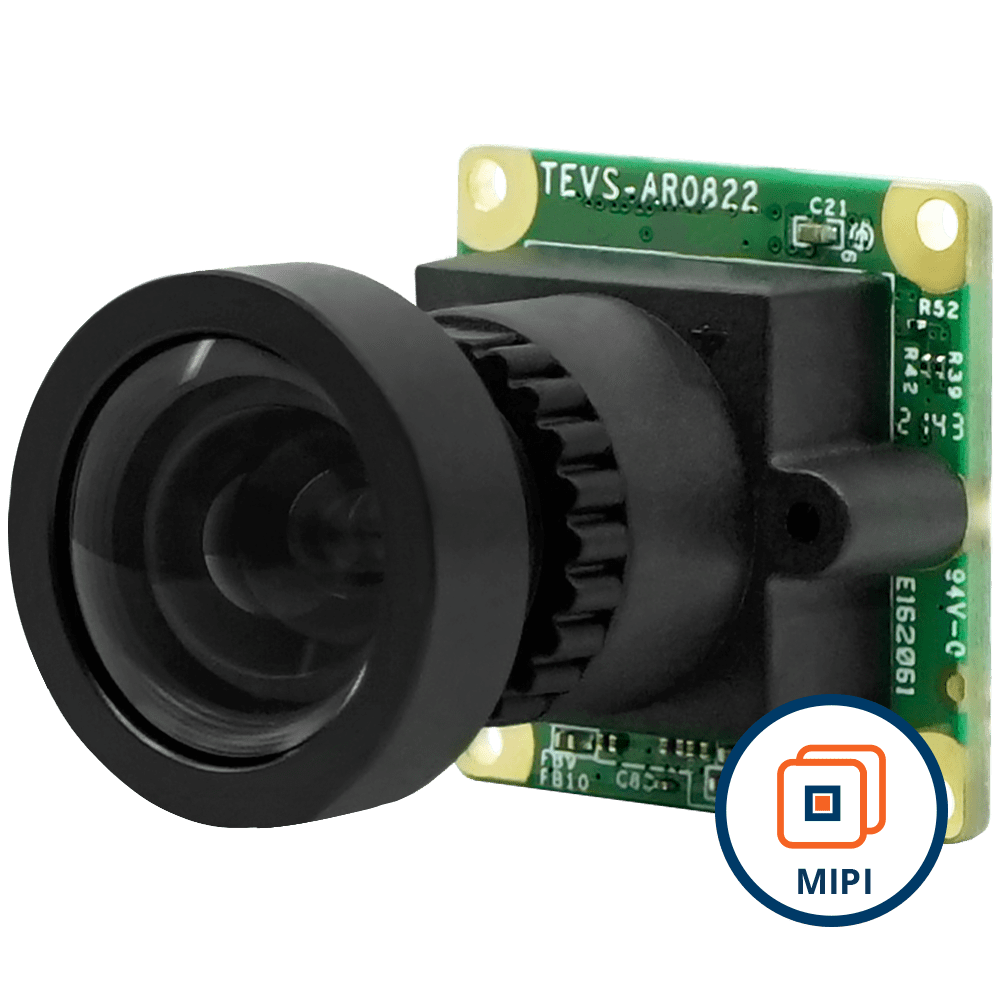

The AR0822 sensor from onsemi is an example of a high-resolution NIR sensor that also comes with excellent night vision capabilities.

Some of the other significant changes that led to the overall advancement of embedded cameras are:

- Improvements in lenses: A wider range of lens types is available, including wide-angle, autofocus, and fixed-focus lenses.

- Advancements in ISPs (Image Signal Processors): Onboard ISPs can retain the fidelity of the captured scene while offloading many signal processing tasks from the host processor.

- Developments in interfaces and cables: Camera interfaces like MIPI CSI-2 and USB 3.1 made high-bandwidth transmission possible, while FPD-Link and GMSL technologies transport low-latency, high-quality image data at high throughput over long distances (up to 15 meters).

- Changes in other camera accessories like IR-cut filters and illumination methods.
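One of the tasks an ISP typically takes off the host processor is automatic white balance. As an illustration only, the sketch below implements the classic gray-world algorithm, which assumes the scene averages to neutral gray and scales red and blue so their means match green. It is not a description of any specific ISP (commercial ISPs may use different and more elaborate methods, and usually apply gains to RAW Bayer data before demosaicing); the function names are hypothetical, and for simplicity it works on already-demosaiced RGB tuples in pure Python.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # (R, G, B)


def gray_world_gains(pixels: List[Pixel]) -> Tuple[float, float, float]:
    """Per-channel gains that equalize the R and B means to the G mean."""
    if not pixels:
        raise ValueError("need at least one pixel")
    n = len(pixels)
    r_avg = sum(p[0] for p in pixels) / n
    g_avg = sum(p[1] for p in pixels) / n
    b_avg = sum(p[2] for p in pixels) / n
    if r_avg == 0 or b_avg == 0:
        raise ValueError("channel average is zero; cannot compute gain")
    return (g_avg / r_avg, 1.0, g_avg / b_avg)  # green is the reference channel


def apply_gains(pixels: List[Pixel], gains: Tuple[float, float, float],
                max_value: float = 255.0) -> List[Pixel]:
    """Scale each channel by its gain, clipping at max_value (8-bit range assumed)."""
    gr, gg, gb = gains
    return [(min(r * gr, max_value), min(g * gg, max_value), min(b * gb, max_value))
            for r, g, b in pixels]


if __name__ == "__main__":
    # Tiny made-up "image" with a warm (reddish) cast
    image = [(200.0, 100.0, 50.0), (100.0, 100.0, 100.0)]
    gains = gray_world_gains(image)
    print("gains:", [round(g, 3) for g in gains])
    print("balanced:", apply_gains(image, gains))
```

Running this per frame on a host CPU is cheap for a toy image but adds up at 4K and high frame rates, which is one reason offloading such stages to an onboard ISP is attractive.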

Today, embedded vision is no longer just a buzzword. It finds applications in a wide variety of industries, such as medical, smart city, industrial, and agriculture, among others. With the advent of AI, ML-based models are deployed to analyze the images and videos captured by embedded cameras for object recognition, people counting, number plate recognition, facial recognition, cell and molecular analysis, and more.

In addition to these changes, camera engineers continue to optimize firmware and camera software so that embedded vision system manufacturers get the best out of the camera modules they use.

FPD-Link III Aluminium Enclosed Camera with onsemi AR0822 8MP 4K Rolling Shutter with Onboard ISP + IR-Cut Filter with C Mount Body

VLS-FPD3-AR0822-CB

- onsemi AR0822 8MP Rolling Shutter Sensor

- 4K HDR Imaging Capabilities

- Near Infra-Red Enhancement for Outdoor Applications

- Designed for Low Light Applications

- C-Mount for Interchangeable Lenses

- FAKRA Z-Code Automotive Connector

- Plug & Play with Linux OS & Yocto

- VizionViewer™ configuration utility

- VizionSDK for custom development

| Specification | Value |
|---|---|
| Sensor | onsemi AR0822 |
| Shutter | Rolling |
| Megapixels | 8MP |
| Chromaticity | Color, Monochrome |
| Interface | FPD-Link III |

The Future of Camera Technology and Embedded Vision

The future of camera technology in embedded vision is bright.

Sensors are getting better every day. Newer interface standards are being developed (we didn’t have an FPD-Link III or GMSL3 15 years ago). Lenses are becoming more efficient. ISPs are improving in terms of their ability to process color and HDR images at higher resolutions and frame rates.

These changes happen over the natural course of time.

What is the one thing that is going to revolutionize the way we take images and videos in industrial and commercial settings?

Artificial intelligence.

But isn’t the AI revolution already here?

In a way, yes. Many companies are leveraging AI-based image and video analysis to derive actionable insights from the data cameras capture. Examples of embedded vision applications where AI-based processing is used include:

- Robotics

- Sports analytics

- Smart traffic monitoring

- Autonomous shopping

- Automated industrial vehicles (tractors, forklifts, mining vehicles)

- Patient monitoring

However, in embedded vision, AI is still in its early days. It hasn’t yet become mainstream the way ChatGPT has. At the same time, AI adoption is growing fast. Here are some use cases where AI is likely to find its way in the future (or where it is currently in the experimentation phase):

- Blood cell analysis and related diagnosis.

- Image-guided eye diagnosis.

- Animal husbandry (similar to how AI is used in vegetation and crop monitoring today).

- Insurance and driver safety (using a combination of telematics and in-vehicle camera surveillance) in ADAS (Advanced Driver Assistance Systems).

Beyond new applications, as AI algorithms become more accurate and capable, embedded vision systems like robots, drones, and smart traffic devices will become more advanced. They will be able to solve complex problems that are out of reach today. One example is accurately performing facial recognition of passengers in a moving vehicle, which remains very challenging.

TechNexion: Pioneering Embedded Vision for Two Decades

TechNexion has been in the embedded vision space for 20 years now, with solutions spanning 4K cameras, NIR cameras, global shutter cameras, and more, supporting interfaces such as USB 3.0, MIPI CSI-2, and FPD-Link III. Explore our embedded vision solutions to learn more.
