In 1966, the Stanford Research Institute’s Shakey the Robot became the first mobile robot to use a single-lens TV camera to perceive its surroundings. This rudimentary system allowed Shakey to “see” its environment, identify basic shapes, and navigate simple spaces. It marked a breakthrough in early robotics, even though the system could interpret only very simple surroundings.

Fast forward to today, and we have robots like Boston Dynamics’ Atlas, equipped with advanced multi-camera vision and sensor-fusion systems that enable dynamic object tracking, real-time obstacle avoidance, and intricate human-like movements.

Vision is vital for robotic autonomy, enabling machines to navigate complex environments, identify objects, and interact with precision. It forms the foundation for critical functions like obstacle avoidance, task execution, and spatial awareness. Advanced vision systems enhance a robot’s ability to make informed decisions, adapt to dynamic conditions, and perform tasks with greater accuracy and efficiency.

This blog post takes a look at the evolution of camera technology in robotics, from simple sensors to sophisticated multi-camera systems.

Monocular Cameras: The Foundation of Robotic Vision

Monocular cameras are single-lens cameras that capture 2D images, serving as the initial building blocks of robotic vision. These cameras mimic human sight in capturing visual data but lack depth perception, offering only flat representations of the environment. Despite this limitation, they provide essential visual input that robots can analyze for various tasks.

Applications of Monocular Cameras

Early robots equipped with monocular cameras relied on their simple yet effective capabilities for tasks like:

- Object Recognition: Identifying and classifying objects in controlled environments.

- Simple Navigation: Using visual cues to follow paths or avoid obstacles.

- Barcode Reading: Scanning and interpreting barcodes or QR codes in manufacturing and logistics settings.

These applications demonstrated the utility of monocular cameras in streamlining industrial processes, albeit in restricted scenarios.

Limitations

Despite their contributions, monocular cameras had notable drawbacks:

- No Depth Perception: A single 2D image does not directly encode distance, making spatial understanding challenging.

- Reliance on Estimation Methods: Techniques like Structure from Motion (SfM) were required to infer 3D information from 2D images, adding computational complexity and limiting real-time performance.
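To see why a single camera cannot recover depth on its own, consider the standard pinhole camera model. The minimal Python sketch below assumes hypothetical intrinsics (a 600-pixel focal length and a principal point at (320, 240)) and projects two points that lie on the same ray at different depths: both land on the same pixel. It also shows one simple monocular cue: if an object's real-world size is known, its distance can be estimated from its size in the image. SfM takes a different route, estimating 3D structure from images taken at several camera positions, which is where its extra computation comes from.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def project(point: Vec3, f: float, cx: float, cy: float) -> Tuple[float, float]:
    """Pinhole projection of a camera-frame point (X, Y, Z) onto pixel coordinates (u, v)."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (Z > 0)")
    return (f * x / z + cx, f * y / z + cy)


def depth_from_known_height(f: float, real_height_m: float, pixel_height: float) -> float:
    """Similar triangles: an object of known height H spanning h pixels lies at Z = f * H / h."""
    return f * real_height_m / pixel_height


# Hypothetical intrinsics, in pixels
F, CX, CY = 600.0, 320.0, 240.0

near = (0.5, 0.25, 2.0)
far = (1.0, 0.5, 4.0)  # the same ray, twice as far away

print(project(near, F, CX, CY))  # (470.0, 315.0)
print(project(far, F, CX, CY))   # (470.0, 315.0): the image alone cannot tell them apart

# A 1.8 m tall person spanning 270 pixels would be about 4 m away
print(depth_from_known_height(F, 1.8, 270.0))
```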

While monocular cameras set the stage for robotic vision, their constraints highlighted the need for more advanced technologies capable of providing richer environmental understanding.

Stereo Vision: Introducing Depth Perception

[Image: Autonomous robots in a retail warehouse]

Stereo vision revolutionized robotic vision by introducing depth perception. Using two cameras mounted a short, fixed distance apart (the baseline), this technology mimics human binocular vision. It calculates depth from disparity, the difference in an object's position between the two images: nearby objects shift more between the two views than distant ones. The result is a 3D representation of the environment that allows robots to perceive spatial relationships effectively.
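For a rectified stereo pair (images warped so that matching points lie on the same row), depth follows from similar triangles: Z = f * B / d, where f is the focal length in pixels, B the baseline in meters, and d the disparity in pixels. The short Python sketch below applies this relation to a hypothetical rig with a 700-pixel focal length and a 12 cm baseline. It also estimates how a one-pixel disparity error turns into depth error (roughly Z² / (f * B) per pixel), which is why stereo accuracy falls off with distance.

```python
def disparity_to_depth(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth Z = f * B / d for a rectified stereo pair."""
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity; negative values are invalid
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(depth_m: float, focal_px: float, baseline_m: float, disparity_err_px: float = 1.0) -> float:
    """First-order depth uncertainty: |dZ| ~= Z^2 / (f * B) * |dd|."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


FOCAL_PX = 700.0    # hypothetical focal length in pixels
BASELINE_M = 0.12   # hypothetical 12 cm baseline

for d in (84.0, 42.0, 21.0):
    z = disparity_to_depth(d, FOCAL_PX, BASELINE_M)
    err = depth_error(z, FOCAL_PX, BASELINE_M)
    print(f"disparity {d:4.0f} px -> depth {z:.2f} m (+/- {err:.3f} m per px of error)")
```

Halving the disparity doubles the estimated depth but roughly quadruples the depth error, so a wider baseline or a longer focal length is the usual lever for ranging farther.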

Note: Stereo cameras and 3D cameras both provide depth perception, but they do so in different ways. Stereo cameras use two lenses to capture 2D images and calculate depth through disparity, mimicking human vision. 3D cameras (explained later) combine RGB images with depth data from technologies like Time-of-Flight (ToF), structured light, or LiDAR, which measure depth more directly and are often more precise, particularly on textureless surfaces.

Applications of Stereo Vision

Stereo vision found widespread use in various robotic functions, including:

- Obstacle Avoidance: Robots can identify and navigate around physical barriers with precision.

- Robotic Grasping: Improved depth understanding aids in accurate manipulation of objects.

- 3D Modeling: Generating 3D maps or models of environments for tasks like inspection or surveillance.

Challenges

Despite its benefits, stereo vision faces certain limitations:

- Calibration Complexity: The two cameras must be precisely calibrated and aligned, and kept that way, for disparity to be calculated accurately, which is a technical challenge.

- Limitations in Adverse Conditions: Stereo vision struggles in low light and on surfaces that lack texture, because texture is what allows the same point to be found in both images and its disparity measured.
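The texture problem is easy to reproduce with block matching, one common way to find disparities. The Python sketch below is a deliberately simplified, single-scanline version that scores each candidate disparity with the sum of absolute differences (SAD) over a small window; the pixel values are made up. On a textured row exactly one disparity matches perfectly, while on a uniform row every candidate scores the same, so the match is ambiguous.

```python
from typing import List


def sad_costs(left: List[float], right: List[float], x: int, half_window: int, max_disp: int) -> List[float]:
    """SAD matching cost at left-image column x for each disparity 0..max_disp.

    A point at column x in the left image is assumed to appear at column x - d
    in the right image (rectified pair, one scanline only).
    """
    if x - half_window - max_disp < 0 or x + half_window >= len(left):
        raise ValueError("window or disparity range falls outside the scanline")
    costs = []
    for d in range(max_disp + 1):
        cost = 0.0
        for k in range(-half_window, half_window + 1):
            cost += abs(left[x + k] - right[x + k - d])
        costs.append(cost)
    return costs


def best_disparities(costs: List[float]) -> List[int]:
    """All disparities tied for the lowest cost; more than one means the match is ambiguous."""
    lowest = min(costs)
    return [d for d, c in enumerate(costs) if c == lowest]


# Textured scanline (made-up intensities); the right view is shifted by 3 pixels
textured_left = [12, 47, 3, 88, 25, 61, 9, 73, 34, 56, 18, 90, 41, 67, 5, 79, 29, 52, 14, 95]
textured_right = textured_left[3:] + [0, 0, 0]

# Uniform, textureless scanline: every pixel looks the same
flat = [100] * 20

print(best_disparities(sad_costs(textured_left, textured_right, x=10, half_window=2, max_disp=5)))  # [3]
print(best_disparities(sad_costs(flat, flat, x=10, half_window=2, max_disp=5)))  # [0, 1, 2, 3, 4, 5]
```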

While stereo vision addressed many gaps in robotic visual capability, these challenges prompted further advancements to overcome environmental and operational constraints.

3D Depth-sensing Cameras: A New Dimension

3D depth-sensing cameras have expanded robotic vision capabilities, allowing machines to perceive and interpret the world in three dimensions. These include RGB-D cameras, Time-of-Flight (ToF) cameras, and LiDAR, each designed to address different challenges in robotic perception.

RGB-D Cameras

RGB-D cameras pair a conventional RGB (color) camera with a depth sensor. This allows them to capture color and depth information simultaneously, providing valuable data for robotic perception.
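Combining the two data streams usually starts by back-projecting each depth pixel into 3D with the pinhole model and attaching the color found at the same pixel, producing a colored point cloud that mapping software can build on. The minimal Python sketch below assumes the depth image is already registered (aligned) to the color image, stores depth in meters with 0 marking a missing reading, and uses made-up intrinsics fx, fy, cx, cy; real cameras provide these values through their calibration data or SDK.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
ColoredPoint = Tuple[float, float, float, RGB]


def depth_to_colored_points(
    depth: List[List[float]],
    rgb: List[List[RGB]],
    fx: float, fy: float, cx: float, cy: float,
) -> List[ColoredPoint]:
    """Back-project a registered depth image into (X, Y, Z, color) points in the camera frame."""
    points: List[ColoredPoint] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:  # assumed convention: 0 marks a missing depth reading
                continue
            x = (u - cx) * z / fx  # invert u = fx * X / Z + cx
            y = (v - cy) * z / fy  # invert v = fy * Y / Z + cy
            points.append((x, y, z, rgb[v][u]))
    return points


# Tiny 2x2 example with hypothetical intrinsics
depth = [[1.0, 0.0],
         [2.0, 2.0]]
rgb = [[(255, 0, 0), (0, 255, 0)],
       [(0, 0, 255), (255, 255, 255)]]

cloud = depth_to_colored_points(depth, rgb, fx=100.0, fy=100.0, cx=0.5, cy=0.5)
for point in cloud:
    print(point)
```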

Advantages of RGB-D Cameras

- Cost-effective: Compared with using separate cameras to capture RGB and depth data, RGB-D cameras are relatively affordable and accessible.

- Compactness: Because the RGB and depth sensors are integrated into a single unit, RGB-D cameras tend to be more compact than a setup of separate cameras.

- Ease of Integration: RGB-D cameras typically ship with software that aligns the RGB and depth data, which reduces integration effort.

Applications of RGB-D Cameras

- Robotic Mapping: Robots use RGB-D cameras to create detailed 3D maps of environments for navigation. For example, robotic vacuums map rooms to optimize cleaning paths.

- Motion Tracking: These cameras track human movements in real time, a capability central to motion-sensing devices like Microsoft’s Kinect and to robotic systems that engage with humans.

- Human-robot Interaction: They facilitate gesture recognition, enabling robots to interpret and respond to non-verbal commands.

Time-of-Flight (ToF) Cameras

ToF cameras work on the principle of light travel time: they measure the distance to an object by calculating how long emitted light takes to bounce off its surface and return to the sensor. Because they supply their own illumination, ToF cameras can sense depth accurately in a range of lighting conditions, and some are used outdoors as well, although strong sunlight can degrade their performance.
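The underlying arithmetic is short. Light covers the camera-to-object distance twice, so d = c * t / 2. Many ToF cameras measure the round trip indirectly, from the phase shift (phase) of amplitude-modulated light at frequency f_mod, giving d = c * phase / (4 * pi * f_mod); because the phase wraps around at 2 * pi, the unambiguous range is c / (2 * f_mod). The Python sketch below implements both relations; the 10 ns round trip and the 20 MHz modulation frequency are illustrative values, not specifications of any particular camera.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def direct_tof_distance(round_trip_s: float) -> float:
    """Direct ToF: the pulse travels out and back, so halve the total path."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def indirect_tof_distance(phase_rad: float, mod_freq_hz: float) -> float:
    """Indirect (continuous-wave) ToF: d = c * phase / (4 * pi * f_mod)."""
    return SPEED_OF_LIGHT_M_S * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Beyond c / (2 * f_mod) the phase wraps around and distances alias."""
    return SPEED_OF_LIGHT_M_S / (2.0 * mod_freq_hz)


print(f"{direct_tof_distance(10e-9):.3f} m")                # 10 ns round trip
print(f"{unambiguous_range(20e6):.3f} m")                   # 20 MHz modulation
print(f"{indirect_tof_distance(math.pi / 2, 20e6):.3f} m")  # quarter-cycle phase shift
```

The timescales are tiny: a 1 mm change in distance alters the round trip by only about 6.7 picoseconds, one reason many ToF cameras measure phase rather than timing pulses directly.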

Advantages of ToF Cameras

- High Precision: ToF cameras offer highly accurate depth measurements, even in real-time scenarios.

- Lighting Flexibility: They perform well across various lighting conditions, including dim or artificial light.

Applications of ToF Cameras

- Autonomous Vehicles: ToF cameras enhance vehicle perception, supporting obstacle detection and path planning in self-driving cars.

- Industrial Robotics: These cameras improve efficiency in applications like object sorting and precision assembly by providing accurate spatial data.

- Security Systems: ToF technology enhances facial recognition systems by adding depth information to traditional 2D imaging.

LiDAR

LiDAR (Light Detection and Ranging) determines range by aiming a laser at an object or surface and measuring how long the reflected light takes to return to the receiver. LiDAR systems are highly accurate and can create detailed, high-resolution maps. One of LiDAR’s main advantages is its ability to operate in low light, because it provides its own light source. This makes it well suited to outdoor or nighttime environments.
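A scanning LiDAR typically reports a sequence of ranges, each taken at a known beam angle, so turning one 2D sweep into points a robot can map with is a polar-to-Cartesian conversion. In the Python sketch below, the parameter names are loosely modeled on the fields of ROS’s sensor_msgs/LaserScan message (angle_min, angle_increment, range_min, range_max); the scan values are invented, and readings that are out of range, infinite, or NaN are discarded.

```python
import math
from typing import List, Tuple


def scan_to_points(
    ranges: List[float],
    angle_min: float,
    angle_increment: float,
    range_min: float,
    range_max: float,
) -> List[Tuple[float, float]]:
    """Convert one 2D LiDAR sweep from (range, beam angle) to (x, y) in the sensor frame."""
    points = []
    for i, r in enumerate(ranges):
        # Comparisons with NaN are always False, so NaN readings are dropped here too
        if not (range_min <= r <= range_max):
            continue
        theta = angle_min + i * angle_increment
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


# Four beams from -90 to +180 degrees in 90-degree steps (invented readings)
scan = [1.0, float("inf"), 2.0, 0.01]
points = scan_to_points(scan, angle_min=-math.pi / 2, angle_increment=math.pi / 2,
                        range_min=0.05, range_max=30.0)
for x, y in points:
    print(f"({x:.2f}, {y:.2f})")
```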

Advantages of LiDAR

- Exceptional Accuracy: LiDAR produces detailed and precise 3D maps, making it a benchmark in depth sensing.

- Long-range Capabilities: It can capture data from hundreds of meters away, even in low-light conditions.

- Robustness: Because it is largely unaffected by ambient light, LiDAR performs well in conditions ranging from darkness to bright sunlight, although dense fog, heavy rain, or snow can reduce its range.

Applications of LiDAR

- Outdoor Robotics: Robots deployed in rugged terrains, such as mining or agricultural equipment, use LiDAR for navigation and task execution.

- Autonomous Navigation: LiDAR is a cornerstone technology in self-driving cars, helping them detect and respond to obstacles, pedestrians, and road signs.

- Surveying and Inspection: The construction industry leverages LiDAR for land mapping and infrastructure analysis, ensuring accuracy in large-scale projects.

3D depth-sensing cameras have transformed robotic vision. They provide robots with the ability to perceive and interact with their environment in three dimensions. These cameras paved the way for advancements in navigation, manipulation, and collaboration.

Event-based Cameras: Faster and More Efficient Vision

Event-based cameras represent a leap forward in vision technology, offering a radically different approach to capturing dynamic environments. Unlike traditional cameras, which capture entire frames at a fixed rate, event-based cameras detect and record changes in the scene as they happen. This method of imaging, often referred to as neuromorphic vision, is inspired by the way biological eyes process visual information, making it particularly well suited to high-speed robotic systems.

How They Work

Event-based cameras do not rely on frame-based image acquisition. Instead, each pixel operates independently and asynchronously, monitoring changes in light intensity (typically on a logarithmic scale). When a pixel detects a sufficiently large change, such as one caused by motion or a sudden variation in brightness, it triggers an event that records the pixel’s location, the time of the change, and whether the pixel became brighter or darker. The result is a continuous stream of events timestamped with microsecond precision, allowing real-time processing of dynamic scenes.
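The pixel behavior described above can be approximated in software by replaying ordinary video frames. The Python sketch below is a simplified model, not how any particular sensor is built: each pixel remembers the log intensity at its last event and emits a +1 (brighter) or -1 (darker) event every time the current log intensity moves a fixed contrast threshold away from it. The 0.2 threshold and the tiny frames are illustrative assumptions, and because the input is frames, timestamps are quantized to frame times rather than truly asynchronous.

```python
import math
from typing import List, NamedTuple


class Event(NamedTuple):
    x: int
    y: int
    t_us: int
    polarity: int  # +1 = brighter, -1 = darker


def frames_to_events(
    frames: List[List[List[float]]],
    timestamps_us: List[int],
    threshold: float = 0.2,
    eps: float = 1e-3,
) -> List[Event]:
    """Emit an event each time a pixel's log intensity moves `threshold` away from its reference."""
    # Per-pixel reference level: log intensity at the pixel's most recent event
    ref = [[math.log(p + eps) for p in row] for row in frames[0]]
    events: List[Event] = []
    for frame, t in zip(frames[1:], timestamps_us[1:]):
        for y, row in enumerate(frame):
            for x, p in enumerate(row):
                log_i = math.log(p + eps)
                # A large change produces several events, one per threshold crossing
                while abs(log_i - ref[y][x]) >= threshold:
                    polarity = 1 if log_i > ref[y][x] else -1
                    events.append(Event(x, y, t, polarity))
                    ref[y][x] += polarity * threshold
    return events


# 2x2 scene: only the bottom-right pixel changes (its brightness doubles), then nothing moves
frames = [
    [[100.0, 100.0], [100.0, 100.0]],
    [[100.0, 100.0], [100.0, 200.0]],
    [[100.0, 100.0], [100.0, 200.0]],
]
events = frames_to_events(frames, timestamps_us=[0, 1000, 2000])
print(events)
```

Static pixels and the unchanged third frame produce no output at all, which is the source of the data savings event-based cameras are known for.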

Advantages of Event-based Cameras

- High Temporal Resolution: Traditional cameras operate at fixed frame rates (e.g., 30 FPS, 60 FPS), but event-based cameras respond to changes on the microsecond scale, capturing extremely fast motions that would otherwise be missed.

- Low Power Consumption: Since event-based cameras only report changes in the scene, they can use significantly less power than conventional cameras, which continuously read out full frames.

- Reduced Data Redundancy: Because they capture only changes in a scene rather than complete frames, event-based systems reduce the amount of data that must be transmitted or processed, which improves efficiency in high-speed environments.

Applications of Event-based Cameras

- High-Speed Robots: They are especially useful in tasks requiring high-speed object detection or manipulation, such as in industrial robotics or assembly lines.

- Drones: For drones flying at high speeds or navigating through complex, obstacle-laden environments, event-based cameras provide the ability to detect and avoid obstacles quickly and efficiently.

- Fast-Reaction Applications: Event-based cameras are employed in sports analytics, scientific research, and military applications, where the ability to capture rapid movements or events in high detail is crucial.

Event-based cameras offer a revolutionary approach to robotic vision. They provide high-speed systems with the ability to process real-time changes efficiently and accurately.

Multi-Camera and Multi-Sensor Fusion

The evolution of robotics relies not only on individual camera systems but also on the ability to combine multiple cameras and sensors for enhanced perception. Multi-camera systems and sensor fusion technologies allow robots to process diverse data types and make better-informed decisions in dynamic environments.

Multi-Camera Systems: Enhancing Perception

Multi-camera systems integrate different types of cameras, such as monocular and stereo, to improve both visual coverage and depth perception. Monocular cameras capture broad 2D visual information, while stereo cameras, by comparing images from two lenses, calculate depth, enabling robots to understand distances and navigate with precision. Combining these systems provides enhanced object detection, obstacle avoidance, and even tasks like robotic grasping.

Sensor Fusion: Integrating LiDAR, Radar, and Infrared

While cameras provide valuable visual data, they are often limited by factors like lighting and visibility. Sensor fusion combines data from cameras with other sensors, such as LiDAR, radar, and infrared. By fusing data from multiple sensors, robots can have a more comprehensive understanding of their surroundings, making them better equipped to navigate complex environments and perform tasks with greater accuracy.
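One of the simplest fusion rules combines two independent measurements of the same quantity by weighting each with the inverse of its variance, so the more reliable sensor counts for more; this is also the measurement-update step of a one-dimensional Kalman filter. The Python sketch below assumes both sensors already report the same distance, in the same frame and at the same time, with independent Gaussian errors; the camera and LiDAR readings and their variances are hypothetical.

```python
from typing import Tuple


def fuse(est_a: float, var_a: float, est_b: float, var_b: float) -> Tuple[float, float]:
    """Inverse-variance weighted average of two independent estimates, plus its variance."""
    if var_a <= 0 or var_b <= 0:
        raise ValueError("variances must be positive")
    w_a, w_b = 1.0 / var_a, 1.0 / var_b
    fused = (w_a * est_a + w_b * est_b) / (w_a + w_b)
    fused_var = 1.0 / (w_a + w_b)  # never larger than either input variance
    return fused, fused_var


# Hypothetical readings of the distance to the same obstacle
camera_m, camera_var = 10.4, 0.25   # camera depth estimate: noisier (std 0.5 m)
lidar_m, lidar_var = 10.0, 0.01     # LiDAR range: more precise (std 0.1 m)

distance, variance = fuse(camera_m, camera_var, lidar_m, lidar_var)
print(f"fused distance {distance:.3f} m, std {variance ** 0.5:.3f} m")
```

The fused value sits close to the LiDAR reading yet is slightly more certain than either sensor alone. Real systems generally go further, using full Kalman or similar filters that also model motion and correlated errors.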

Applications

The fusion of multiple cameras and sensors is essential in applications like autonomous vehicles, where multi-camera systems enable the vehicle to perceive its surroundings accurately, even in complex environments. In industrial robotics, it allows robots to perform tasks with greater precision, such as assembly or quality control. For agricultural robotics, sensor fusion helps optimize crop monitoring and harvesting.

These are just a few examples of the crucial role sensor fusion plays. In short, multi-camera and multi-sensor fusion significantly enhance robotic perception, improving performance and safety across many industries.

[Image: An agricultural robot using multiple cameras]

Emerging Technologies and Future Trends

As camera technology in robotics continues to evolve, several emerging technologies promise to enhance robotic vision in unprecedented ways.

Polarization Cameras

Polarization cameras measure the polarization of incoming light to capture images that reveal material properties and surface characteristics. These cameras are invaluable for tasks like material identification, where traditional cameras struggle, and underwater vision, where light scattering can impair visibility.
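Many polarization cameras place micro-polarizers oriented at 0°, 45°, 90°, and 135° over neighboring pixels. From those four intensities, the linear Stokes parameters give the degree of linear polarization (DoLP, how strongly polarized the light is) and the angle of linear polarization (AoLP), two quantities commonly used to tell surfaces and materials apart. The Python sketch below assumes the four intensities for one pixel are already available; the sample values follow Malus’s law for idealized light.

```python
import math
from typing import Tuple


def linear_polarization(i0: float, i45: float, i90: float, i135: float) -> Tuple[float, float]:
    """Return (DoLP, AoLP in radians) from intensities behind 0/45/90/135-degree polarizers."""
    s0 = (i0 + i45 + i90 + i135) / 2.0   # total intensity
    s1 = i0 - i90                         # 0-degree vs 90-degree preference
    s2 = i45 - i135                       # 45-degree vs 135-degree preference
    if s0 <= 0:
        return 0.0, 0.0
    dolp = math.hypot(s1, s2) / s0
    aolp = 0.5 * math.atan2(s2, s1)
    return dolp, aolp


# Idealized pixels (Malus's law: I = I_max * cos^2(angle difference))
print(linear_polarization(1.0, 0.5, 0.0, 0.5))  # fully polarized at 0 degrees
print(linear_polarization(0.5, 0.5, 0.5, 0.5))  # unpolarized light
print(linear_polarization(0.5, 1.0, 0.5, 0.0))  # fully polarized at 45 degrees
```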

Hyperspectral Cameras

Hyperspectral cameras capture many narrow, contiguous wavelength bands across the electromagnetic spectrum, often extending beyond the visible range. This gives robots detailed spectral information for precision tasks such as remote sensing, agricultural monitoring, and industrial quality control. Because materials reflect and absorb light differently at different wavelengths, these cameras provide insights into the composition and health of objects or environments, enabling robots to perform with remarkable accuracy.
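As a concrete example of extracting health information, vegetation reflects strongly in the near-infrared and absorbs red light, so the normalized difference vegetation index, NDVI = (NIR - Red) / (NIR + Red), is high for healthy plants and close to zero for bare soil. With a hyperspectral cube, the red and NIR values can be read from the bands closest to chosen wavelengths. The Python sketch below assumes a cube stored as cube[row][col][band] reflectances, six made-up bands, and roughly 670 nm and 800 nm as the red and NIR wavelengths; real hyperspectral data has far more bands and supports more specialized indices.

```python
from typing import List, Sequence

Cube = List[List[List[float]]]  # cube[row][col][band] = reflectance in [0, 1]


def nearest_band(wavelengths_nm: Sequence[float], target_nm: float) -> int:
    """Index of the band whose center wavelength is closest to target_nm."""
    return min(range(len(wavelengths_nm)), key=lambda i: abs(wavelengths_nm[i] - target_nm))


def ndvi_map(cube: Cube, wavelengths_nm: Sequence[float],
             red_nm: float = 670.0, nir_nm: float = 800.0) -> List[List[float]]:
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red) using the bands nearest red_nm and nir_nm."""
    r = nearest_band(wavelengths_nm, red_nm)
    n = nearest_band(wavelengths_nm, nir_nm)
    result = []
    for row in cube:
        out_row = []
        for spectrum in row:
            red, nir = spectrum[r], spectrum[n]
            total = nir + red
            out_row.append((nir - red) / total if total > 0 else 0.0)
        result.append(out_row)
    return result


# Hypothetical 1x2 cube with six bands
wavelengths = [450.0, 550.0, 670.0, 750.0, 800.0, 900.0]
leaf = [0.04, 0.08, 0.05, 0.40, 0.50, 0.50]   # healthy vegetation: low red, high NIR
soil = [0.15, 0.20, 0.25, 0.28, 0.30, 0.32]   # bare soil: flatter spectrum
print(ndvi_map([[leaf, soil]], wavelengths))
```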

Neural Network-Powered Vision Systems

Neural network-powered vision systems combine artificial intelligence with imaging technologies to improve object detection and scene interpretation. By using deep learning algorithms, these systems can adapt to new environments and tasks with minimal hardware changes. The AI-driven approach enhances the robot’s ability to interpret complex, dynamic scenes, making vision systems more versatile and efficient.

Quantum Imaging

Quantum imaging is an emerging frontier that exploits quantum properties of light to achieve clarity and sensitivity beyond the capabilities of conventional cameras. While still largely experimental, it holds potential for robotics in environments where precision is crucial, such as medical imaging or deep-space exploration.

These innovations signal the future of robotic vision, promising more intelligent, adaptive, and efficient robots across various fields.

Challenges and Considerations

As robotic vision technologies evolve, several key challenges must be addressed for optimal performance and broader adoption.

Environmental Challenges

Robots often operate in variable lighting conditions, weather extremes, and complex environments. Cameras can struggle in low light, fog, or heavy rain, which may reduce accuracy and reliability. Advanced sensors like LiDAR or infrared cameras are being used to address these limitations, helping improve performance in diverse environments.

Processing Requirements

High-resolution cameras generate vast amounts of data that must be processed in real time for accurate decision-making. This requires significant computational power, which can strain onboard processors. Balancing data quality against real-time processing is crucial for robotic autonomy, and efficient algorithms and specialized processing units, such as GPUs, are critical for achieving that balance.
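A quick calculation shows how fast the data piles up. Uncompressed video bandwidth is width × height × bytes per pixel × frame rate, and the time available per frame is 1 / frame rate. The Python sketch below runs these numbers for an illustrative 1920 × 1080, 8-bit RGB camera at 30 frames per second; actual sensors, pixel formats, and compression will change the figures.

```python
def raw_data_rate_mb_s(width: int, height: int, bytes_per_pixel: float, fps: float, cameras: int = 1) -> float:
    """Uncompressed video data rate in megabytes per second (1 MB = 10**6 bytes)."""
    return width * height * bytes_per_pixel * fps * cameras / 1e6


def frame_budget_ms(fps: float) -> float:
    """Time available to process each frame before the next one arrives."""
    return 1000.0 / fps


# Illustrative configuration: 1080p, 3 bytes per pixel (8-bit RGB), 30 fps
single = raw_data_rate_mb_s(1920, 1080, 3, 30)
quad = raw_data_rate_mb_s(1920, 1080, 3, 30, cameras=4)
print(f"one camera:   {single:.1f} MB/s")   # 186.6 MB/s
print(f"four cameras: {quad:.1f} MB/s")     # 746.5 MB/s
print(f"budget per frame: {frame_budget_ms(30):.1f} ms")  # 33.3 ms
```

Every stage of the perception pipeline, from capture to decision, has to fit inside that per-frame budget, which is why techniques such as lower resolutions, regions of interest, and hardware acceleration matter so much.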

Cost and Scalability

Advanced vision technologies, such as LiDAR and multi-camera systems, can be expensive, posing challenges for scaling robots, particularly in industries with large fleets or commercial applications. Manufacturers face the challenge of balancing cutting-edge capabilities with cost-efficiency to make these systems accessible for widespread use.

Wrapping Up

The evolution of robotic vision, from basic monocular cameras to advanced systems like RGB-D, Time-of-Flight (ToF), and multi-sensor fusion, has revolutionized how robots perceive and interact with the world. These advancements have significantly enhanced robotic autonomy, precision, and safety.

As technology continues to progress, future innovations like polarization and quantum imaging promise even greater capabilities, further expanding the potential for robots across various industries. With these developments, robots will achieve more accurate, efficient, and adaptable vision, transforming industries like healthcare, automotive, manufacturing, and more.

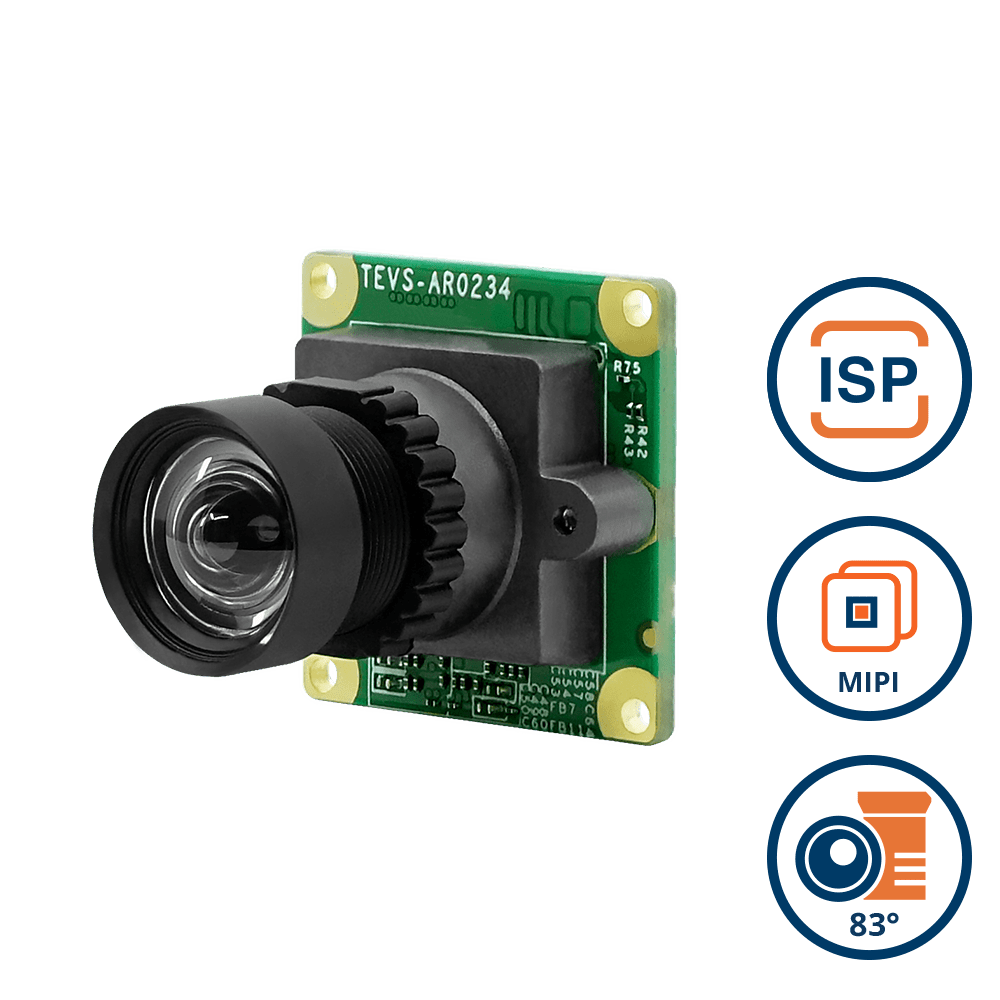

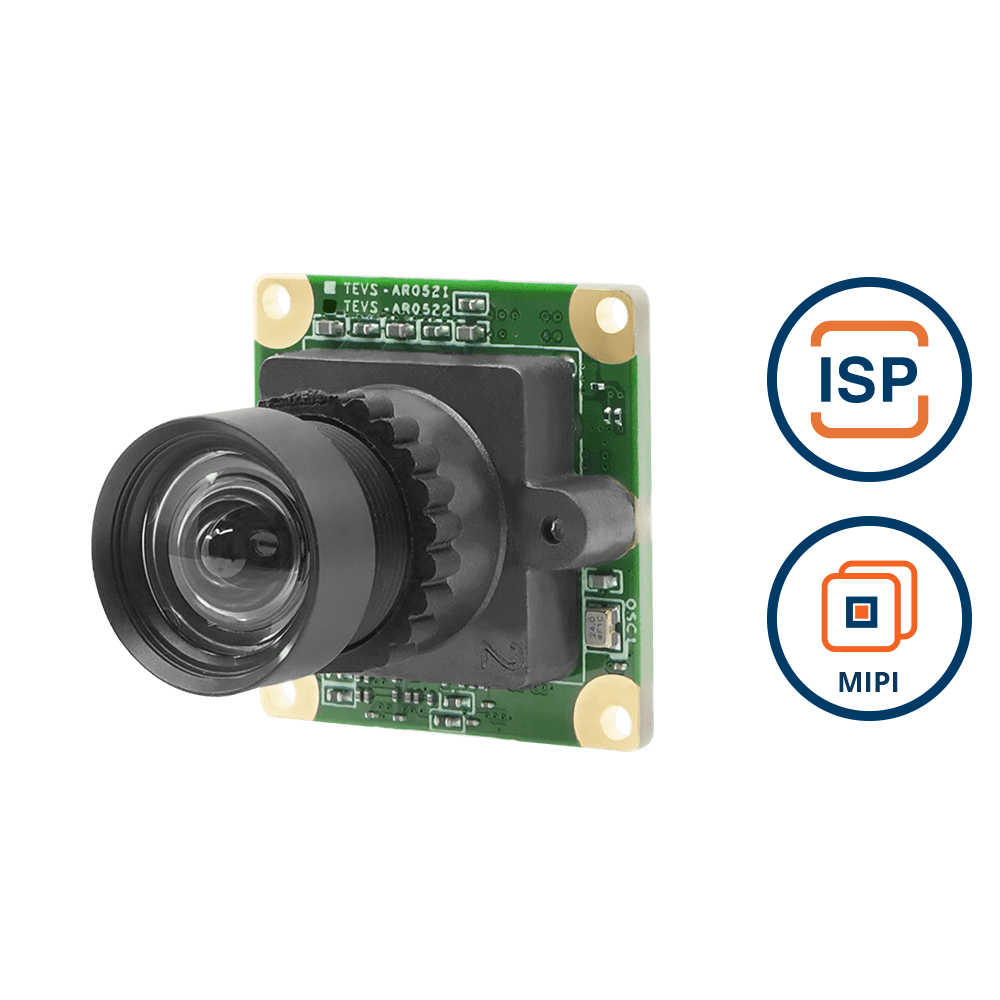

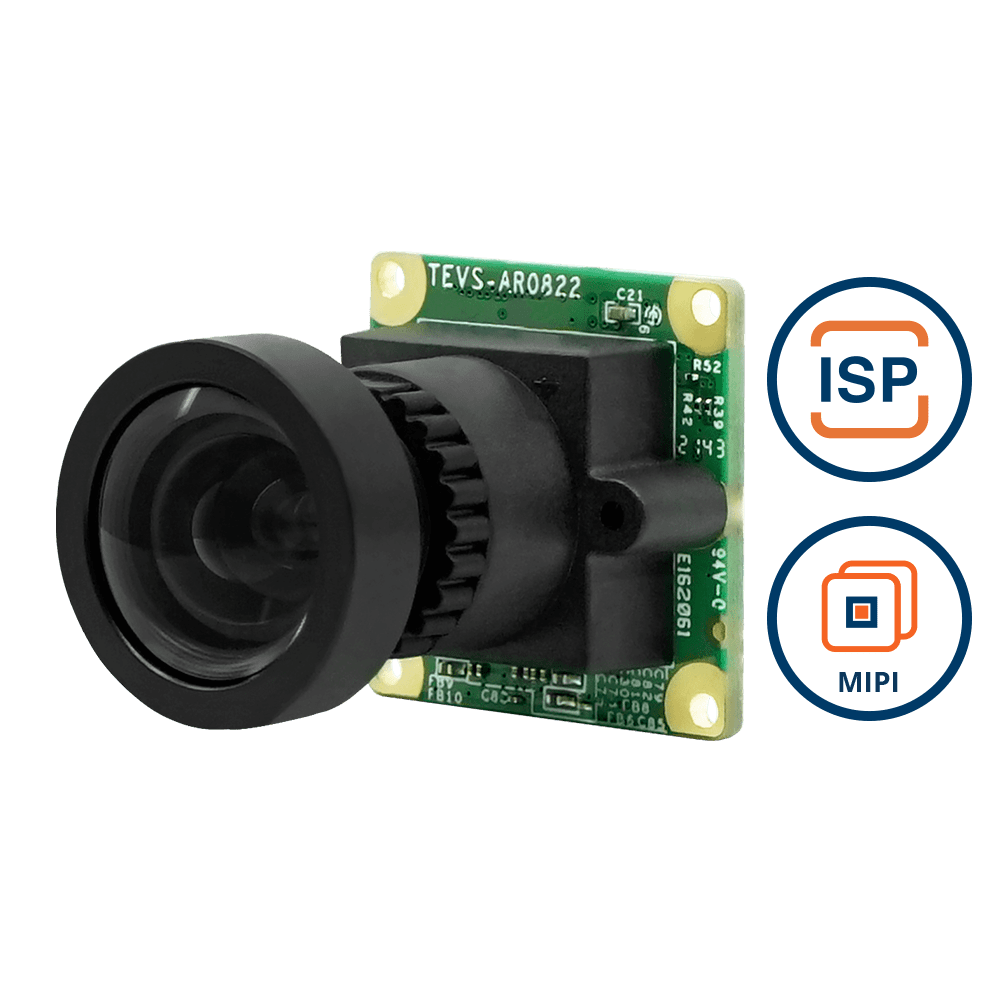

TechNexion offers a wide range of cutting-edge embedded vision cameras designed for diverse robotic applications. With features like high resolution, global shutter, and near-infrared sensitivity, TechNexion’s cameras are built to meet the demands of the most challenging environments. Explore how our solutions can enhance your robotic systems today! Contact us to learn more.
