Robotics has evolved significantly, with control algorithms playing a fundamental role in this progression. These algorithms serve as the backbone of robotic systems, enabling machines to perform tasks with precision, adaptability, and efficiency. From industrial robots performing repetitive assembly-line tasks to autonomous vehicles navigating complex environments, control algorithms are indispensable.

One of the earliest and most widely used approaches is the PID (Proportional-Integral-Derivative) controller, which offers simplicity and effectiveness for many applications. However, with advancements in artificial intelligence and computational power, more sophisticated techniques like reinforcement learning have emerged, allowing robots to learn and adapt to dynamic, uncertain scenarios.

In this blog post, we’ll trace how control algorithms in robotics have evolved, from classical PID and model predictive control to adaptive control and reinforcement learning.

Fundamentals of Control Systems

Control systems enable robots to execute tasks with precision and adaptability. At their core, control algorithms process sensor data, compare it with the desired output, and compute the adjustments needed to reduce the difference. This process lets robots respond effectively to dynamic environments and varying conditions.

A control system typically consists of three key components: sensors (to measure the state of the system and its environment), controllers (to process the data and decide on actions), and actuators (to execute the resulting movements). Together, these components form the framework for decision-making and motion control in robotics.

There are two main types of control: open-loop and closed-loop systems. Open-loop control operates without feedback, following predefined commands regardless of external changes. While simple and cost-effective, it lacks adaptability, making it suitable for predictable tasks like conveyor belt operations.

In contrast, closed-loop control uses feedback to continuously adjust its actions based on real-time measurements. This makes it well suited to complex tasks such as keeping a robotic arm stable or guiding an autonomous vehicle.
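To make the difference concrete, here is a minimal sketch. It assumes a hypothetical first-order motor-speed model, dx/dt = -x + u + d, where d is a load disturbance that the open-loop command does not know about. The model, the disturbance of -0.3, and the feedback gain of 5 are illustrative assumptions, not values from a real system.

```python
def run(feedback_gain, target=1.0, disturbance=-0.3, dt=0.01, t_end=10.0):
    """Simulate a hypothetical motor-speed plant dx/dt = -x + u + disturbance.

    feedback_gain = 0 gives pure open-loop control; feedback_gain > 0 adds
    proportional feedback from the measured speed x.
    """
    nominal_u = target  # open-loop command: the model predicts x -> u when disturbance = 0
    x = 0.0             # measured speed (the "sensor" reading)
    for _ in range(round(t_end / dt)):
        u = nominal_u + feedback_gain * (target - x)  # controller output sent to the actuator
        x += dt * (-x + u + disturbance)              # explicit Euler step of the plant
    return x


open_loop = run(feedback_gain=0.0)
closed_loop = run(feedback_gain=5.0)
print(f"open loop:   {open_loop:.3f}")    # about 0.700
print(f"closed loop: {closed_loop:.3f}")  # about 0.950
```

With the gain set to 0, the command never reacts to the measured speed, so the unmodeled load leaves the motor at about 0.7 instead of 1.0. With proportional feedback the speed settles near 0.95; the remaining offset is the kind of steady-state error that the integral term of a PID controller is designed to remove.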

Understanding these fundamentals is essential for designing robots that operate efficiently, adapt to uncertainties, and maintain consistent performance across diverse applications.

PID Controllers: The Foundation of Control Systems

The Proportional-Integral-Derivative (PID) controller is one of the most widely used feedback mechanisms in control systems. It operates by continuously monitoring the difference, or error, between a system’s desired state (setpoint) and its actual state. Using this error signal, the PID controller employs three distinct terms to adjust the system’s control input dynamically:

- Proportional (P): This term is directly proportional to the current error, providing immediate correction based on the magnitude of the deviation. However, relying solely on this term may result in steady-state error.

- Integral (I): By accumulating past errors over time, the integral term keeps adjusting the output until a persistent offset (for example, one caused by a constant load) is gone, eliminating steady-state error and ensuring long-term accuracy.

- Derivative (D): This term reacts to the rate of change of the error, anticipating where the error is heading; this damps overshoot and produces smoother, more stable adjustments.
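In the common parallel form, the three terms are simply summed to produce the control input u(t). Here e(t) is the error between the setpoint r(t) and the measured output y(t), and K_p, K_i, K_d are the tuning gains:

```latex
u(t) = K_p\, e(t) + K_i \int_0^t e(\tau)\, d\tau + K_d\, \frac{de(t)}{dt},
\qquad e(t) = r(t) - y(t)
```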

When combined and properly tuned, these three terms let the PID controller maintain system stability while minimizing error.
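The sketch below combines the three terms in discrete time (rectangular integration, backward-difference derivative) and compares a P-only controller with a full PID on a hypothetical first-order plant subject to a constant load. The plant model, the gains, and the load value are assumptions chosen for illustration; a production controller would also need anti-windup, derivative filtering, and output limits, none of which are included here.

```python
class PID:
    """Parallel-form PID in discrete time (no anti-windup, filtering, or output limits)."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement):
        error = setpoint - measurement                 # P: current error
        self.integral += error * self.dt               # I: running sum of past error
        if self.prev_error is None:
            derivative = 0.0  # no history yet: skip D to avoid a first-step "kick"
        else:
            derivative = (error - self.prev_error) / self.dt  # D: rate of change of error
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def final_error(controller, setpoint=1.0, load=-0.5, dt=0.01, t_end=30.0):
    """Run a hypothetical plant dx/dt = -x + u + load and return the remaining error."""
    x = 0.0
    for _ in range(round(t_end / dt)):
        u = controller.update(setpoint, x)
        x += dt * (-x + u + load)  # explicit Euler step of the plant
    return setpoint - x


dt = 0.01
print(f"P only: {final_error(PID(2.0, 0.0, 0.0, dt)):.3f}")  # about 0.5
print(f"PID:    {final_error(PID(2.0, 1.0, 0.1, dt)):.3f}")  # close to zero
```

With these assumed values, the P-only controller settles with an error of about 0.5, while the integral term in the full PID drives the error toward zero, matching the steady-state behavior described above.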

Applications of PID Controllers

PID controllers find widespread application in robotics and automation due to their versatility and reliability. For instance:

- Robotic Arms: They are used to ensure precise positioning and movement, allowing robotic arms to handle intricate assembly tasks.

- Drones: PID controllers stabilize flight dynamics by maintaining orientation and altitude, crucial for reliable performance.

- Motion Control: Industrial processes often use PID control to regulate motors, ensuring smooth and accurate movements in manufacturing systems.

Their ability to quickly respond to disturbances and maintain stability makes PID controllers an essential tool across numerous fields.

Limitations of PID Control

Despite their advantages, PID controllers have limitations. With fixed gains, a PID controller may struggle to cope with non-linear, time-varying, or highly dynamic systems, leading to suboptimal performance or even instability. This highlights the need for more advanced control techniques in complex environments or under unpredictable conditions.

Model Predictive Control (MPC): Planning with Precision

Model Predictive Control (MPC) represents a significant advance over traditional methods such as PID control. At its core, MPC uses a dynamic model of the system to predict future states over a defined time horizon. At each time step, it solves an optimization problem to find the sequence of control actions that best guides the system toward the desired outputs while respecting specified constraints. Only the first action of that sequence is applied; at the next step, the horizon shifts forward and the optimization is repeated with fresh measurements, which is why MPC is also called receding-horizon control.
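A minimal receding-horizon sketch helps show the mechanics. It assumes a hypothetical first-order joint-motor model (speed w, input u), restricts the input to a grid of values in [-1, 1] as the input constraint, and optionally imposes a speed limit w_max as a state constraint. Instead of the quadratic-programming or nonlinear solvers used in practice, it simply enumerates every input sequence over a three-step horizon, which is only feasible for toy problems like this one.

```python
import itertools

DT = 0.1                                # sample time [s] (assumed)
U_GRID = [i / 4 for i in range(-4, 5)]  # input constraint: u in {-1.0, -0.75, ..., 1.0}


def motor_step(w, u):
    """Hypothetical joint-motor model dw/dt = -w + u, discretized with one Euler step."""
    return w + DT * (-w + u)


def mpc_control(w, target, horizon=3, w_max=float("inf")):
    """Try every input sequence over the horizon; return the first input of the cheapest feasible one."""
    best_cost, best_u = float("inf"), 0.0
    for seq in itertools.product(U_GRID, repeat=horizon):
        pred, cost = w, 0.0
        for u in seq:
            pred = motor_step(pred, u)    # predict with the model
            if pred > w_max + 1e-9:       # state constraint violated: reject this sequence
                cost = float("inf")
                break
            cost += (pred - target) ** 2  # tracking cost accumulated over the horizon
        if cost < best_cost:
            best_cost, best_u = cost, seq[0]
    return best_u


def run(target, steps=60, w_max=float("inf")):
    """Closed loop: re-plan every step and apply only the first input (receding horizon)."""
    w, history = 0.0, []
    for _ in range(steps):
        u = mpc_control(w, target, w_max=w_max)
        w = motor_step(w, u)  # in this toy, the "real" plant equals the model (no mismatch)
        history.append((w, u))
    return history


print(round(run(0.5)[-1][0], 3))
print(max(w for w, _ in run(0.8, w_max=0.6)))
```

Calling run(0.5) drives the speed to about 0.5. Calling run(0.8, w_max=0.6) asks for a speed the constraint forbids; the controller approaches the limit but never exceeds it, because any candidate sequence whose predicted speed crosses w_max is discarded before its first input is applied.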

Advantages of MPC

One of MPC’s key advantages is its ability to handle multi-variable systems and to enforce constraints on inputs and states. This makes it particularly suitable for applications where safety, efficiency, or operational limits are critical. In addition, because MPC predicts the system’s trajectory, it can act on anticipated deviations before they occur, which often improves precision compared with purely reactive controllers.

Applications of MPC

MPC is widely used in advanced applications such as autonomous vehicles and mobile robotics. In self-driving cars, for instance, MPC can plan smooth trajectories through complex environments while accounting for obstacle avoidance, speed limits, and passenger comfort.

Similarly, in robotics, MPC excels in tasks requiring flexible motion planning and adaptation to dynamic surroundings. Its versatility and predictive capabilities position MPC as a vital tool in the evolution of modern control systems.

Limitations of MPC

Despite its numerous advantages, Model Predictive Control (MPC) is not without limitations. One of its primary challenges is the high computational demand associated with solving optimization problems in real-time.

Additionally, the performance of MPC heavily relies on the accuracy of the system model; any discrepancies between the model and the actual system can lead to suboptimal or even unstable control actions.

Adaptive Control: Learning in Real Time

Adaptive control enables robots to adjust their controller parameters in response to changing environments or uncertainties. Unlike fixed-parameter controllers, an adaptive controller continuously updates its parameters (or the model they are based on) from measured data, maintaining good performance even when conditions drift away from the initial assumptions. It’s particularly useful when precise models of the robot or its environment are unavailable or impractical to obtain.
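As one concrete instance, the sketch below uses model-reference adaptive control (MRAC) with the classic MIT rule, a textbook adaptive scheme rather than the method of any particular robot. It assumes a hypothetical first-order plant dy/dt = -a·y + k·u whose gain k has doubled (for example, after a payload change) while the controller was tuned for k = 1. The adjustable gain theta is updated online so that the plant output y follows a reference model output ym; all numeric values are illustrative assumptions.

```python
def simulate_mrac(k_true=2.0, k_model=1.0, a=1.0, gamma=0.5, theta0=1.0,
                  r=1.0, dt=0.01, t_end=30.0):
    """MRAC with the MIT rule on a hypothetical plant dy/dt = -a*y + k_true*u.

    The controller u = theta * r starts at theta0 = 1.0, which would be correct
    if the plant gain were k_model; theta is adapted so that y tracks the
    reference model dym/dt = -a*ym + k_model*r.
    """
    y = ym = 0.0
    theta = theta0
    for _ in range(round(t_end / dt)):
        u = theta * r                       # adjustable feedforward gain
        e = y - ym                          # error between plant and reference model
        # MIT rule: gradient step on e**2 / 2; ym is proportional to de/dtheta here
        theta += dt * (-gamma * e * ym)
        y += dt * (-a * y + k_true * u)     # real plant; k_true is unknown to the controller
        ym += dt * (-a * ym + k_model * r)  # reference model (desired behavior)
    return theta, y - ym


theta, err = simulate_mrac()
print(f"adapted gain theta = {theta:.3f}, tracking error = {err:.4f}")
```

With these assumed values, theta settles near k_model / k_true = 0.5 and the tracking error shrinks toward zero. The MIT rule is simple but can become unstable for large adaptation gains or large reference signals, which is one reason stable adaptation is hard in practice.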

Applications of Adaptive Control

Some common applications include:

Collaborative Robots (Cobots):

- Adjusting force and motion to safely interact with humans.

- Adapting to variations in task requirements, such as different payloads.

Aerial Robots:

- Compensating for wind disturbances during flight.

- Modifying control strategies for varied terrains or payload conditions.

Industrial Automation:

- Enhancing precision in tasks involving unpredictable variables, like temperature or material properties.

Limitations of Adaptive Control

While adaptive control offers flexibility and improved performance, it comes with certain challenges:

- Computational Intensity: Real-time learning and adjustments require significant processing power, which may strain system resources.

- Stable Adaptation: Designing adaptation laws that respond effectively without overcompensating or destabilizing the system is difficult.

Reinforcement Learning: Robots That Learn by Doing

Reinforcement Learning (RL) is a machine learning approach in which robots improve their performance through trial and error. An RL agent interacts with its environment, receives rewards or penalties for its actions, and gradually learns a policy that maximizes its cumulative reward. Unlike traditional controllers, many RL methods do not require a predefined model of the system dynamics, which makes them attractive for complex or dynamic tasks.
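The sketch below shows the trial-and-error loop with tabular Q-learning, one of the simplest RL algorithms, on a hypothetical six-cell corridor where a robot must reach a goal cell. Each move costs -1, so shorter paths earn more total reward. The environment, rewards, and hyperparameters are assumptions for illustration; robotic RL in practice typically relies on function approximation and large-scale simulation.

```python
import random

N_STATES = 6        # corridor cells 0..5 (hypothetical task)
GOAL = 5            # episode ends when the robot reaches this cell
ACTIONS = (-1, +1)  # move left, move right


def env_step(state, action):
    """Deterministic corridor with walls at both ends and a -1 reward per move."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    return nxt, -1.0, nxt == GOAL


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = rng.randrange(GOAL)  # random non-goal start cell
        for _ in range(100):         # cap episode length
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))  # explore: random action
            else:
                best = max(q[state])             # exploit: greedy, random tie-break
                a = rng.choice([i for i, v in enumerate(q[state]) if v == best])
            nxt, reward, done = env_step(state, ACTIONS[a])
            # Q-learning target: reward plus discounted best value of the next state
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
            if done:
                break
    return q


def greedy_policy(q):
    """Best learned action for each non-goal cell."""
    return [ACTIONS[0] if row[0] > row[1] else ACTIONS[1] for row in q[:GOAL]]


print(greedy_policy(train()))
```

After training, the greedy policy moves right (+1) from every non-goal cell, which is the shortest route to the goal. The agent is never told this directly; it infers it from the rewards collected while exploring.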

Applications of Reinforcement Learning

- Autonomous Vehicles: Helping vehicles navigate traffic by optimizing decisions such as lane changes and braking.

- Humanoid Robots: Teaching robots to walk, balance, or manipulate objects through self-guided learning.

- Industrial Automation: Optimizing assembly-line processes, such as packing or sorting, with minimal human intervention.

Challenges With Reinforcement Learning

- Training Time: RL systems often require extensive training periods to achieve reliable results, especially in complex environments.

- Hardware Requirements: High computational power is necessary to handle large-scale simulations and data processing.

- Safety: Applying RL in real-world settings poses risks due to the trial-and-error nature of learning, which can lead to unsafe or inefficient behavior during the training phase.

Comparison of Control Algorithms

Control algorithms differ greatly in terms of complexity, suitability for tasks, and real-world application contexts.

PID control is the simplest of these algorithms: it is easy to implement and highly effective for systems that need straightforward feedback, such as basic temperature control or simple robotics tasks. It works well in stable environments but struggles with non-linearity and with large-scale or complex systems.

MPC is more sophisticated: it predicts a system’s future states and optimizes its actions over a horizon while respecting constraints. This makes it well suited to dynamic systems such as robotic arms, but its high computational demand makes it less practical for resource-constrained applications.

Adaptive control excels in environments with changing conditions. Its ability to modify control parameters in real time makes it well suited to collaborative robots (cobots) that interact with humans and other systems. However, it is computationally intensive and demands careful tuning to remain stable in dynamic environments.

Reinforcement learning is the most complex and flexible approach, enabling robots to improve their performance through trial and error. This makes it ideal for tasks involving decision-making and exploration, such as autonomous driving or humanoid locomotion. However, RL requires significant data and computing resources, as well as lengthy training periods.

Emerging Trends and Future Directions

The future of control algorithms in robotics is shifting toward hybrid approaches that combine traditional methods like PID or MPC with more advanced techniques like reinforcement learning (RL).

These hybrid models aim to leverage the strengths of both, providing the reliability and efficiency of classical methods while enabling the adaptability and learning capabilities of RL. This combination is particularly beneficial for complex systems that need to handle both predictable and dynamic environments, such as industrial robots or autonomous vehicles.

Another emerging trend is the integration of AI-driven control algorithms with edge computing. By processing data closer to the source, edge computing reduces latency and improves the responsiveness of robotic systems.

This is especially crucial for real-time applications, such as autonomous robots operating in unpredictable environments, where quick decision-making and fast adaptation are required. The fusion of AI with edge computing enhances the efficiency, scalability, and real-time capabilities of control algorithms, pushing the boundaries of what robots can achieve autonomously.

These developments promise to expand the range and efficiency of robotic systems, enabling more intelligent, responsive, and adaptable robots in various industries.

Wrapping Up

Control algorithms are essential for the functionality and adaptability of robotic systems. From the simplicity of PID to the advanced learning capabilities of reinforcement learning, each algorithm offers unique strengths for different applications. As technology progresses, hybrid approaches and AI-driven innovations promise to further enhance robotic performance, making systems more intelligent, efficient, and capable of handling complex, real-world tasks. The future of robotics lies in the continuous evolution of these control strategies.

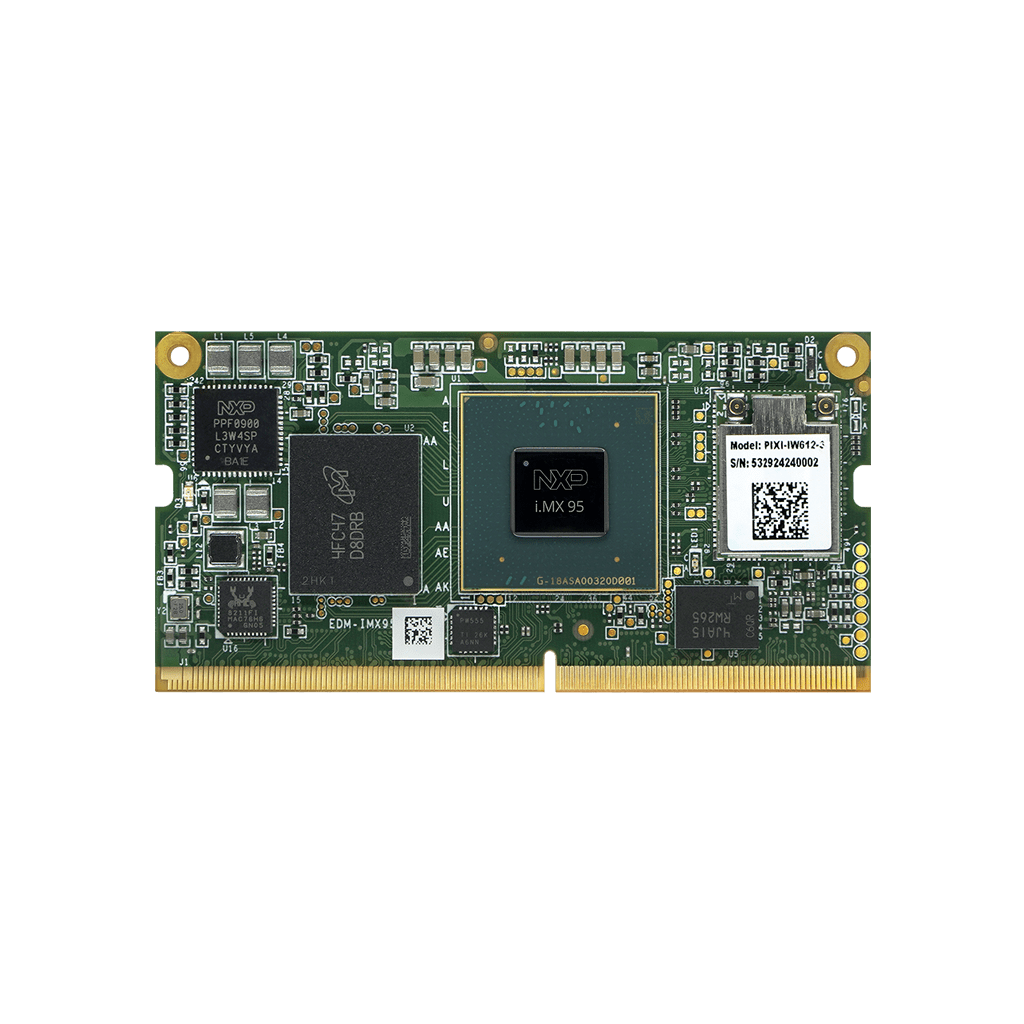

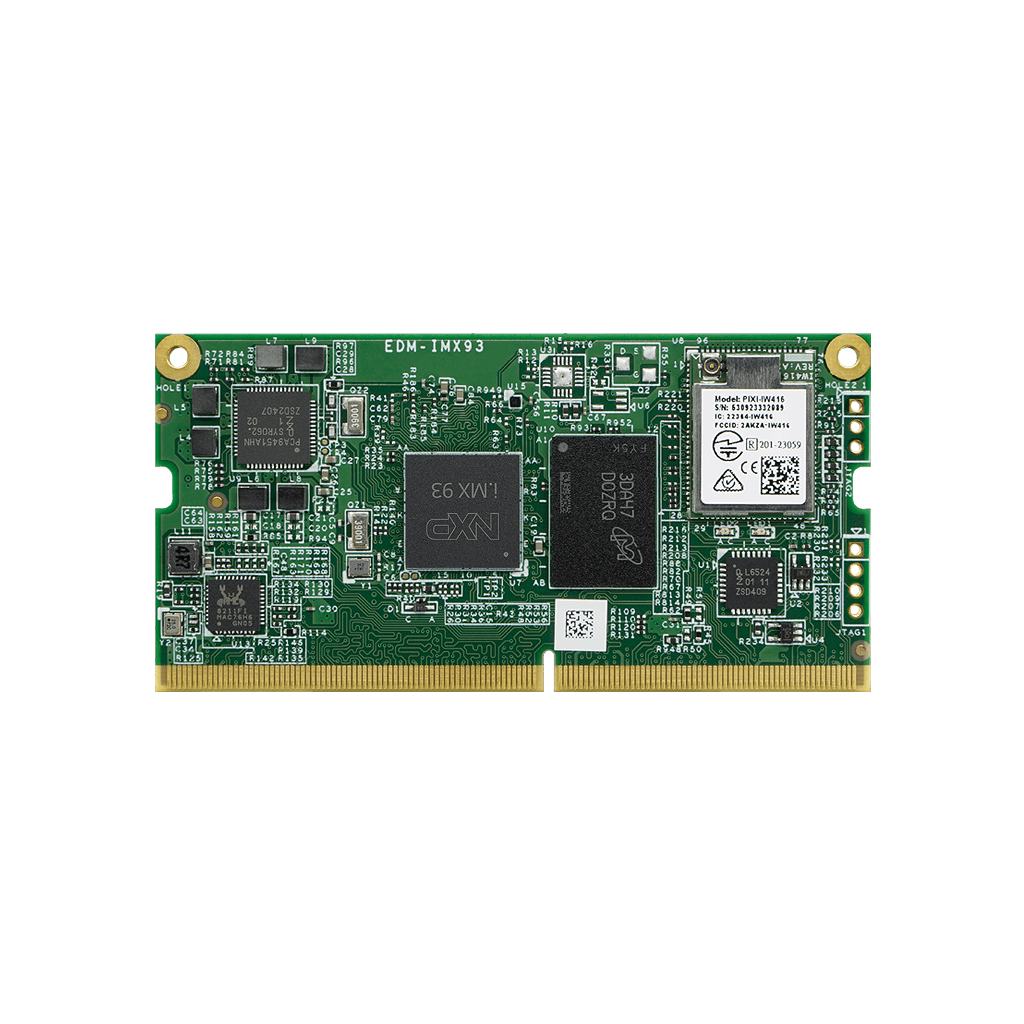

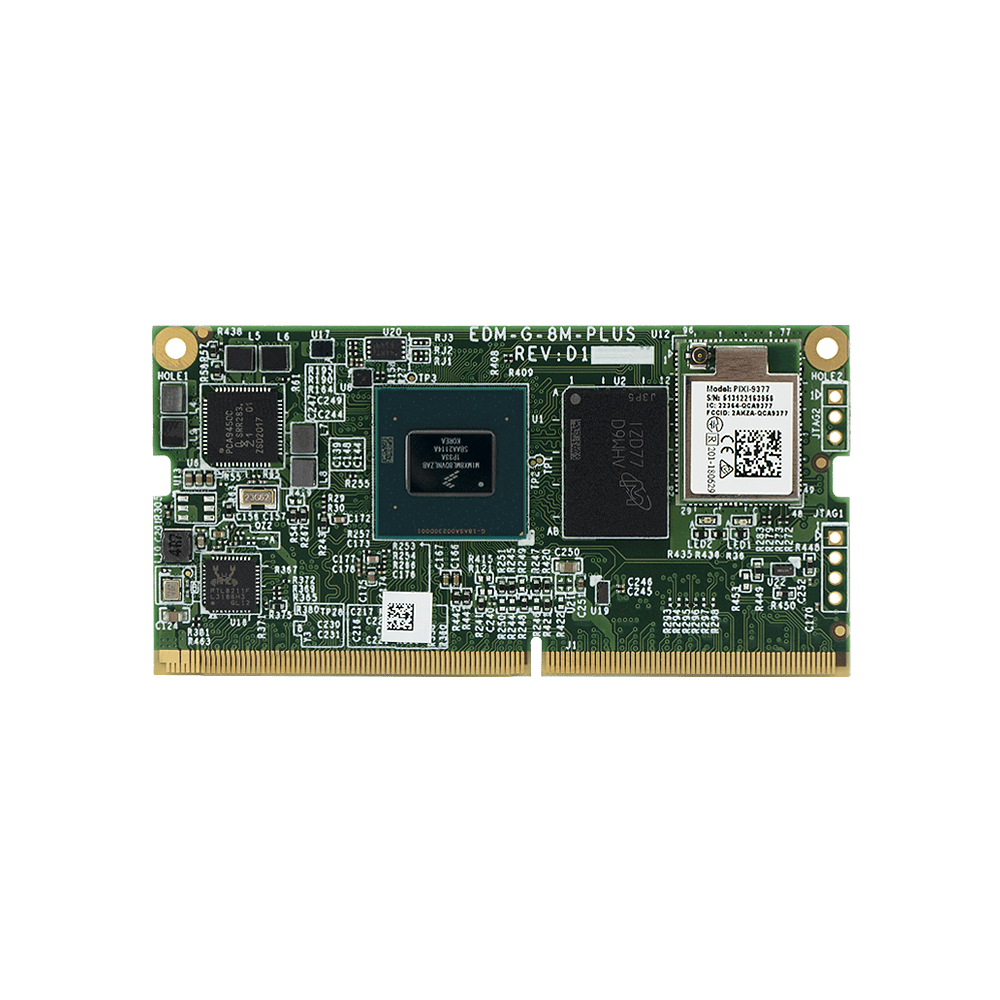

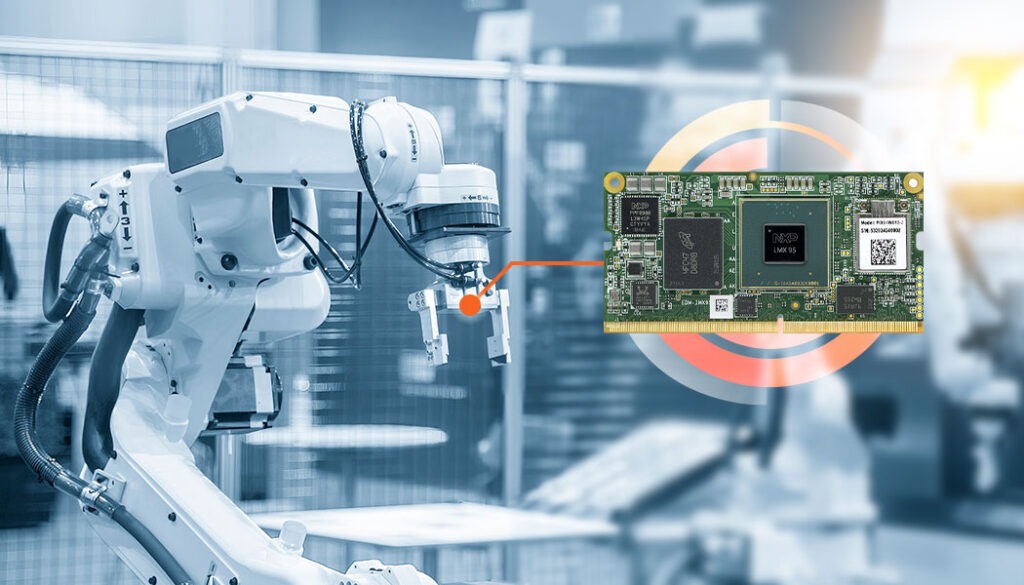

TechNexion - making vision and high-end processing possible

TechNexion has been in the embedded systems space for more than two decades. With a diverse product portfolio that includes embedded vision cameras and system-on-modules (SoMs), TechNexion can help meet both the vision and the processing needs of your robots and autonomous vehicles. With modern SoMs such as the EDM-IMX95, robotics companies can reduce development time and accelerate time to market.
