Robotics has undergone a profound transformation in recent years with the introduction of vision-guided robotics. At the core of this shift are cameras, modern processors, and advanced computer vision algorithms, which together give robots visual perception. The ability to “see” and interpret their surroundings has enabled robots to interact with humans and objects in ways that were previously out of reach. This article examines the impact of cameras on robotics and how they are transforming the field.

Robots no longer have to follow instructions blindly or rely only on non-visual sensors to complete their jobs. Cameras give machines access to real-time visual information, and advanced image processing algorithms allow robots to analyze that input, identify objects, and make decisions based on what they see.

One of the main benefits of vision-guided robots is improved perception. Cameras that record high-resolution images and video let robots recognize minute features, subtle changes, and variations in their surroundings. With the help of depth cameras, robots can also maneuver over difficult terrain, recognize and avoid obstacles, and manipulate objects precisely, all of which are prerequisites for true autonomy.

Role of a Camera in Perception Enhancement

In vision-guided robotics, cameras are central to improving perception. The visual data collected by camera sensors tells a robot a great deal about its surroundings, and image processing methods turn that data into insights that let the robot see, understand, and interact with its environment in greater depth. Let’s examine the main factors that enhance robotic perception.

- Cameras act as robots’ eyes, capturing high-resolution photos or video feeds of their surroundings. They range from standard RGB cameras to specialized variants such as near-infrared (NIR) and depth cameras. By capturing visual data, cameras give robots access to much the same visual information humans use for perception and, in the case of NIR and depth cameras, to information the human eye cannot capture directly, such as near-infrared light and per-pixel distance.

- Once the visual data has been captured, robots use image processing techniques such as image segmentation, image filtering, edge detection, and feature extraction to extract useful information from it. These algorithms let robots recognize and locate relevant objects or regions of interest in an image, which forms the basis for further analysis.

- Cameras also let robots identify and track objects in their surroundings. Computer vision algorithms identify and locate objects based on their size, shape, color, texture, or other distinguishing characteristics. Popular object detection algorithms such as YOLO (You Only Look Once) and Faster R-CNN (Faster Region-based Convolutional Neural Network) can detect and identify multiple objects in a single image. By following these detections across successive frames, robots obtain tracking data they can use for interaction and manipulation.
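The following is a minimal sketch of one of the image processing techniques listed above, Sobel edge detection, written in plain Python. It assumes a grayscale image stored as a list of rows of intensity values. The function names and the threshold are illustrative. A production system would use an optimized vision library rather than nested loops.

```python
def sobel_magnitude(image):
    """Gradient magnitude of a grayscale image given as a list of rows.

    Border pixels are left at 0 for simplicity.
    """
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]  # responds to horizontal intensity change
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]  # responds to vertical intensity change
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = gy = 0
            for i in range(3):
                for j in range(3):
                    p = image[r + i - 1][c + j - 1]
                    gx += kx[i][j] * p
                    gy += ky[i][j] * p
            out[r][c] = (gx * gx + gy * gy) ** 0.5
    return out


def edge_mask(image, threshold):
    """1 where the gradient magnitude exceeds the threshold, else 0."""
    return [[1 if m > threshold else 0 for m in row] for row in sobel_magnitude(image)]


# A dark region (0) next to a bright region (100) produces a vertical edge.
image = [[0, 0, 100, 100, 100] for _ in range(5)]
edges = edge_mask(image, threshold=200)
```

The resulting edge mask is the kind of intermediate output that later stages, such as feature extraction or object localization, build on.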

Stereo vision cameras, which consist of two or more spatially separated cameras, give robots depth perception and let them build a 3D map of their surroundings. Robots estimate depth by measuring the disparity, which is the shift in position of the same scene point between the images captured by each camera: the closer the point, the larger the disparity. Robots can also use other depth technologies such as time of flight or structured light. With this depth awareness, robots can perform tasks like navigation, path planning, and obstacle detection.
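For a rectified stereo pair, disparity and depth are related by Z = f · B / d. Here f is the focal length in pixels, B is the baseline between the two cameras in meters, and d is the disparity in pixels. The sketch below simply applies that formula. It assumes the disparities have already been computed by a stereo matching step, which is not shown. The function names are illustrative.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in meters of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity means no match (or a point at infinity), so depth is undefined.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def depth_map(disparity_map, focal_length_px, baseline_m):
    """Convert a disparity map (list of rows) to depth, using None where disparity is invalid."""
    return [
        [depth_from_disparity(d, focal_length_px, baseline_m) if d > 0 else None for d in row]
        for row in disparity_map
    ]
```

Take f = 700 px, B = 0.12 m, and d = 42 px: the formula gives Z = 84 / 42 = 2.0 m. Because Z is inversely proportional to d, a one-pixel disparity error matters more for distant objects, so depth precision falls off with range.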

Role of Cameras in Object Recognition and Classification

Object recognition and classification are essential components of vision-guided robotics. Combined with machine learning algorithms, camera-enabled robots can achieve remarkable precision in tasks such as sorting, cutting, and sawing. Let’s examine each component in depth with examples.

Object Recognition Algorithms Using Machine Learning

Machine learning algorithms, particularly deep learning techniques such as convolutional neural networks (CNNs), have revolutionized object recognition. For example, a robot could be trained to distinguish between different varieties of flowers.

Consider a camera-equipped delivery robot that must recognize road signs. A CNN trained on traffic sign images lets the robot reliably recognize and classify stop signs, yield signs, speed limit signs, and more, so it can obey traffic rules and navigate hazardous situations.
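A full CNN is too large for a short example, but its final classification step is simple. The network outputs one raw score (a logit) per class, and a softmax turns those scores into probabilities. The sketch below shows only that last step. The class labels and score values are hypothetical stand-ins for a trained network's output.

```python
import math


def softmax(logits):
    """Convert raw network scores into probabilities that sum to 1."""
    m = max(logits)  # subtracting the maximum avoids overflow in exp()
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits, labels):
    """Return the most probable label and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return labels[best], probs[best]


# Hypothetical scores a trained CNN might output for one cropped sign image
labels = ["stop", "yield", "speed_limit"]
label, confidence = classify([4.1, 1.2, 0.3], labels)
```

In practice, a robot would usually also compare the confidence against a threshold and treat low-confidence results as "unknown" rather than acting on them.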

Using Camera Data to Train More Accurate Models

Cameras record the visual data that serves as training material for object recognition models. The quality and variety of this camera dataset are essential for successful training. Exposing the model to many instances of each object, including differences in lighting, viewing angle, and background, improves its ability to generalize and recognize items reliably.

Imagine a robot in an inventory setting that identifies different product categories on shelves (often called an inventory tracking robot). The robot’s camera captures images of various objects from different perspectives and under different lighting conditions. Developers can use these images to train a machine learning model that enables the robot to correctly categorize and distinguish between items such as canned food and household supplies.
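Capturing real images under every lighting condition and viewpoint is expensive. Training pipelines therefore commonly supplement camera data with data augmentation, a technique not described above. The minimal sketch below assumes 8-bit grayscale images stored as lists of rows. It randomly varies brightness to mimic lighting changes and mirrors the image to mimic a different viewpoint. The function names and ranges are illustrative.

```python
import random


def adjust_brightness(image, factor):
    """Scale pixel intensities to mimic brighter or darker lighting, clipped to 0-255."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]


def horizontal_flip(image):
    """Mirror the image left to right to mimic viewing the object from the other side."""
    return [list(reversed(row)) for row in image]


def augment(image, rng, brightness_range=(0.6, 1.4), flip_probability=0.5):
    """Produce one randomly augmented copy of a training image."""
    out = adjust_brightness(image, rng.uniform(*brightness_range))
    if rng.random() < flip_probability:
        out = horizontal_flip(out)
    return out


rng = random.Random(0)  # fixed seed so the augmented dataset is reproducible
sample = [[10, 50, 90], [30, 70, 110]]
augmented = [augment(sample, rng) for _ in range(4)]
```

Flipping is only safe when a mirrored object still belongs to the same class. For products identified by printed text or asymmetric labels, it should be disabled.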

Using Cameras for Real-time Object Recognition and Categorization

We have already discussed how object recognition works with cameras and ML-based algorithms.

Cameras also let robots recognize and categorize objects in real time. Efficient algorithms such as YOLO (You Only Look Once) and SSD (Single Shot MultiBox Detector) can find and classify items within the camera’s field of view, on suitable hardware fast enough to keep up with a live video feed.
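Single-stage detectors such as SSD and most YOLO versions predict many overlapping candidate boxes for the same object. They then keep only the best box per object using non-maximum suppression (NMS). Below is a minimal, framework-free sketch of greedy NMS. It assumes boxes given as (x1, y1, x2, y2) pixel corners, each with one confidence score. The 0.5 overlap threshold is a common but illustrative choice.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Indices of boxes kept by greedy NMS, visiting boxes from highest score down."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with any box already kept.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


detections = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
confidences = [0.9, 0.8, 0.7]
kept = non_max_suppression(detections, confidences)
```

In this example, the second box overlaps the first by an IoU of about 0.68, so it is treated as a duplicate and dropped. The distant third box is kept as a separate object.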

Real-time capability is invaluable in dynamic settings, for example in goods-to-person robots, pick-and-place robots, and patrol robots.

Consider a robot at a manufacturing plant that detects and sorts objects on a conveyor belt. Using real-time object detection and classification algorithms, the robot can recognize each component as it passes the camera and sort it precisely into the right category. RGB cameras are sufficient for identifying the objects, but a depth camera helps measure the distance to each object so the robot can pick and place it accurately.
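Once the RGB detector has found an object, its pixel location can be combined with the depth camera's reading to get a 3D position for the pick. The sketch below makes several assumptions:

- an ideal pinhole camera with no lens distortion;
- a depth image already aligned to the RGB image;
- hypothetical intrinsics (fx, fy, cx, cy).

Transforming the result into the robot's base frame would additionally require a hand-eye calibration, which is not shown.

```python
def box_center(box):
    """Center pixel (u, v) of a detection box given as (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return (x1 + x2) / 2, (y1 + y2) / 2


def pixel_to_camera_point(u, v, depth_m, fx, fy, cx, cy):
    """Back-project a pixel with known depth into camera coordinates (meters).

    Pinhole model: u = fx * X / Z + cx and v = fy * Y / Z + cy, solved for X and Y.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return x, y, depth_m


# Hypothetical detection box and intrinsics for a 640x480 image
u, v = box_center((360, 220, 400, 260))
point = pixel_to_camera_point(u, v, 0.5, fx=600.0, fy=600.0, cx=320.0, cy=240.0)
```

Here the object's center lies 60 px to the right of the optical center at 0.5 m depth, which corresponds to 5 cm to the right in camera coordinates.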

Integrating ML & AI with a Camera for Advanced Recognition

A robot with cameras and AI integration can recognize and interpret human gestures, which lets people interact with it naturally and intuitively. It can also recognize facial expressions and respond to people’s emotional cues. This is especially useful in telepresence robots used for telehealth, video conferencing, and remote operations.

Improving Accuracy with Camera-guided Robot Manipulation

Camera-guided robot manipulation uses cameras and visual feedback to improve the precision, accuracy, and control of robotic manipulation tasks. Techniques such as visual servoing, hand-eye coordination, and adaptive control strategies let robots execute complex grasping and manipulation tasks more efficiently and adaptably. Let us explore these in detail.

Hand-eye Coordination via Visual Servoing

Visual servoing is a technique that controls the movement and position of a robot’s end effector using visual feedback from cameras. The robot continuously compares the visual features in the current camera image with the desired features and adjusts its motion to reduce the difference until the task is accomplished. Because the control loop is closed through the camera, this method responds immediately and compensates for inaccuracies in the robot’s movements or in the object’s position. The fine control and coordination that visual servoing provides is what gives a robot hand-eye coordination.

To understand this better, consider a camera-equipped robotic arm that must retrieve an item from a cluttered table. Visual servoing keeps the robot’s gripper aligned with the object’s location and orientation. It typically uses a depth camera, or a combination of RGB and depth cameras depending on the complexity of the use case. Even if the object’s initial position is unknown or changes during the task, the robot can use visual feedback to adjust its movements and grasp the object successfully.
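The core of image-based visual servoing is a feedback law that commands motion proportional to the image-space error, v = -λ·e. Here e is the difference between the current and desired feature positions. The sketch below is a toy simulation, not a real controller. It assumes a single tracked point feature, and it assumes that a commanded motion shifts the feature by exactly that many pixels (an identity interaction matrix). A real system would map the error to end-effector velocities through the camera's interaction matrix and the robot's kinematics.

```python
import math


def visual_servo(feature, target, gain=0.5, tolerance_px=1.0, max_steps=100):
    """Drive an image feature toward a target pixel with the law v = -gain * e.

    Returns the final feature position and the number of control steps used.
    """
    x, y = feature
    for step in range(max_steps):
        ex, ey = x - target[0], y - target[1]  # image-space error e
        if math.hypot(ex, ey) < tolerance_px:
            return (x, y), step
        vx, vy = -gain * ex, -gain * ey  # proportional control command
        # Simplified plant (assumption): the feature moves by the commanded amount.
        x, y = x + vx, y + vy
    return (x, y), max_steps


# Feature detected at (400, 300); the gripper is aligned when it reaches (320, 240).
final_position, steps = visual_servo((400.0, 300.0), (320.0, 240.0))
```

With a gain of 0.5, the error halves at every step, from 100 px down to about 0.78 px after 7 steps. On real hardware, latency and arm dynamics limit how high the gain can go before the motion starts to oscillate.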

Object Grasping and Manipulation with Visual Feedback

Robots can use cameras to receive live visual information and adapt their grasping and manipulation techniques to the object’s appearance and the surrounding conditions. By analyzing visual data, robots can determine the best way to grasp an object, adjust their grip, and manipulate objects of different shapes, sizes, or textures.

For example, consider a robotic arm with a camera that picks up various items from a container. The camera provides visual feedback that lets the robot analyze the shapes and sizes of the objects. Based on this information, the robot can choose a suitable grasping strategy for each item, such as a two-finger pinch or a parallel-gripper grasp, ensuring a stable grip and effective manipulation.
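A very simple version of this decision can be written as a rule based on the object's measured width. The sketch below assumes:

- the width in pixels comes from a detection box;
- the depth comes from a depth camera;
- a pinhole model converts pixels to meters.

The gripper opening, the pinch threshold, and the strategy names are hypothetical. Real grasp planners also consider shape, orientation, and surface properties, often using learned models.

```python
def estimate_width_m(width_px, depth_m, fx):
    """Approximate physical width from image width using the pinhole model."""
    return width_px * depth_m / fx


def choose_grasp(width_m, max_opening_m=0.085, pinch_limit_m=0.02):
    """Pick a grasp strategy for an object of the given width (hypothetical rules)."""
    if width_m > max_opening_m:
        return None  # wider than the gripper can open; needs a different strategy
    if width_m <= pinch_limit_m:
        return "two_finger_pinch"  # small items: fingertip pinch
    return "parallel_grasp"  # larger items: close the parallel jaws around the object


# An object 60 px wide seen 0.5 m away by a camera with fx = 600 px is about 5 cm wide.
strategy = choose_grasp(estimate_width_m(60, 0.5, 600.0))
```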

Adaptive Control Techniques

Cameras make adaptive control techniques possible, in which the robot adjusts its behavior in real time based on camera input. By continually monitoring the visual input, robots can modify their control parameters, trajectories, or gripping forces in response to changes in their surroundings. This adaptability improves the robot’s robustness and flexibility across manipulation tasks. For instance, consider a camera-equipped robotic arm tasked with moving items on a conveyor belt. The camera provides continuous visual data, enabling the robot to adjust its grip force according to each item’s estimated weight and to correct its trajectory if the object’s position changes. This adaptive approach enables effective manipulation even in dynamic and uncertain situations.
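Two of the adjustments described above can be sketched as simple rules:

- **Grip force** follows from a two-finger friction model. To keep an object of mass m from slipping, the friction from both fingers must support its weight, 2·μ·N ≥ m·g, so N ≥ m·g / (2μ).
- **Trajectory** is re-targeted whenever the camera sees the object move by more than a tolerance.

The mass estimate, friction coefficient, safety factor, and tolerance are all assumed values. This is a rule-based adjustment, not a formal adaptive controller.

```python
import math

GRAVITY = 9.81  # m/s^2


def required_grip_force(estimated_mass_kg, friction_coeff=0.5, safety_factor=1.5):
    """Normal force per finger (N) so that friction from two fingers holds the object."""
    return safety_factor * estimated_mass_kg * GRAVITY / (2.0 * friction_coeff)


def update_goal(current_goal, observed_position, tolerance_m=0.01):
    """Re-target the trajectory if the camera sees the object move beyond tolerance."""
    if math.dist(current_goal, observed_position) > tolerance_m:
        return observed_position  # object moved: plan toward its new location
    return current_goal  # small change: keep the existing plan


force = required_grip_force(0.4)  # e.g. a 400 g item
goal = update_goal((0.30, 0.10, 0.05), (0.33, 0.10, 0.05))
```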

Use Cases of Embedded Cameras in Vision-guided Robotics

Cameras allow robots to perceive and interact with their surroundings, which opens up a wide range of applications. Here are some key camera-enabled use cases in vision-guided robotics:

Localization and Mapping

Cameras support robot localization and mapping, letting a robot determine where it is in its environment. By analyzing camera images with techniques such as simultaneous localization and mapping (SLAM), robots can build maps of their surroundings while tracking their own position within those maps.
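A complete SLAM system is far too large for a short example, but one of its building blocks is easy to show. Relative motions estimated from consecutive camera frames (visual odometry) can be chained into a pose in the map frame. The sketch below assumes a robot moving in 2D with poses (x, y, heading), with each relative motion expressed in the robot's own frame. The motion values are hypothetical. Small errors in each estimate accumulate, which is why full SLAM adds loop closure and map optimization on top of this.

```python
import math


def wrap_angle(angle):
    """Normalize an angle to the range [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def compose(pose, motion):
    """Apply a relative motion (dx, dy, dtheta), given in the robot frame, to a world pose."""
    x, y, theta = pose
    dx, dy, dtheta = motion
    return (
        x + dx * math.cos(theta) - dy * math.sin(theta),
        y + dx * math.sin(theta) + dy * math.cos(theta),
        wrap_angle(theta + dtheta),
    )


def integrate(start_pose, motions):
    """Chain relative motion estimates into a trajectory of world poses."""
    trajectory = [start_pose]
    for motion in motions:
        trajectory.append(compose(trajectory[-1], motion))
    return trajectory


# Hypothetical odometry: drive 1 m and turn left 90 degrees, then drive 1 m more.
path = integrate((0.0, 0.0, 0.0), [(1.0, 0.0, math.pi / 2), (1.0, 0.0, 0.0)])
```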

Navigation and Obstacle Avoidance

Cameras help robots navigate safely and avoid obstacles. Navigation can be either guided or fully autonomous:

- **Guided navigation** uses cameras to read barcodes or markers placed on the floor or surrounding walls to move from one point to another.
- **Autonomous navigation** uses stereo, time-of-flight, or structured light cameras to move without any markers or external guidance. Robots that navigate this way are called autonomous mobile robots (AMRs).

Both approaches rely on cameras; only the type of camera changes with the navigation method.

Obstacle avoidance can be done with either a depth camera or a single RGB camera; the complexity of the use case determines which to choose.
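With a depth camera, a basic obstacle check can be as simple as finding the nearest valid reading in the part of the image that covers the robot's path. The sketch below assumes a depth image in meters stored as a list of rows. It also assumes that a value of 0 means "no valid reading", a convention many depth cameras follow, though the device documentation should confirm it. The region of interest and the stopping distance are hypothetical. The single-RGB-camera approach requires different techniques and is not shown.

```python
def nearest_obstacle(depth_image, roi):
    """Smallest valid depth (m) inside roi = (row_start, row_end, col_start, col_end), ends exclusive."""
    r0, r1, c0, c1 = roi
    readings = [d for row in depth_image[r0:r1] for d in row[c0:c1] if d > 0]  # 0 = no reading
    return min(readings) if readings else None


def should_stop(depth_image, roi, stop_distance_m=0.5):
    """True if something in the region of interest is closer than the stopping distance."""
    nearest = nearest_obstacle(depth_image, roi)
    return nearest is not None and nearest < stop_distance_m


depth = [
    [2.0, 2.0, 2.0],
    [2.0, 0.4, 0.0],  # 0.4 m object in the path; 0.0 is a missing reading
    [2.0, 2.0, 2.0],
]
path_roi = (0, 3, 0, 3)  # hypothetical: the whole (tiny) image covers the robot's path
```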

Defect Detection and Quality Inspection

Cameras are used in industrial operations for quality inspection. Camera-equipped robots can capture detailed images of products and components, allowing them to perform visual inspections and detect defects or abnormalities. This enables consistent quality control while reducing the need for manual inspection.
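One classic way to implement this kind of inspection is golden-template comparison. Each captured image is compared against a reference image of a known-good part, and pixels that differ by more than a threshold are flagged. The sketch below assumes aligned grayscale images of the same size and controlled lighting. The threshold and the allowed defect count are hypothetical. Many modern inspection systems use learned models instead of, or alongside, this approach.

```python
def defect_pixels(image, reference, threshold=30):
    """Coordinates (row, col) where the image differs from the reference by more than threshold."""
    return [
        (r, c)
        for r, (row, ref_row) in enumerate(zip(image, reference))
        for c, (p, q) in enumerate(zip(row, ref_row))
        if abs(p - q) > threshold
    ]


def is_defective(image, reference, threshold=30, max_defect_pixels=0):
    """Flag the part if more pixels differ than the allowed count."""
    return len(defect_pixels(image, reference, threshold)) > max_defect_pixels


golden = [[100, 100, 100, 100] for _ in range(3)]  # image of a known-good part
captured = [row[:] for row in golden]
captured[1][2] = 10  # simulated dark spot, e.g. a scratch or missing feature
```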

Human-robot Interaction and Safety

Cameras allow robots to interact with people efficiently and safely.

Robots use cameras to recognize and follow human motions, facial expressions, and body language, allowing for natural interactions.

Cameras also improve safety by letting robots recognize and react to the presence of people, reducing the risk of accidents and collisions. This applies to many types of robots, including companion, telepresence, and goods-to-person robots.
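One common way to use camera-based person detection for safety is to scale the robot's speed by the distance to the nearest person, stopping entirely inside a protective zone. The sketch below is a simplified illustration of that idea. It assumes the distances come from a person detector combined with depth data. The zone sizes are placeholder values, not figures from any safety standard.

```python
def speed_scale(distance_m, stop_distance_m=0.5, slow_distance_m=2.0):
    """Fraction of full speed allowed given the distance to the nearest person."""
    if distance_m <= stop_distance_m:
        return 0.0  # person inside the protective zone: stop
    if distance_m >= slow_distance_m:
        return 1.0  # nobody close: full speed
    # Ramp linearly between the stop and slow-down distances.
    return (distance_m - stop_distance_m) / (slow_distance_m - stop_distance_m)


def commanded_speed(max_speed, person_distances_m):
    """Speed command given distances (m) to every person the camera currently detects."""
    if not person_distances_m:
        return max_speed
    return max_speed * speed_scale(min(person_distances_m))
```

For example, with the default zones, a person detected 1.25 m away halves the robot's speed.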

Augmented Reality and Virtual Reality

Cameras are combined with augmented reality (AR) and virtual reality (VR) technology to deliver immersive experiences and improve robot perception. Camera images of the real world can be blended with virtual elements, allowing robots to interact with simulated objects and extending their capabilities in simulation, training, and entertainment applications.
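At the pixel level, mixing camera images with virtual elements often comes down to alpha compositing. Each output pixel is a weighted blend, alpha · virtual + (1 − alpha) · camera. The sketch below assumes 8-bit RGB pixels stored as tuples and a per-pixel alpha mask in the range 0 to 1. Real AR pipelines also need camera tracking so that virtual objects stay registered to the scene; that part is not shown.

```python
def blend_pixel(camera_rgb, virtual_rgb, alpha):
    """Blend one virtual pixel over one camera pixel; alpha=1 shows only the virtual pixel."""
    return tuple(round(alpha * v + (1 - alpha) * c) for c, v in zip(camera_rgb, virtual_rgb))


def overlay(camera_image, virtual_image, alpha_mask):
    """Composite a virtual layer over a camera frame (images are lists of rows of RGB tuples)."""
    return [
        [blend_pixel(c, v, a) for c, v, a in zip(cam_row, virt_row, alpha_row)]
        for cam_row, virt_row, alpha_row in zip(camera_image, virtual_image, alpha_mask)
    ]


camera = [[(10, 20, 30), (0, 0, 0)]]
virtual = [[(200, 100, 50), (255, 255, 255)]]
mask = [[0.0, 0.5]]  # left pixel: camera only; right pixel: half-transparent overlay
frame = overlay(camera, virtual, mask)
```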

TechNexion's Contribution to the Development of Vision-guided Robotic Systems

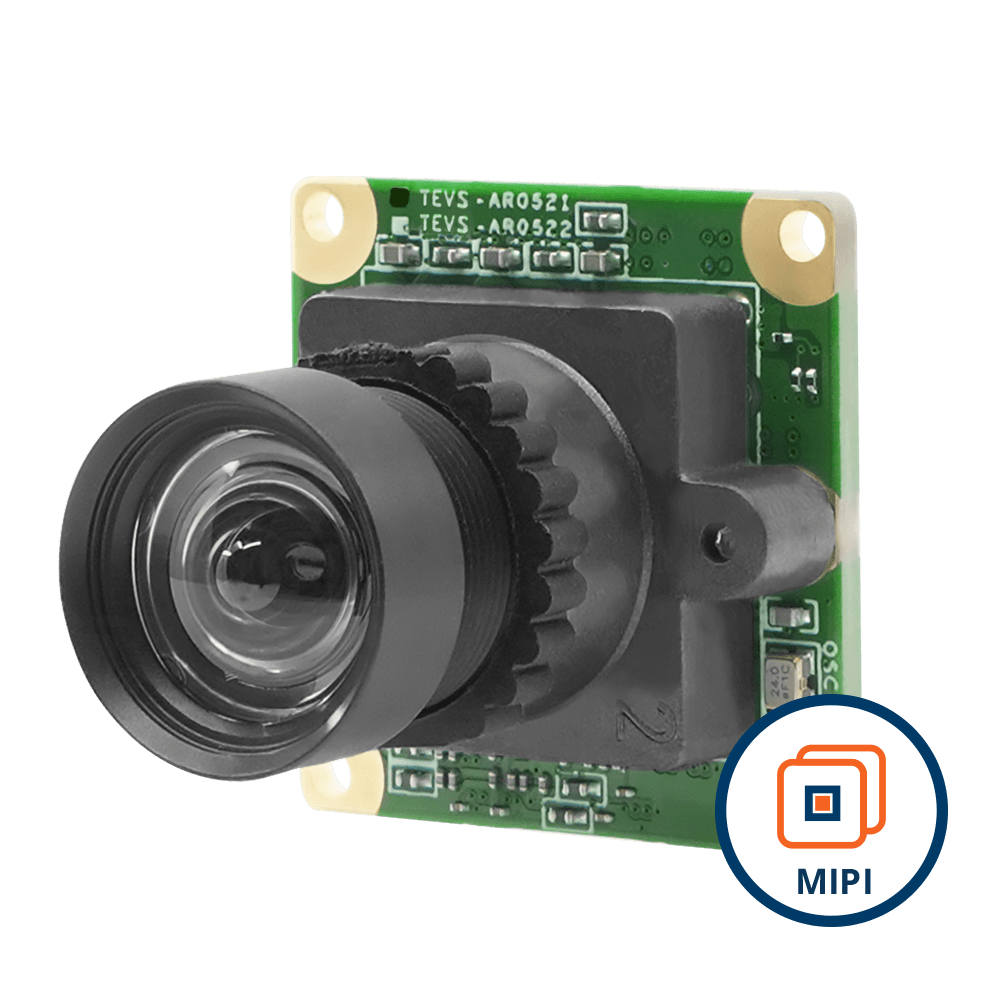

We offer high-quality cameras designed specifically for vision-guided robotics. They provide high-resolution imaging, low-light performance, and high frame rates for reliable and accurate visual data acquisition.

- We develop embedded vision cameras that integrate readily with processors and robots. They are also compatible with ROS (Robot Operating System), which speeds up integration.

- Our cameras can be used for sensor fusion with GPS, LiDAR, millimeter-wave radar, and other sensors, giving robots a comprehensive understanding of their environment and improving perception, obstacle avoidance, and localization.

- Our cameras capture the images and video required for AI-based analysis. Paired with powerful on-board processors, they let robots perform complex visual tasks directly at the edge, improving their real-time decision-making.

- We provide software support, such as camera drivers, SDKs, and APIs, to simplify integrating cameras into robotic platforms. This speeds up development and deployment by ensuring seamless communication between the camera and the robot’s control system.

- We also offer customization options and consultation services tailored to the specific needs of your vision-guided robotics project, addressing its particular challenges to ensure optimal performance and compatibility.

By leveraging TechNexion’s expertise, developers and integrators can unlock the full potential of cameras to transform automation, perception, and interaction in robotics.
