Imagine a robot navigating through a warehouse, effortlessly picking and placing items with precision, avoiding obstacles, and adapting to changing environments—all without human intervention. What makes this possible? The answer lies in depth perception, a crucial aspect of robotic vision systems. Just as human eyes perceive the world in three dimensions, advanced robotic systems rely on depth perception to interpret spatial relationships, distance, and object positioning.

For decades, robots were limited to working in controlled, 2D environments, requiring exact coordinates to function. But now, with the integration of depth sensors, stereo cameras, and vision algorithms, robotics has entered a new era. From autonomous vehicles to industrial automation, depth perception enables robots to “see” the world in 3D. This allows for greater autonomy, accuracy, and decision-making capabilities.

This blog post delves into how depth perception works in robotic systems, the technologies driving it, and its revolutionary impact across industries.

What is Depth Perception? Why is it Important in Robots?

Depth perception refers to the ability to determine the distance and spatial relationship between objects in an environment. In humans, it’s a natural function of our binocular vision, where each eye captures a slightly different view of the world. The brain then processes these two images to build a 3D understanding of the scene.

Robotic systems mimic this process with sensors, cameras, and algorithms. Together, these let machines understand their surroundings in three dimensions rather than only width and height.

An Autonomous Car

For robots, depth perception is vital because it transforms them from simple, task-executing machines into dynamic, problem-solving entities. Without it, robots would struggle to accurately interact with objects in complex or unpredictable environments.

Consider a self-driving car—without depth perception, it would struggle to navigate roads effectively. It would rely solely on GPS coordinates and basic sensors, making it unable to judge the distance between vehicles, pedestrians, or road hazards. This limitation would prevent it from making critical real-time decisions, such as adjusting its speed when another vehicle suddenly brakes or safely merging into traffic.

In essence, depth perception is the foundation of robotic autonomy. It allows robots to perceive their environments more like humans do, making them adaptable and capable of functioning in complex, unstructured environments across various industries.

Use Cases of Depth Sensors in Robotics

Let’s explore the various use cases of depth perception and how it enhances robotic capabilities.

Collision Avoidance

One of the fundamental uses of depth sensors in robotics is collision avoidance. Depth sensors allow robots to detect objects in their path and calculate the distance between themselves and potential obstacles.

By continuously analyzing their surroundings in real-time, robots can predict and avoid collisions, making safe and efficient movement possible. This is especially critical for robots that operate in dynamic environments, where they must interact with objects or people.

The ability to measure distances in real time helps robots respond proactively to obstacles, adjusting their paths to ensure smooth and collision-free operation.
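
As a rough illustration of this idea, the sketch below slows a robot down as the nearest depth reading gets closer and stops it inside a safety margin. The function names, thresholds, and the convention that a reading of zero means "no return" are assumptions made for this example, not part of any particular sensor's API.

```python
def min_obstacle_distance(depths_m, max_range_m=10.0):
    """Return the nearest valid reading in metres, or None if nothing is in range.

    Assumption: readings of 0 (or less) mean "no return" and are ignored.
    """
    valid = [d for d in depths_m if 0.0 < d <= max_range_m]
    return min(valid) if valid else None


def choose_speed(depths_m, cruise_mps=1.0, stop_m=0.5, slow_m=2.0):
    """Pick a forward speed from the nearest obstacle distance.

    Beyond slow_m the robot cruises, inside stop_m it halts, and in between
    the speed is scaled linearly. All thresholds are hypothetical values.
    """
    nearest = min_obstacle_distance(depths_m)
    if nearest is None or nearest >= slow_m:
        return cruise_mps
    if nearest <= stop_m:
        return 0.0
    return cruise_mps * (nearest - stop_m) / (slow_m - stop_m)
```

A real system would usually also account for the robot's own velocity and braking distance; this sketch only shows how a distance measurement can feed directly into a motion decision.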

Object Detection

Object detection is another critical function enabled by depth sensors. By perceiving objects in three dimensions, robots can identify, classify, and localize items in their environment. Depth sensors provide detailed spatial data, allowing the robot to distinguish between objects based on their size, shape, and position.

This ability is key for tasks such as picking and placing items, recognizing objects for sorting, or interacting with tools and equipment. Depth perception enhances the robot’s understanding of its environment. It improves the robot’s overall ability to interact with objects accurately and efficiently.
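
One simple way to localize objects from depth data is to separate pixels that sit in front of a known background and group them into connected regions. The sketch below assumes a fixed camera looking at a flat background at a known distance; `find_objects`, `background_m`, and `min_height_m` are illustrative names, and production systems typically use more robust segmentation or learned detectors.

```python
from collections import deque


def find_objects(depth, background_m, min_height_m=0.05):
    """Label 4-connected regions that are closer to the camera than the background.

    depth is a list of rows of distances in metres. Returns one bounding box
    (row_min, col_min, row_max, col_max) per region, in scan order.
    """
    rows, cols = len(depth), len(depth[0])
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or background_m - depth[r][c] < min_height_m:
                continue
            # Flood fill outward from this foreground pixel.
            queue = deque([(r, c)])
            seen[r][c] = True
            r0, c0, r1, c1 = r, c, r, c
            while queue:
                y, x = queue.popleft()
                r0, c0 = min(r0, y), min(c0, x)
                r1, c1 = max(r1, y), max(c1, x)
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx]
                            and background_m - depth[ny][nx] >= min_height_m):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((r0, c0, r1, c1))
    return boxes
```

Each bounding box, combined with the depth values inside it, gives an estimate of an object's position and size that a pick-and-place routine could use.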

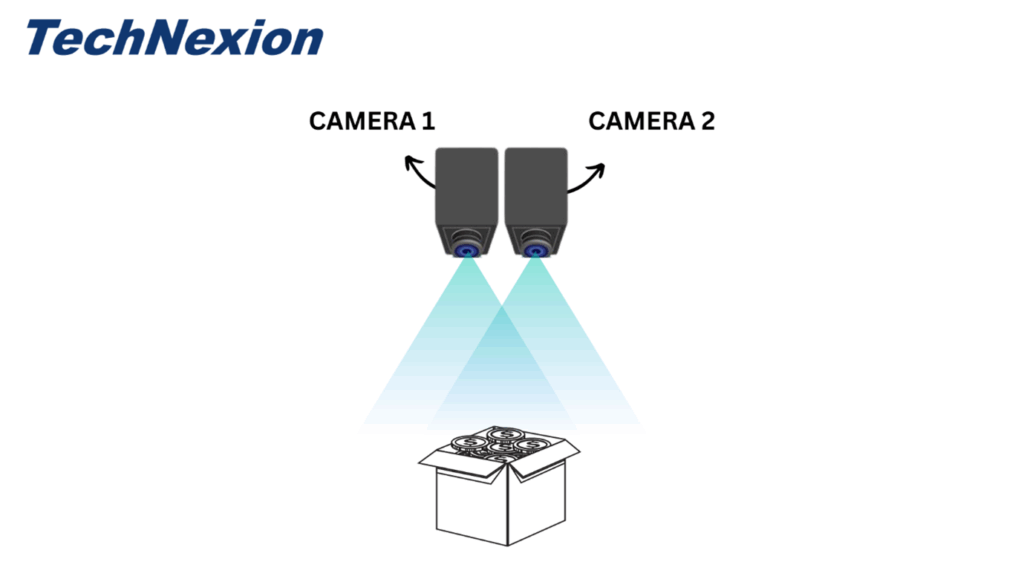

Volumetric Measurement

Volumetric measurement involves determining the size, volume, or dimensions of an object or space. Depth sensors enable robots to perform these measurements with precision by capturing 3D data points from multiple angles. This capability is crucial in industries where the size and shape of objects must be assessed, whether for quality control, packaging, or space management.

Depth sensors allow robots to quickly and accurately measure the volume of irregularly shaped objects or environments, providing valuable data for decision-making and automation processes. This eliminates the need for manual measurement and increases operational efficiency.
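
A minimal sketch of how volume can be estimated from a single overhead depth image follows. It assumes the camera looks straight down at a flat floor and that every pixel covers the same ground area, which is a simplification: in a real perspective camera the footprint of a pixel grows with distance. The function and parameter names are hypothetical.

```python
def volume_from_depth(depth, floor_m, pixel_area_m2):
    """Estimate volume in cubic metres from an overhead depth image.

    depth: rows of camera-to-surface distances in metres.
    floor_m: camera-to-floor distance in metres (assumed known).
    pixel_area_m2: ground area covered by one pixel (assumed constant).
    """
    total = 0.0
    for row in depth:
        for d in row:
            height = floor_m - d  # height of the surface above the floor
            if height > 0.0:
                total += height * pixel_area_m2
    return total
```

For example, a 2 × 2 patch of pixels all measuring 1.5 m with the floor at 2.0 m and 0.01 m² per pixel represents four columns, each 0.5 m tall, for a total of 0.02 m³.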

SLAM Tracking (Simultaneous Localization and Mapping)

SLAM tracking is a complex task that allows robots to build a map of an unknown environment while simultaneously tracking their position within it. Depth sensors are crucial for SLAM because they provide the detailed 3D data necessary for mapping and localization. Robots can identify key features in the environment, track their movement in relation to these features, and construct a real-time map.

This capability is essential for autonomous robots, allowing them to navigate and explore unfamiliar spaces without relying on pre-programmed paths. SLAM enables robots to operate in dynamic or unknown environments with increased autonomy and flexibility.
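
Full SLAM estimates the robot's pose and the map together, which is beyond a short example. The sketch below shows only the mapping half under a strong assumption: the pose is already known. It marks the grid cells where range readings end. The grid layout, `mark_hits`, and its parameters are illustrative choices, not a specific library's interface.

```python
import math


def mark_hits(grid, pose, scan, cell_m=1.0):
    """Mark occupancy-grid cells hit by range readings taken from a known pose.

    grid: list of rows, indexed as grid[row][col], with row along y and col along x.
    pose: (x_m, y_m, heading_rad) of the sensor in the map frame (assumed known).
    scan: list of (bearing_rad, range_m) readings relative to the heading.
    """
    x, y, heading = pose
    for bearing, rng in scan:
        # End point of the beam in map coordinates.
        hit_x = x + rng * math.cos(heading + bearing)
        hit_y = y + rng * math.sin(heading + bearing)
        col, row = int(hit_x // cell_m), int(hit_y // cell_m)
        if 0 <= row < len(grid) and 0 <= col < len(grid[0]):
            grid[row][col] = 1  # readings that land outside the grid are ignored
    return grid
```

In a complete SLAM pipeline, the pose passed in here would itself be refined by matching new scans against the map built so far.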

Types of Depth Sensors Used in Robots

Let’s explore some of the most commonly used depth sensors in robotics: LiDAR, structured light sensors, and stereo vision cameras.

LiDAR (Light Detection and Ranging)

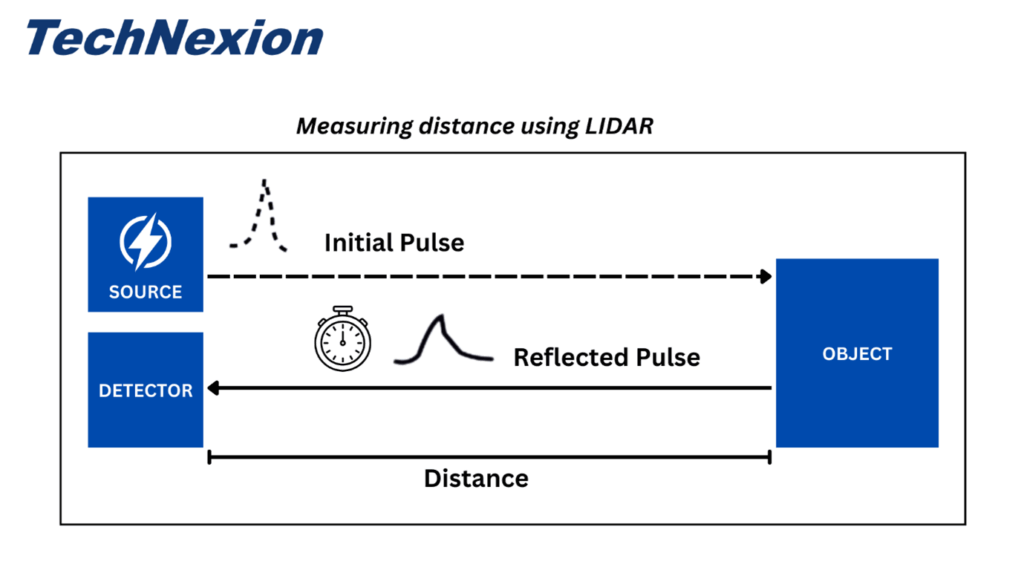

LiDAR is one of the most widely used depth-sensing technologies in robotics, especially in autonomous vehicles and drones. It works by emitting laser pulses and measuring the time it takes for these pulses to reflect off objects and return to the sensor. Based on this time, the system calculates the distance between the sensor and the object, creating a precise 3D map of the environment.

Components of LiDAR:

- Laser Emitter: Projects laser pulses at the target area.

- Receiver (Photodetector): Captures the reflected light pulses.

- Scanner: Typically integrated with a rotating scanning mechanism, such as a spinning mirror or similar system, allowing the laser beam to sweep across the environment and achieve a full 360-degree field of view.

- Processor: Calculates the distance by measuring the time delay between the laser pulse being emitted and reflected back.

How LiDAR Works:

When the laser beam hits an object, it reflects back to the receiver, and the time taken for the light to return is measured. Since light travels at a constant speed, the system can compute the distance using the formula:

distance = (speed of light × Round-Trip Time) / 2

The result is a 3D point cloud, a collection of data points that represents the surrounding environment. A scanning LiDAR can generate a 360-degree view with centimeter-level accuracy. It also works with low latency and, because it supplies its own illumination, is largely unaffected by ambient lighting conditions.
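
The time-of-flight formula above, and the step from individual range readings to point-cloud coordinates, can be sketched as follows. The function names and the angle convention (azimuth measured in the horizontal plane, elevation measured up from it) are assumptions for illustration; real sensors document their own coordinate frames.

```python
import math

SPEED_OF_LIGHT_MPS = 299_792_458.0  # metres per second, in vacuum


def tof_distance_m(round_trip_s):
    """Distance to the target from the pulse's round-trip time in seconds."""
    return SPEED_OF_LIGHT_MPS * round_trip_s / 2.0


def to_point(range_m, azimuth_rad, elevation_rad):
    """Convert one range reading and its beam angles to (x, y, z) in metres."""
    horizontal = range_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )
```

A round trip of 100 nanoseconds corresponds to a target roughly 15 m away, which shows why the processor needs very fine timing resolution to reach centimeter-level accuracy.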

LiDAR is particularly effective in outdoor environments and over long distances. This makes it essential in applications like autonomous driving and drone navigation.

However, LiDAR’s performance can degrade in foggy or rainy conditions, as light can scatter, causing inaccurate readings. Nonetheless, its ability to generate precise 3D maps in real time has made it a leading choice for depth sensing in robotics.

Working principle of LiDAR

Structured Light Sensors

Structured light sensors work by projecting a known pattern—typically a grid or stripe—onto the environment. When this pattern hits an object, it becomes distorted based on the object’s shape and distance from the sensor. By analyzing how the pattern deforms, the system calculates depth information and creates a 3D model of the environment.

Components of Structured Light Sensors:

- Projector: Emits the structured light pattern, often using an infrared light source to minimize visible light interference and enhance the stability and accuracy of depth sensing.

- Camera (Sensor): Captures the reflected and distorted pattern.

- Processor: Analyzes the distortion of the pattern to determine depth.

How Structured Light Sensors Work:

The system projects a structured light pattern onto the scene. As the pattern interacts with objects, it distorts, and the camera captures this deformation. The processor compares the captured image with the original, undistorted pattern and uses triangulation to compute the depth of each point.
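
One common way to express this triangulation is the reference-plane model: the sensor stores how the pattern looks on a flat surface at a known distance, then converts the sideways shift of each pattern feature into depth. The sketch below assumes a rectified projector and camera separated by a horizontal baseline, with shifts counted as positive when the surface is closer than the reference plane. All names and the sign convention are assumptions for this example.

```python
def depth_from_pattern_shift(shift_px, ref_depth_m, focal_px, baseline_m):
    """Depth in metres from the shift of a pattern feature against a reference image.

    Uses 1/Z = 1/Z_ref + shift / (f * b), where f is the focal length in pixels
    and b is the projector-to-camera baseline in metres.
    """
    inverse_depth = 1.0 / ref_depth_m + shift_px / (focal_px * baseline_m)
    if inverse_depth <= 0.0:
        raise ValueError("shift is not consistent with a surface in front of the sensor")
    return 1.0 / inverse_depth
```

With a focal length of 600 pixels, a 7.5 cm baseline, and a reference plane at 1 m, a feature that has not moved lies at 1 m, while a shift of 45 pixels places the surface at 0.5 m.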

Structured light sensors are highly accurate for short-range depth sensing and are commonly used in applications like 3D scanning, facial recognition, and gesture tracking. However, these sensors perform best in controlled environments with little ambient light. They may face challenges outdoors where sunlight can interfere with the pattern.

Stereo Vision Cameras

Working principle of stereo cameras

Stereo vision is advantageous because it operates passively without requiring an active light source, making it well-suited for outdoor environments. It also performs well in complex scenes, particularly with textured objects. However, it struggles with featureless surfaces such as blank walls or glass, where finding corresponding points is difficult. Additionally, real-time disparity computation can impose a significant processing load on edge devices, requiring a careful balance between performance and power consumption.
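
Stereo depth is usually recovered by finding, for each pixel in the left image, its match in the right image and converting the horizontal shift (the disparity) into depth. The sketch below does this for a single pixel on one scanline using a sum-of-absolute-differences window. It assumes rectified images, so that matches lie on the same row. The function names and window sizes are illustrative, and real pipelines use far more optimized matchers.

```python
def best_disparity(left_row, right_row, x, half_window=2, max_disparity=16):
    """Disparity in pixels that best matches the window around left_row[x].

    Assumes rectified images: a point at column x in the left image appears
    at column x - d in the right image. Ties keep the smallest disparity.
    """
    lo, hi = x - half_window, x + half_window + 1
    if lo < 0 or hi > len(left_row):
        raise ValueError("window does not fit inside the row")
    best_d, best_cost = None, float("inf")
    for d in range(max_disparity + 1):
        if lo - d < 0:
            break  # shifted window would leave the right image
        cost = sum(abs(left_row[i] - right_row[i - d]) for i in range(lo, hi))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth Z = f * B / d in metres, or None when the disparity is not positive."""
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px
```

On a uniform row every candidate shift has the same cost, so the returned disparity is arbitrary. This is the featureless-surface problem described above. The inner loop also runs once per pixel per candidate disparity, which hints at why real-time matching is demanding on edge devices.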

The Power of Combining Embedded Cameras and 3D Cameras

Vision systems work even more effectively when 2D embedded cameras are combined with 3D sensors like LiDAR. Consider a partially autonomous car. Using LiDAR, it detects obstacles such as people and other cars on the road and modifies its course in response. When the driver takes manual control, camera modules that provide a surround view are also needed to eliminate blind spots.

Here, it is the combination of 2D and 3D cameras that allows the car to utilize its full potential by enhancing safety and functionality. Similarly, robots and smart surveillance systems will also find the integration of 2D and 3D imaging useful.
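
A basic building block for this kind of 2D and 3D fusion is projecting a 3D point into the camera image, so that a depth measurement can be attached to a pixel. The sketch below uses the standard pinhole camera model and assumes the point has already been transformed into the camera's coordinate frame (x right, y down, z forward) through an extrinsic calibration that is not shown. The function name and parameter values are hypothetical.

```python
def project_to_pixel(point_cam, fx, fy, cx, cy):
    """Project a 3D point in the camera frame onto the image plane.

    fx, fy: focal lengths in pixels; cx, cy: principal point in pixels.
    Returns (u, v) pixel coordinates, or None if the point is behind the camera.
    """
    x, y, z = point_cam
    if z <= 0.0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)
```

Once a LiDAR point lands on a pixel, the color or detection label at that pixel can be paired with the point's measured distance.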

Robotic Applications Where Depth Perception is Used

Below are some of the key robotic applications that heavily use depth perception to enhance performance and operational efficiency.

Warehouse Robots

In the modern era of e-commerce and fast-paced supply chains, warehouse robots have become indispensable. These robots are used for tasks such as sorting, picking, and moving goods. They are often deployed in large, dynamic environments where depth perception is essential for safety and productivity.

Depth sensors, like LiDAR and stereo vision cameras, enable warehouse robots to perceive obstacles, shelves, and moving objects in real-time. With accurate depth data, robots can autonomously navigate through narrow aisles, avoid collisions with humans or other machines, and ensure they pick items from the correct locations.

Additionally, depth perception allows warehouse robots to stack items efficiently and load them into containers based on their volume and shape, optimizing the space used in the warehouse.

For example, when a robot retrieves an item from a high shelf, it needs to understand the object’s exact distance, orientation, and volume to successfully grab and transport it without damaging the product. This capability, made possible by depth perception, significantly improves warehouse efficiency and reduces operational errors.

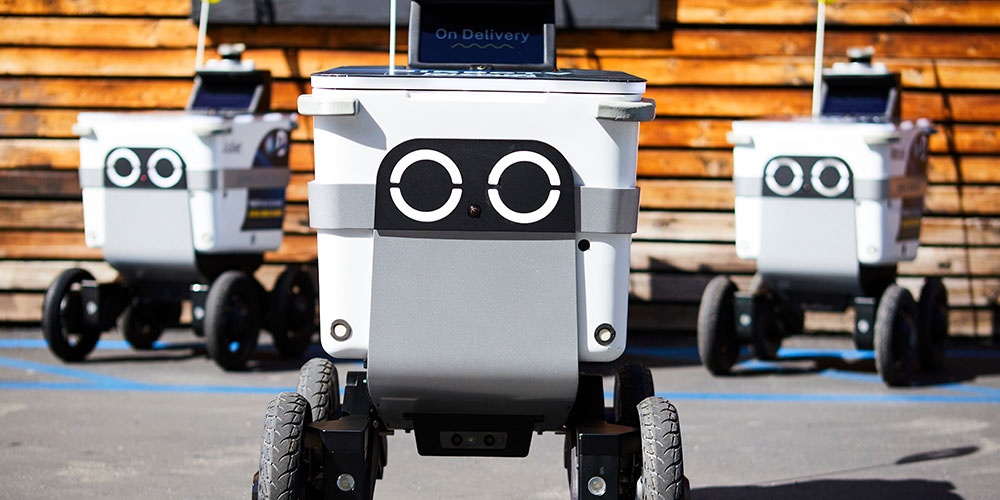

Delivery Robots

The rise of delivery robots is transforming the last-mile delivery process. These robots are designed to deliver goods—ranging from groceries to parcels—directly to consumers. Whether they are navigating sidewalks, crossing streets, or entering buildings, delivery robots rely on depth perception to move safely and efficiently in complex, unpredictable environments.

Depth sensors help these robots detect obstacles, pedestrians, and vehicles, allowing them to adjust their path accordingly. For example, when approaching a crowded sidewalk, a delivery robot equipped with LiDAR or stereo vision can calculate the distance between itself and pedestrians. It can then adjust its speed and path to avoid collisions.

Depth perception is also vital when the robot needs to climb stairs, maneuver through narrow spaces, or enter elevators—scenarios that require detailed spatial awareness to ensure smooth operation.

Furthermore, depth sensors help delivery robots accurately assess drop-off points. Whether the target is a doorstep, mailbox, or designated delivery zone, the robot can make sure packages are left in the correct location.

Patrol and Security Robots

Security and patrol robots are being increasingly deployed in public spaces, corporate offices, and even residential areas to monitor, detect, and respond to security threats. Depth perception plays a pivotal role in enabling these robots to perform their tasks autonomously while maintaining a high level of situational awareness.

Equipped with depth sensors, patrol robots can scan their environment for unusual activity, detect intruders, and identify potential threats based on movement and distance. For example, a security robot patrolling a parking lot at night can use depth sensors to identify unauthorized vehicles, monitor individuals’ movements, and even recognize suspicious objects left behind.

Depth perception also enables these robots to navigate complex environments, such as multi-floor buildings or outdoor areas with varying terrain. By accurately mapping their surroundings, they can move between different zones, identify hidden spaces, and even recognize blocked paths that may indicate suspicious activity.

Agricultural Robots

An agricultural robot

Agriculture is another industry that is seeing rapid adoption of robotic systems, with depth perception playing a crucial role in enhancing the precision and efficiency of farming tasks. Agricultural robots, also known as agri-bots, use depth sensors to perform activities such as planting, harvesting, weeding, and monitoring crops.

For instance, robots involved in harvesting need to assess the size, shape, and position of crops in order to harvest them efficiently. Depth perception helps these robots judge whether produce is ready to pick by analyzing its 3D characteristics, such as size and shape, typically alongside color information from a 2D camera.

With the help of structured light sensors or stereo vision cameras, agricultural robots can also navigate fields, avoid obstacles like rocks or uneven terrain, and precisely apply fertilizers or pesticides only where needed.

Autonomous Drones

Autonomous drones are used across industries for tasks such as surveying, mapping, infrastructure inspection, and even delivery services. Depth perception is critical to the operation of drones, allowing them to avoid obstacles, maintain stable flight, and interact with objects in 3D space.

Drones equipped with LiDAR, structured light sensors, or stereo cameras can create detailed 3D maps of the terrain below them. This can enable applications like aerial surveying and monitoring.

For example, when inspecting power lines or wind turbines, drones use depth sensors to measure the distance between themselves and the infrastructure, ensuring that they can perform close-up inspections without collision.

Depth perception also allows drones to navigate autonomously through challenging environments, such as forests or urban areas with tall buildings, where GPS alone is insufficient for precise navigation.

TechNexion – Cameras for Robotics Applications

TechNexion offers cutting-edge embedded vision cameras tailored for robotics applications. With advanced features such as high resolution, HDR, global shutter, and excellent low-light performance, these cameras are ideal for AI-driven robotic systems.

They support a variety of interfaces and are designed for easy integration into diverse computing platforms, enabling smooth deployment in complex robotic environments. Additionally, TechNexion provides ready-to-integrate system-on-modules equipped with essential features for real-time vision processing and control.

Contact us to learn more about how our vision solutions can help you accelerate development and performance.
