In today’s fast-paced digital world, where instant connectivity and real-time communication are paramount, the demand for efficient streaming solutions has never been higher.

Whether it’s live video streaming, remote surveillance, or autonomous navigation, low-latency cameras have emerged as a game-changer. With their lightning-fast response times, these cameras are revolutionizing how we capture and transmit visual data.

But what exactly is low latency streaming in embedded vision? And why is it so relevant in today’s world? Let’s find out.

What is Latency? Why is Low-latency Streaming Important?

Latency is the time it takes for data to travel from its source to its destination. In an embedded vision system, latency is typically measured from the moment a change occurs in front of the camera (in its field of view) to the moment the captured data is displayed. However, this definition is too narrow, because displaying video is only one of many use cases for low-latency camera streaming. In practice, the end point of the measurement is not necessarily when the image is displayed, but when the image becomes usable for application processing.

Every step of the image transfer process, including image capture, compression, transmission, decompression, and display, contributes to the overall end-to-end latency.

In real-time control applications, such as collision avoidance or real-time navigation, the pipeline is simpler: the video data is processed directly on the embedded device without compression, network streaming, or decompression, and the system can often benefit from zero-copy transfer techniques.

When designing an embedded vision system, consider the main phases that influence end-to-end latency:

- The first source of delay is the time required to capture and process camera images. At this stage, the raw camera feed is converted into a format suitable for further processing.

- Next, the data must be transferred from the camera sensor to the host processor memory. From there, it becomes available for additional processing, including compression, or application-specific processing.

- If the use case requires streaming, network transmission adds the next delay. Some delay is unavoidable as the video data traverses the various network components.

- Finally, on the receiver side, client buffering, decoding, and display add latency before the image is shown.
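To make the idea of an end-to-end latency budget concrete, the following minimal Python sketch adds up per-stage delays for the phases listed above. The stage names and millisecond values are hypothetical placeholders, not measurements of any particular system, and the model assumes each frame passes through the stages one after another.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    """One phase of the capture-to-use pipeline (hypothetical model)."""
    name: str
    latency_ms: float


def end_to_end_latency(stages: list[Stage]) -> float:
    """Sum per-stage delays, assuming each frame goes through the stages in sequence."""
    return sum(s.latency_ms for s in stages)


# Hypothetical example values for a streaming pipeline, for illustration only.
streaming = [
    Stage("capture and ISP", 40.0),
    Stage("sensor-to-host transfer", 5.0),
    Stage("encode", 10.0),
    Stage("network", 15.0),
    Stage("client buffer, decode, display", 30.0),
]

# On-device control (e.g. collision avoidance) stops once the frame is in
# host memory, so the encode, network and decode stages drop out.
on_device = streaming[:2]

total = end_to_end_latency(streaming)
for s in streaming:
    print(f"{s.name:32s} {s.latency_ms:6.1f} ms ({100 * s.latency_ms / total:4.1f}%)")
print(f"streaming total: {total:.1f} ms")
print(f"on-device total: {end_to_end_latency(on_device):.1f} ms")
```

Breaking the budget down this way shows which stage dominates and is therefore worth optimizing first.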

When developing your embedded vision system, carefully consider each of these phases to maximize system performance and achieve the desired latency. Many embedded vision applications need low latency, especially machines that must make real-time decisions (for example, a warehouse robot). Low-latency camera streaming enables near-real-time image capture, transmission, and reception.

Other embedded vision applications where low-latency streaming is critical include:

- Autonomous or semi-autonomous industrial vehicles (such as forklifts, tractors, mining vehicles, and heavy construction equipment)

- Surround-view systems used in trucks and automobiles to eliminate blind spots

- Delivery drones that fly autonomously

- Autonomous checkout systems

- Telepresence robots used for remote operations

How is Latency Measured?

Measuring latency, usually expressed in seconds or milliseconds (ms), can be tricky. Accurate measurement requires the camera's clock to be precisely synchronized with the display device's clock. One way to measure latency with minimal error is to use the camera's timestamp overlay text feature, which burns the capture time into each frame so that it can be compared with the time at which the frame appears on screen.

This method measures the end-to-end delay of a camera-based system: the time between when the lens captures a frame and when that frame is displayed on a monitoring device.

Because the timestamps used for the latency calculation are obtained only at frame capture, the result can be off by up to one frame interval. The frame rate therefore limits the precision of the measurement.

As a result, this technique is not recommended at low frame rates, or when the measurement must be more accurate than one frame interval.
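The timestamp-overlay calculation and its one-frame-interval limit can be sketched as follows. This assumes you have already collected pairs of readings: the capture timestamp burned into each frame, and the synchronized reference-clock time at which that frame was seen on the display. The function name and sample values are hypothetical.

```python
from statistics import mean


def overlay_latency(samples, fps):
    """
    Estimate latency from timestamp-overlay readings.

    samples: list of (capture_ts_ms, display_ts_ms) pairs, both read from
             synchronized clocks (an assumption of this method).
    fps:     camera frame rate.

    Returns (mean latency in ms, worst-case error in ms).
    """
    latencies = [display - capture for capture, display in samples]
    # The overlay only changes once per captured frame, so each reading can be
    # off by up to one frame interval.
    frame_interval_ms = 1000.0 / fps
    return mean(latencies), frame_interval_ms


# Hypothetical readings from three consecutive frames of a 30 fps stream.
readings = [(1000, 1120), (1033, 1150), (1067, 1190)]

latency, error = overlay_latency(readings, fps=30)
print(f"{latency:.1f} ms +/- {error:.1f} ms")
```

With these numbers the estimate is about 120 ms with an error bound of about 33 ms. At 5 fps the error bound would grow to 200 ms, larger than the latency being measured, which is why the method is not recommended at low frame rates.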

Another measurement technique is to have a program force a change in the camera's field of view (such as flashing a light or LED) and then measure how long it takes for that change to appear in the camera's video feed. We showed how to do this in one of our video tutorials.

The advantage of this approach is that it removes network latency from the measurement entirely, so you can focus on the latency of the camera and embedded processing system itself. In addition, because no external display or measurement device is involved, the technique is easily repeatable, which makes it useful for checking that software updates do not degrade latency.
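A minimal sketch of the LED-flash technique follows. It assumes the program records, on a single host clock (for example with Python's `time.monotonic()`), the moment it switches the LED on and the moment each frame arrives, together with a brightness value for each frame. Because both timestamps come from the same clock, no clock synchronization is needed. How the LED is driven and how frames and brightness values are obtained are left out; the function and sample data below are hypothetical.

```python
from statistics import median


def flash_latency_ms(trigger_ms, frames, threshold):
    """
    Return the delay between switching the LED on and the first frame that shows it.

    trigger_ms: host-clock time at which the LED was switched on.
    frames:     iterable of (arrival_ms, mean_brightness) for each frame the
                application receives, on the same host clock.
    threshold:  brightness level treated as "LED visible".

    Returns None if no frame after the trigger reaches the threshold.
    """
    for arrival_ms, brightness in frames:
        if arrival_ms >= trigger_ms and brightness >= threshold:
            return arrival_ms - trigger_ms
    return None


# Hypothetical trace: frames roughly every 33 ms, LED switched on at t = 100 ms.
trace = [(0, 20), (33, 21), (66, 20), (99, 19), (132, 20), (165, 22), (198, 180), (231, 185)]
print(flash_latency_ms(100, trace, threshold=100))

# Repeating the test and reporting the median and worst case makes it
# usable as a regression check after software updates.
trials = [98, 104, 95, 131, 101]  # hypothetical results of repeated runs
print(median(trials), max(trials))
```

Using a threshold rather than exact pixel values keeps the check robust to sensor noise; the threshold itself would need to be tuned for the actual LED and scene.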

Factors Causing Latency in an Embedded Camera

Some factors that can cause latency in cameras are:

- Cameras handle important processes, including autofocus, autoexposure, white balance correction, and image stabilization, in internal processing pipelines. These algorithms take time to analyze the scene and apply the required corrections before an image is captured. The delay they introduce varies with the camera type and the complexity of the image. Automatic exposure control (AEC) can be a significant factor: in low-light conditions, the frame interval can lengthen considerably to allow a longer integration time, which directly increases read-out latency from the camera.

- Image enhancement also adds latency. Cameras use numerous techniques to improve the image, including noise reduction, gain, dynamic range adjustment (HDR), and tone mapping. To apply these enhancements, the camera must analyze the captured image data, which adds delay before the enhanced image can be displayed or transmitted.

- Embedded vision systems typically compress video, using codecs such as H.264 or H.265, to reduce the size of the data for transmission, downstream video processing, and storage. The encoder examines the video frames, removes redundancy within each frame and between successive frames, and encodes the result in compressed form. This is called spatio-temporal compression. Achieving higher compression ratios takes more time and processing, which increases the delay before the compressed data is ready for transmission.

- Some camera systems buffer a certain amount of video data before sending or processing it further. The buffer acts as a reserve for processing or smooths out variations in data flow (this technique is used in applications like photogrammetry, where large amounts of data need to be transmitted). Because there is a lag between when video is captured and when it leaves the buffer, the buffer's size and the time it takes to fill can add latency.

- Cameras that record both video and audio may suffer audio delay. Before audio signals can be sent or stored, they must be processed, encoded, and synchronized with the video frames, and each of these steps adds delay.

- The network architecture significantly influences the total latency of streaming. Because signals take time to propagate, the distance between the camera and the streaming destination adds delay. Heavy traffic or limited capacity can cause network congestion, which leads to packet queuing and delays.

- When a device or application receives the camera's video stream, decoding and rendering can introduce additional latency. On the receiving end, time is needed to decode the compressed video stream, synchronize audio and video, and render the frames for display; how long this takes depends on the device's processing capabilities, its buffer sizes, and the network conditions on the receiver's end.
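The effect of automatic exposure and buffering on capture-side delay can be estimated with simple frame-time arithmetic. The sketch below uses a deliberately simplified model, which is an assumption rather than the behavior of any specific sensor: the frame interval cannot be shorter than the exposure time chosen by AEC, delivering a frame takes about one frame interval, and each frame waiting ahead of it in a buffer adds one more interval. Real sensors add readout and blanking overhead on top of this.

```python
def effective_frame_interval_ms(nominal_fps, exposure_ms):
    """Simplified model: the frame interval stretches to fit the exposure time."""
    return max(1000.0 / nominal_fps, exposure_ms)


def capture_side_delay_ms(nominal_fps, exposure_ms, buffered_frames=0):
    """
    Rough capture-side delay (simplified model): about one frame interval to
    deliver the frame, plus one interval per frame queued ahead of it.
    """
    interval = effective_frame_interval_ms(nominal_fps, exposure_ms)
    return interval * (1 + buffered_frames)


# Hypothetical scenarios for a sensor configured for 60 fps.
print(capture_side_delay_ms(60, exposure_ms=5))                      # bright scene
print(capture_side_delay_ms(60, exposure_ms=50))                     # low light, AEC lengthens exposure
print(capture_side_delay_ms(60, exposure_ms=50, buffered_frames=3))  # low light plus a 3-frame buffer
```

In this model, low light alone triples the delay from about 16.7 ms to 50 ms, and a three-frame buffer then quadruples it to 200 ms.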

Methods to Reduce Latency in Embedded Cameras

When it comes to low-latency camera streaming, several key factors can greatly impact the overall performance. Let’s take a closer look at some of these factors:

- Network Bandwidth: Sufficient network bandwidth is crucial for low-latency camera streaming. A fast, stable network connection ensures that data can be transmitted quickly between the camera and the streaming destination without significant delays.

- Network Latency: Network latency is the time data packets take to travel from the camera to the streaming destination and back. Low network latency is essential for real-time streaming, because high network latency, and variation in it, adds directly to the delay of the video stream and forces larger receive buffers.

- Encoding and Compression: Low-latency video streaming requires efficient encoding and compression. Modern codecs like H.264 or H.265 significantly reduce the size of video data, enabling faster transmission, and hardware-accelerated encoding can decrease latency further. If bandwidth is not a concern, purely spatial compression techniques such as MJPEG, which compress each frame independently, can be used instead.

- Hardware and Software: Optimizing both hardware and software is crucial for low-latency streaming. High-quality cameras with short processing times and efficient streaming software play a vital role in minimizing latency, and choosing the right interface and cable also helps.

- Content Delivery Network (CDN): Delivery network infrastructure is crucial for efficient video streaming. The choice of server infrastructure and CDN determines how close to viewers the stream is served, and therefore directly affects latency. A well-configured CDN with multiple Points of Presence (PoPs) delivers the stream closer to viewers, minimizing latency and buffering interruptions. This is especially important in live broadcasting applications such as automated sports broadcasting.

- Buffering and Playback Settings: The buffer size and playback settings on the receiving end play a crucial role in perceived latency. Large buffers introduce delay, while small buffers can cause frequent buffering interruptions. Tuning these settings to your streaming requirements can significantly reduce latency while keeping playback smooth.

- Device and Network Capabilities: The capabilities of receiving devices, such as smartphones and computers, as well as network congestion, can affect latency. Older or underpowered devices may struggle to decode and display high-quality video streams in real time, and network congestion, especially during peak usage hours, can delay data transmission. Accounting for both is crucial for a smooth user experience.
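The bandwidth, compression, and buffering factors above can be compared with a quick calculation of how long one frame takes to cross the link and how much delay a playback buffer adds. The sketch assumes a 1920 x 1080 raw frame at 2 bytes per pixel (for example, YUV 4:2:2) and a hypothetical 50 KB compressed frame, and it ignores protocol overhead.

```python
def raw_frame_bytes(width, height, bytes_per_pixel):
    """Size of one uncompressed frame in bytes."""
    return width * height * bytes_per_pixel


def transmit_ms(frame_bytes, link_mbps):
    """Serialization time for one frame; 1 Mbit/s carries 1000 bits per ms."""
    return frame_bytes * 8 / (link_mbps * 1000)


def playback_buffer_ms(buffered_frames, fps):
    """Delay added by a receive buffer holding the given number of frames."""
    return buffered_frames * 1000.0 / fps


raw = raw_frame_bytes(1920, 1080, 2)  # assumed 2 bytes/pixel, e.g. YUV 4:2:2
compressed = 50_000                   # hypothetical compressed frame size in bytes

print(f"raw frame on 1 Gbit/s:          {transmit_ms(raw, 1000):.1f} ms")
print(f"compressed frame on 1 Gbit/s:   {transmit_ms(compressed, 1000):.1f} ms")
print(f"compressed frame on 20 Mbit/s:  {transmit_ms(compressed, 20):.1f} ms")
print(f"3-frame playback buffer @30fps: {playback_buffer_ms(3, 30):.1f} ms")
```

Under these assumptions, raw 1080p video at 30 fps needs close to 1 Gbit/s (about 995 Mbit/s before overhead), which is why compression matters on constrained links, while a receive buffer of only three frames already adds 100 ms.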

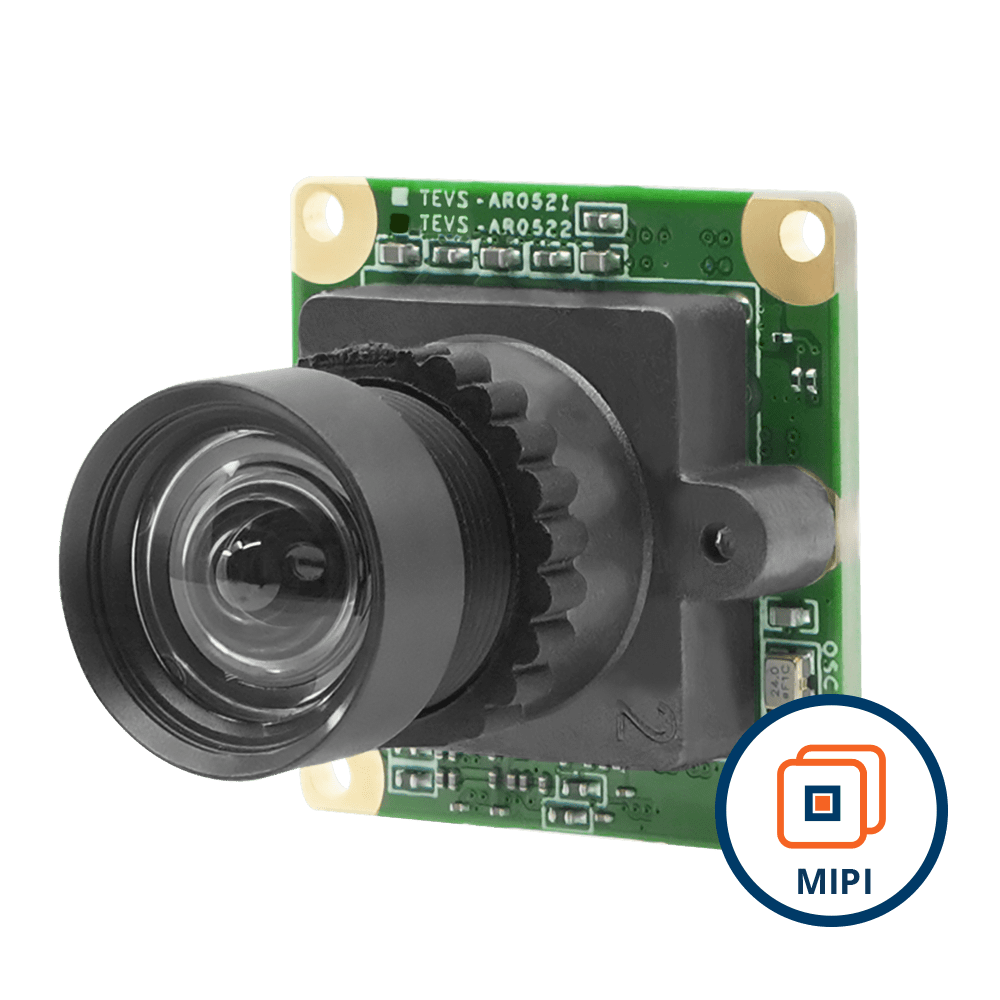

TechNexion: Offering Low Latency Cameras

Introducing TechNexion, your go-to company for cutting-edge camera solutions. With a strong focus on low latency, we specialize in developing embedded cameras and computing modules that cater to various applications, including industrial automation, robotics, automotive, and sports analytics.

When it comes to low-latency cameras, TechNexion takes pride in providing camera modules and systems that offer real-time imaging with minimal delay. We have perfected the art of achieving low latency by employing advanced technologies and optimization techniques.

Our low-latency cameras are engineered with advanced hardware optimization, firmware and software fine-tuning, real-time image processing, and low-latency compression. Network optimization is pivotal in our camera systems, as it enables seamless integration into real-time systems. This means you can capture and transmit high-quality video with minimal lag, whether you use a single camera or a multi-camera system.

What sets TechNexion apart is our dedication to customization and support. We understand that every project has unique requirements, so our team works closely with you to customize the camera settings to your needs. With features designed to optimize network speed, integration with real-time systems, and low-latency compression, our cameras deliver seamless, high-speed visuals.

Experience the future of technology with TechNexion’s cutting-edge camera solutions!

FPD-Link III Aluminium Enclosed Camera with onsemi AR0521 5MP Rolling Shutter with Onboard ISP + IR-Cut Filter with C Mount Body

VLS-FPD3-AR0521-CB

- onsemi AR0521 5MP Rolling Shutter Sensor

- Designed for Low Light Applications

- C-Mount for Interchangeable Lenses

- FAKRA Z-Code Automotive Connector

- Plug & Play with Linux OS & Yocto

- VizionViewer™ configuration utility

- VizionSDK for custom development
