Ever wondered how we accurately map the shape of the Earth’s surface? One way is LiDAR, which stands for Light Detection and Ranging. Scientists use it widely in topographical studies to understand the texture of the Earth’s surface. From hilly terrains to deserts and oceans, LiDAR has proved to be an effective way to explore the planet.

But is LiDAR limited to geographical and topographical studies? What about its other possibilities? Can it work alongside embedded cameras?

Let’s find out in this article. We will dive deep into:

- What is LiDAR and what is its working principle?

- Its advantages and challenges.

- Its applications and use cases in embedded vision.

- How can it work with embedded cameras?

What is LiDAR?

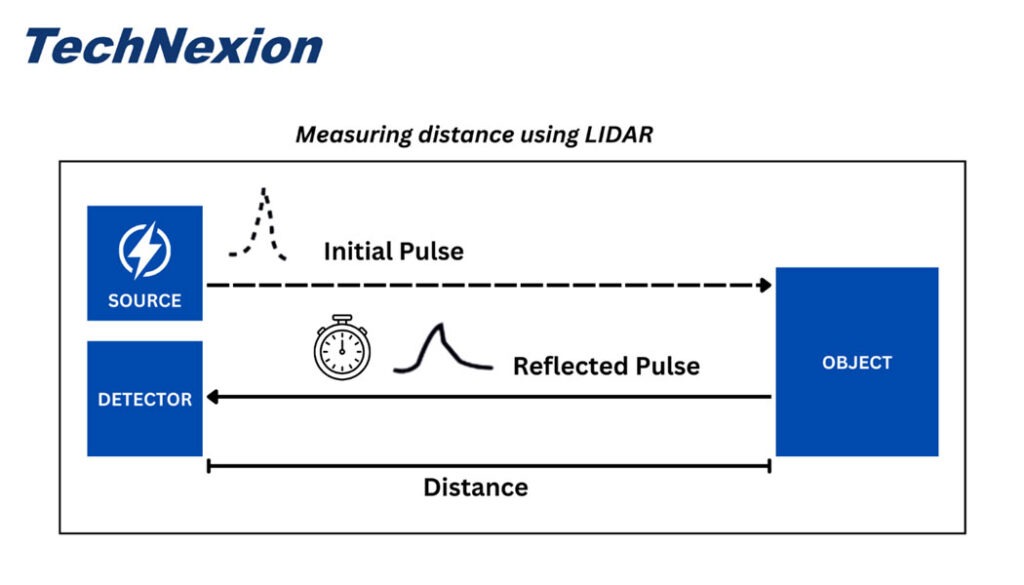

LiDAR is a depth sensing technique used to measure the distance to, and between, the objects in a scene. It works by emitting a pulsed laser beam at an object and calculating the distance from the time the light takes to return to the device.
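The time-of-flight relationship described above can be sketched in a few lines. This is a minimal illustration, not any vendor's implementation: the function names are hypothetical, and it assumes the pulse travels at the vacuum speed of light and that the round-trip time has already been measured.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def distance_from_round_trip(round_trip_s: float) -> float:
    """Return the one-way distance in metres for a measured round-trip time.

    Hypothetical helper for illustration only.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the object and back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_from_distance(distance_m: float) -> float:
    """Return the round-trip time in seconds for a target at distance_m."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S
```

For example, a round trip of one microsecond corresponds to a target roughly 150 m away, while a target 10 m away returns the pulse in about 67 nanoseconds.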

The primary components of a LiDAR sensor are a light source, a scanner, a detector, and a GPS receiver. The light source emits the light, while the detector captures the light reflected from the object. The scanner deflects the beam to achieve a wide field of view. The GPS receiver locates the position of the carrying device or vehicle (such as an airplane or drone).

The image below illustrates the working principle of a LiDAR system.

Working principle of LiDAR

Depending on the use case, the light source can be either near-infrared or in the visible spectrum. For example, LiDAR used in autonomous vehicles mostly uses infrared light, while an airplane performing bathymetry (the process of measuring the depth of water in oceans, rivers, and lakes) typically uses green light.

In addition to the components mentioned above, a LiDAR system usually includes an IMU (Inertial Measurement Unit), which measures the device’s force, angular rate, and orientation.
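To see how these components fit together, the sketch below turns a single laser return into a point in a world frame. It is a simplified illustration under stated assumptions: the function names are hypothetical, the sensor frame is taken as x forward, y left, z up, the platform position is assumed to be already converted from GPS into local metric coordinates, and only the heading (yaw) from the IMU is applied, with roll and pitch ignored.

```python
import math


def beam_to_sensor_xyz(range_m, azimuth_rad, elevation_rad):
    """Convert one return (range plus scanner angles) to x, y, z in the sensor frame.

    Assumed convention: azimuth is measured counter-clockwise from the x axis,
    elevation upward from the horizontal plane.
    """
    horizontal = range_m * math.cos(elevation_rad)
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return (x, y, z)


def sensor_to_world(point_xyz, platform_xyz, yaw_rad):
    """Place a sensor-frame point in the world frame.

    platform_xyz: platform position (assumed to come from the GPS receiver).
    yaw_rad: platform heading (assumed to come from the IMU); roll and pitch
    are ignored in this simplified sketch.
    """
    x, y, z = point_xyz
    px, py, pz = platform_xyz
    cos_yaw, sin_yaw = math.cos(yaw_rad), math.sin(yaw_rad)
    # Rotate about the vertical axis, then translate by the platform position.
    return (
        px + cos_yaw * x - sin_yaw * y,
        py + sin_yaw * x + cos_yaw * y,
        pz + z,
    )
```

A real airborne system would apply the full three-axis orientation and a lever-arm offset between the GPS antenna and the scanner; the yaw-only version is kept here to show the idea in a few lines.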

What are the advantages of LiDAR?

One of the biggest advantages of LiDAR is its high accuracy compared to other depth sensing techniques such as stereo. Below are a few more advantages of LiDAR:

- Speed: The speed of measuring depth is critical in many applications, especially autonomous vehicles, where decisions must be made in real time. Because the laser pulse travels at the speed of light, a single range measurement takes only nanoseconds to microseconds depending on the distance, making LiDAR an extremely effective solution for autonomous navigation.

- Ability to measure depth in complex landscapes: LiDAR can measure depth even in areas like forests – making it a feasible solution for autonomous vehicles and airplanes used for depth mapping in areas that might not be easily accessible.

- Wide industry acceptance: Owing to its accuracy and other advantages, LiDAR has become a de facto standard for depth sensing across industries such as agriculture, industrial automation, and remote sensing.

Challenges of LiDAR

Though LiDAR is a widely accepted technology for measuring depth and surface mapping, it comes with a few disadvantages such as:

- High initial and maintenance cost: Compared to stereo technology, the initial cost of setting up a LiDAR system can be higher. It has more components and might require more regular maintenance. These add to the total cost of the system.

- Atmospheric interference: Particles in the air, such as rain, fog, and dust, can scatter or absorb the laser light, which makes recording the reflected light less reliable.

- Size: LiDAR is bulkier than other depth sensing devices. Since it has multiple hardware components, it might not be suitable for very compact embedded vision applications.

- Safety concerns: Some LiDARs operate at wavelengths and power levels that could be harmful to human eyes, and exposure can cause severe damage over the long term. However, modern LiDAR systems tend to use eye-safe light sources.

- Separating the signal and reflection: Since the reflected light comes back in the same direction as the emitted light, differentiating between the two might be challenging. Utmost care has to be taken while doing this to prevent any miscalculation.

Embedded vision applications of LiDAR

While LiDAR is extremely popular when it comes to topographic studies, its applications in the industrial and commercial sectors are game-changing. The technology has made autonomous navigation a reality with high levels of accuracy. From robots to tractors and automated forklifts, LiDAR has automated what was once seen as impossible.

Here are some of the popular embedded vision applications where LiDAR has helped make huge leaps:

- Autonomous mobile robots

- Autonomous tractors

- Automated forklifts

- Delivery drones

Autonomous mobile robots

With the advent of AI, robots have become capable of automating more and more tasks. They find applications in:

- Material handling and transportation

- Picking and placing

- Harvesting

- Teleexistence or Teleperformance

- Patrolling and security

- Product and package delivery

Robots can be operated manually for all these tasks. However, modern robots called AMRs (Autonomous Mobile Robots) use technologies like LiDAR to measure depth and navigate autonomously.
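As a rough illustration of how an AMR might use LiDAR ranges for navigation, the sketch below finds the nearest return inside a forward-facing sector of a single 2D scan and decides whether to stop. It is not taken from any particular robot stack: the function names, the scan layout (evenly spaced angles, 0 rad pointing straight ahead), and the stop distance are all assumptions made for the example.

```python
import math


def nearest_obstacle_ahead(ranges_m, angle_min_rad, angle_step_rad, half_sector_rad):
    """Return the smallest valid range within +/- half_sector_rad of straight ahead.

    ranges_m: ranges from one 2D scan, ordered by increasing angle.
    Returns math.inf when no valid return falls inside the sector.
    """
    nearest = math.inf
    for index, range_m in enumerate(ranges_m):
        angle = angle_min_rad + index * angle_step_rad
        inside_sector = abs(angle) <= half_sector_rad
        # Skip missing returns, which scanners often report as inf, NaN, or 0.
        valid = math.isfinite(range_m) and range_m > 0
        if inside_sector and valid:
            nearest = min(nearest, range_m)
    return nearest


def should_stop(ranges_m, angle_min_rad, angle_step_rad,
                half_sector_rad=math.radians(30), stop_distance_m=0.5):
    """Return True when an obstacle ahead is closer than stop_distance_m."""
    nearest = nearest_obstacle_ahead(
        ranges_m, angle_min_rad, angle_step_rad, half_sector_rad
    )
    return nearest < stop_distance_m
```

A production robot would feed such scans into a mapping and path-planning pipeline rather than a single threshold, but the sector check shows how raw ranges become a navigation decision.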

Autonomous tractors

Operating tractors manually is labor intensive, which is a growing challenge as labor becomes more expensive. In addition, there is an increasing need to improve efficiency in agricultural processes. This has led product innovators to develop autonomous tractors. These new-age vehicles can navigate autonomously and perform functions like plowing, bug & weed detection, and even spreading fertilizer automatically.

All of these functions require a highly accurate depth sensing system, and LiDAR is a strong fit. It helps tractors measure the distance to obstacles ahead, locate bugs & weeds precisely, and spread fertilizer accurately.

Automated forklifts

Forklifts are used for lifting heavy materials in construction sites, factories, mines, quarries, and warehouses. They no longer need to be operated manually. With depth sensing technologies like LiDAR, they can plan paths and navigate, as well as measure the distance to the objects to be lifted and handled.

Automated Forklift

Delivery drones

Delivery drones might sound like something from a science fiction movie. But many companies are already experimenting with them. They can deliver packages to the right destination in a completely automated manner. Unlike ground vehicles such as delivery robots, drones need to detect obstacles in all directions. This necessitates a highly accurate and reliable depth measurement technology.

LiDAR is a strong choice here. With a near-infrared light source, drones can also ‘see’ at night, when ambient light is limited.

Alternatives to LiDAR in embedded vision

The two most popular depth sensing technologies used in embedded vision apart from LiDAR are stereo and structured light. Each comes with its own advantages and disadvantages. Let’s have a quick look at what they are.

What is a stereo camera?

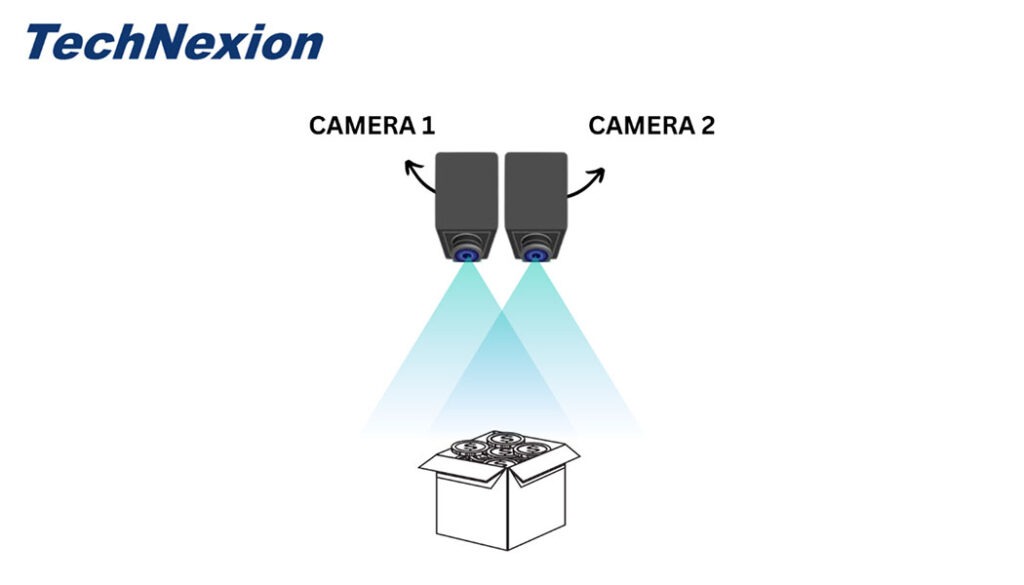

Stereo cameras are depth cameras that work on the same principle as human binocular vision to find the depth of the objects in a scene. They triangulate rays from multiple viewpoints to create a 3D image of an object.

The below image illustrates how this works:

Working principle of stereo

Here, the system uses two or more cameras to capture 2D images of the object. The correlation between these images is then used to calculate the depth. While stereo cameras are cheaper than LiDAR-based systems, they require a stereo algorithm to calculate depth, which adds to the processing load of the host system.
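Once the stereo algorithm has matched a feature between the two images, depth follows from triangulation. The sketch below shows the standard relation for a rectified two-camera pair, depth = focal length × baseline / disparity. The function name and the numbers in the usage note are illustrative assumptions, not values from a specific camera.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Return depth in metres for one matched pixel pair.

    Assumes a rectified stereo pair: both cameras share the same focal length
    (in pixels) and are separated horizontally by baseline_m. disparity_px is
    the horizontal shift of the same feature between the left and right image.
    """
    if disparity_px <= 0:
        # Zero disparity means the point is at infinity or the match failed.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px
```

With a focal length of 800 pixels and a 10 cm baseline, a disparity of 20 pixels gives a depth of 4 m. Finding the disparity for every pixel is the expensive part, and that is the processing load mentioned above.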

What is a structured light camera?

Structured light technology uses a combination of cameras and light sources to measure the depth of a scene. However, unlike LiDAR, which times the return of a laser pulse, structured light systems calculate depth by capturing the distortions that the object causes in a projected light pattern.

The light might follow different patterns such as dots, stripes, or fringes. The geometrical deformation of the pattern is then used to accurately calculate the depth.

How can LiDAR work with embedded cameras?

Embedded cameras can be integrated with LiDAR or structured light systems and function side by side. While embedded cameras capture images and videos of the surroundings, depth sensors like LiDAR and structured light systems supply the distance data needed for autonomous navigation.

Note that embedded cameras can also be coupled as stereo pairs to form a 3D stereo camera. A structured light device likewise uses cameras to capture the deformed patterns used for depth calculation. Hence, embedded cameras are not the ‘enemies’ of depth. Rather, they can serve as depth measurement devices themselves or be integrated with depth sensing systems to perform other functions. For smooth integration of embedded camera modules with LiDAR, an external trigger mechanism is recommended so that camera frames and LiDAR measurements are captured at the same moment.
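One common way to combine the two sensors is to project LiDAR points into the camera image, so that each pixel region gains a measured distance. The sketch below illustrates this with a pinhole camera model. It is an assumption-laden example: the function names are hypothetical, the camera frame is taken as x right, y down, z forward, and the rotation, translation, and intrinsic values would in practice come from a calibration step that this article does not cover.

```python
def lidar_to_camera(point_lidar_xyz, rotation_3x3, translation_xyz):
    """Apply a rigid transform (rotation, then translation) to one LiDAR point.

    rotation_3x3 and translation_xyz are the assumed LiDAR-to-camera extrinsics.
    """
    return tuple(
        sum(rotation_3x3[row][col] * point_lidar_xyz[col] for col in range(3))
        + translation_xyz[row]
        for row in range(3)
    )


def project_to_pixel(point_cam_xyz, fx, fy, cx, cy):
    """Project a camera-frame point to pixel coordinates with a pinhole model.

    fx, fy: focal lengths in pixels; cx, cy: principal point in pixels.
    Returns None for points at or behind the camera plane.
    """
    x, y, z = point_cam_xyz
    if z <= 0:
        return None
    u = fx * x / z + cx
    v = fy * y / z + cy
    return (u, v)


# Illustrative usage with made-up calibration values.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
point_cam = lidar_to_camera((1.0, 0.6, 4.0), IDENTITY, (0.0, -0.1, 0.0))
pixel = project_to_pixel(point_cam, fx=800.0, fy=800.0, cx=640.0, cy=360.0)
```

The projection only lines up if the image and the LiDAR point were captured at the same time, which is why a shared external trigger matters when the platform or the scene is moving.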

TechNexion - the premier embedded vision solutions provider

Our cameras come with features such as high resolution, NIR sensitivity, low light performance, global shutter & rolling shutter, and high frame rate, among many others. We have state-of-the-art 3D cameras in our pipeline that we will be releasing in the future. Learn more about our camera solutions here: embedded vision cameras.